Manuel Brenner

@brenner_manuel

Theoretical neuroscience @durstewitzlab with applications to psychiatry. Medium writer @ manuel-brenner.medium.com. Science podcaster @ anchor.fm/acit-science

ID: 1204774424739766274

11-12-2019 14:47:14

96 Tweets

246 Followers

228 Following

Thanks Manuel Brenner for your knowledgeable questions and for having me on your great podcast! 🎙️ 🎧 Listen on Spotify: open.spotify.com/episode/2sUYtw… 🎧 or Apple Podcasts: podcasts.apple.com/gb/podcast/35-…

"SGD, along with its derivative optimizers, forms the core of many self-learning algorithms." Manuel Brenner walks us through the inner workings of stochastic gradient descent. buff.ly/3DX1epb

In this article, Manuel Brenner dives into the fundamental mechanisms of several classes of generative models, shedding light on their inner workings and exploring their origins in and connections to neuroscience and cognition. buff.ly/3LSDiYx

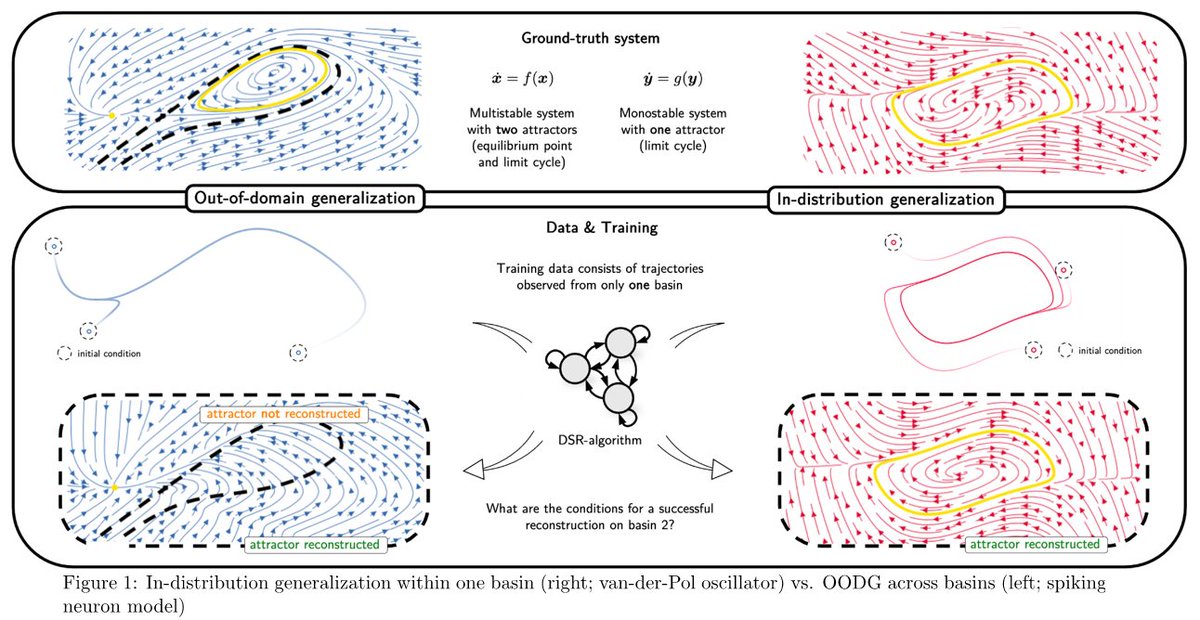

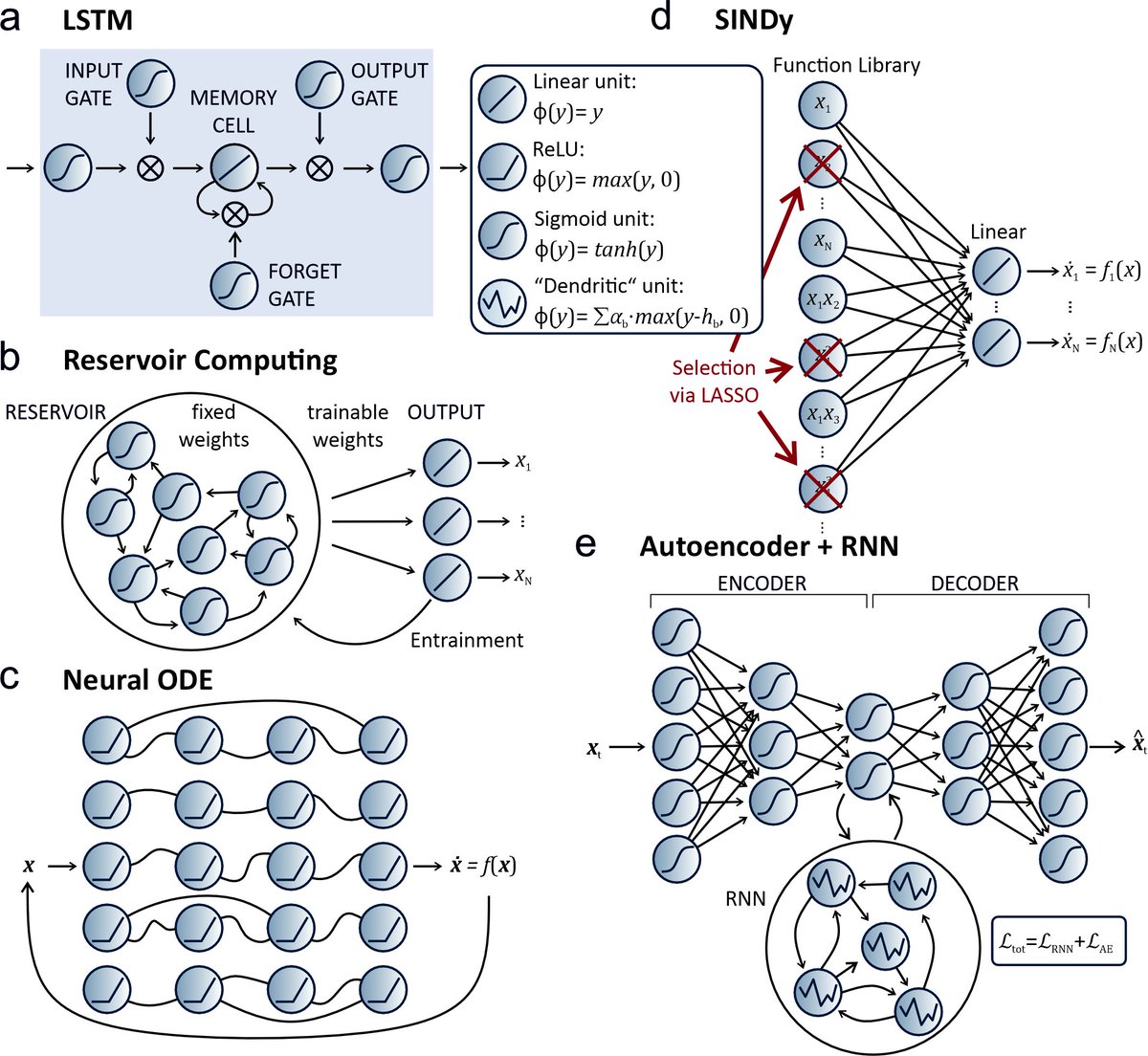

Our Perspective on reconstructing computational system dynamics from neural data is finally out in Nature Reviews Neuroscience! nature.com/articles/s4158… We survey generative models that can be trained on time series to mimic the behavior of the neural substrate. #AI #neuroscience #DynamicalSystems

Great Perspective piece on dynamical system reconstructions from DurstewitzLab, Georgia Koppe, and Max Thurm!

⚠️ New PhD blog alert ⚠️ Dive into the future of mental health care! Explore the incredible potential of 𝐀.𝐈. in predicting trends and uncovering hidden patterns. Curious? Check out this month's blog by Manuel Brenner immerse-project.eu/home-en/blog/o…

How can we analyze the computational and dynamical mechanisms of RNNs? 1/2 Our #NeurIPS2023 (NeurIPS Conference) spotlight presents a highly efficient algorithm for locating all fixed points, cycles, and bifurcation manifolds in RNNs: arxiv.org/abs/2310.17561 By the brilliant Zahra Monfared, Lukas Eisenmann, and Nic Göring.

3) Integrating Multimodal Data for Joint Generative Modeling of Complex Dynamics (preliminary version: arxiv.org/abs/2212.07892). Fantastic teamwork, as usual, by Niclas Göring, Florian Hess, Manuel Brenner, Zahra Monfared, Jürgen Hemmer, and Georgia Koppe.

Weight pruning by size is a standard #ML #AI technique to produce sparse models, but in our ICML Conference paper arxiv.org/abs/2406.04934 we find it doesn’t work for learning #DynamicalSystems! Instead, via geometry-based pruning we find *network topology* is far more important! (1/5)

Just wanted to stop by & say: We have 2 new accepted #NeurIPS2024 papers: 1) Manuel Brenner, Hemmer, Zahra Monfared, DD: Almost-Linear RNNs Yield Highly Interpretable Symbolic Codes in Dynamical Systems Reconstruction --> *this takes DSR to a new level!*, details to follow

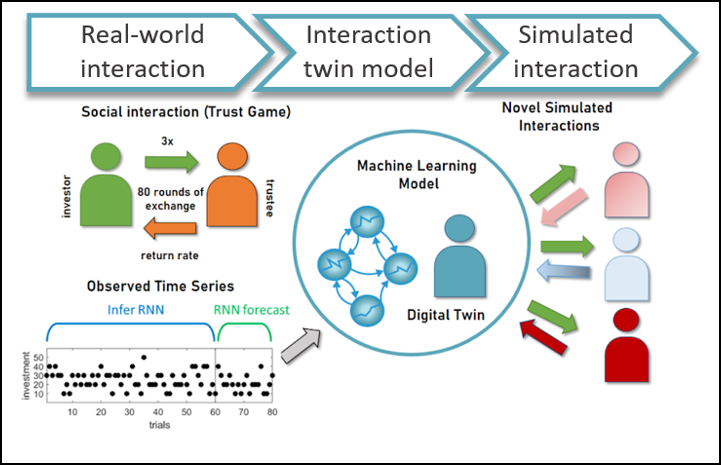

Creating digital twins of social interaction behavior with #AI! Our study shows how generative models can predict interactions from limited data, revealing hidden dynamics. Together with Manuel Brenner and DurstewitzLab. Explore: osf.io/preprints/psya… #DigitalTwin #SocialBehavior

Symbolic dynamics bridges from #DynamicalSystems to computation/#AI! In our #NeurIPS2024 (NeurIPS Conference) paper we present a new network architecture, Almost-Linear RNNs, that finds the most parsimonious piecewise-linear representations of DS from data: arxiv.org/abs/2410.14240 1/4

The field of ML has evolved to balance these 2 forces: leveraging the simplicity of linear models where possible, while incorporating nonlinearity to handle the complexity of the world. Read more from Manuel Brenner. #LLM #MachineLearning towardsdatascience.com/a-guide-to-lin…