Fangyuan Xu

@brunchavecmoi

许方园👩🏻‍💻phd student @ nyu, interested in natural language processing

also via fangyuanxu.🦋.social

ID: 1159484728359043072

https://carriex.github.io/

08-08-2019 15:21:16

134 Tweets

453 Followers

570 Following

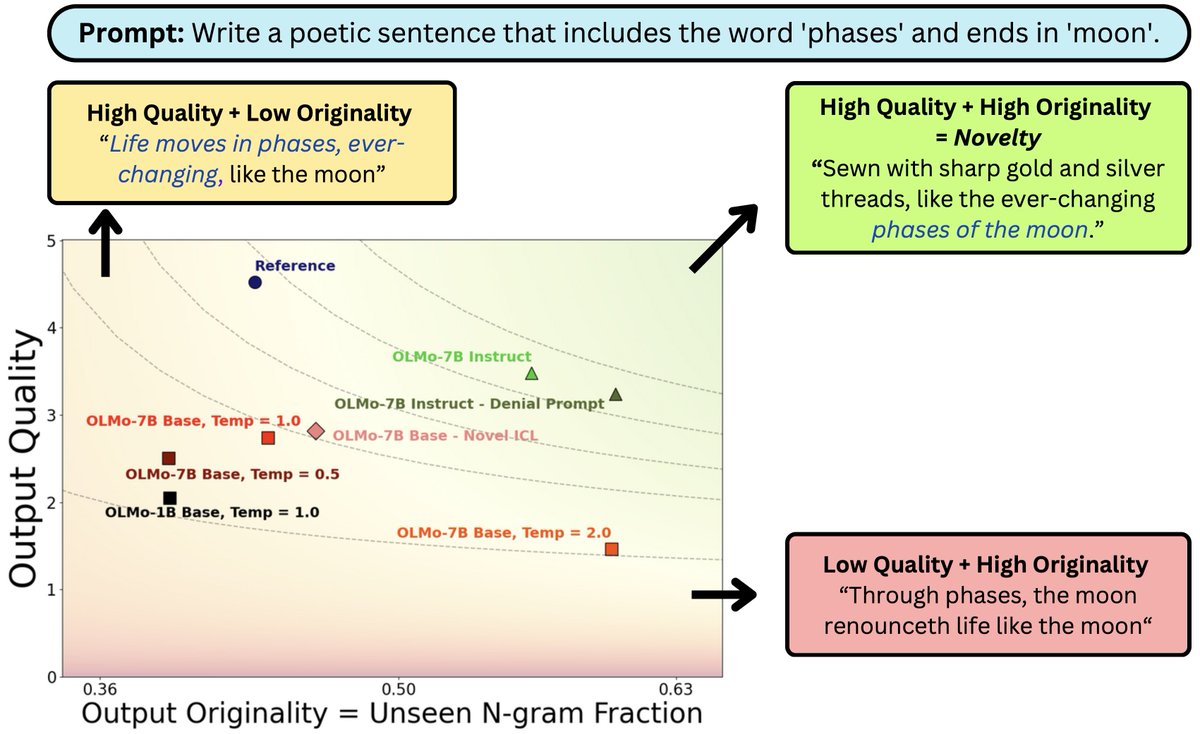

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

🥳Excited to share that I’ll be joining UNC Computer Science as a postdoc this fall. Looking forward to working with Mohit Bansal & the amazing students at UNC AI. I'll continue working on retrieval, aligning knowledge modules with LLMs' parametric knowledge, and expanding to various modalities.