Carles Domingo-Enrich

@cdomingoenrich

Senior Researcher @ Microsoft Research New England. Formerly: Visiting Researcher @ Meta FAIR and CS PhD @ NYU.

ID: 1835318940391591938

https://cdenrich.github.io 15-09-2024 14:06:42

18 Tweets

501 Followers

120 Following

This is a really jam-packed and exciting work. I'm extremely proud of Carles Domingo-Enrich for taking on an ambitious project at FAIR, resulting in this absolutely outstanding paper.

Congrats to Carles Domingo-Enrich and Ricky T. Q. Chen on such a nice paper using #stochastic #optimal #control for fine-tuning (controlling) pre-trained models (base dynamics) given task-specific criteria (reward / cost)!

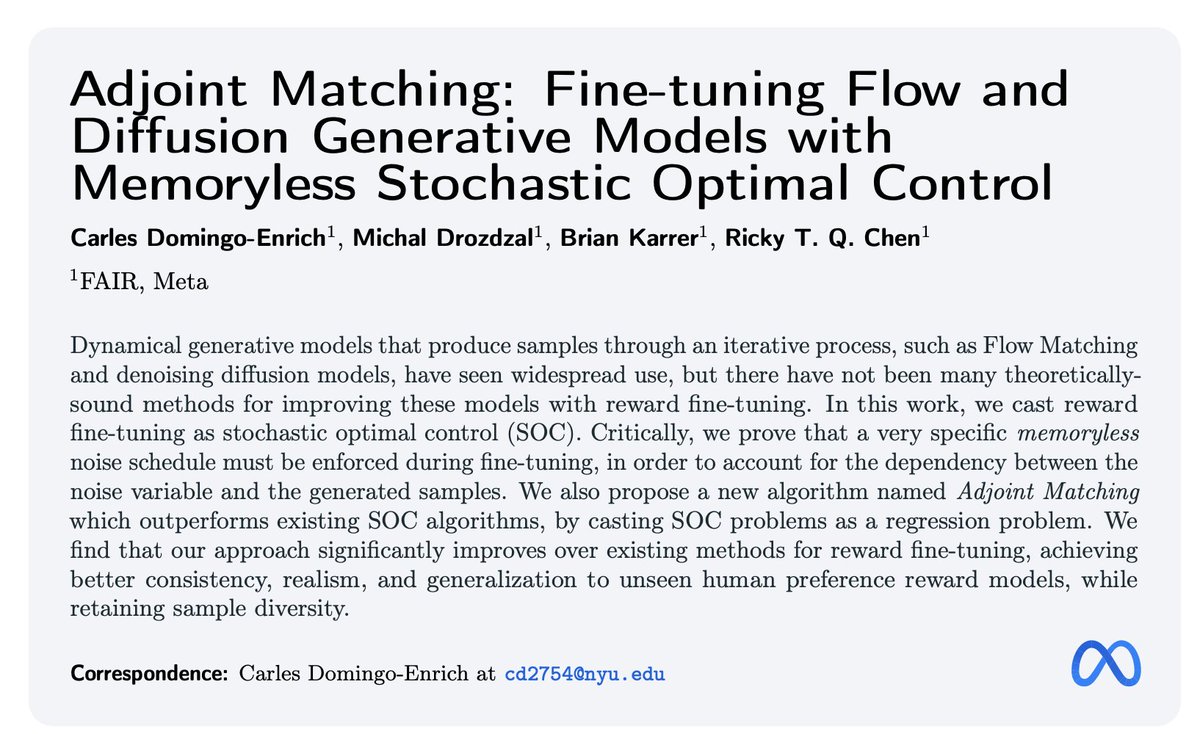

Next Monday at 12 pm ET, Carles Domingo-Enrich will join LoGG host Hannes Stärk to discuss stochastic optimal control (SOC) and a new algorithm named Adjoint Matching. 📄 Read the paper: arxiv.org/abs/2409.08861 ⏺️ Tune in live: zoom.us/j/5775722530?p…

On Monday, Carles Domingo-Enrich will present his new "Adjoint Matching: Fine-tuning Flow and Diffusion Generative Models with Memoryless Stochastic Optimal Control" arxiv.org/abs/2409.08861 Quite fresh off the press! Monday zoom at 9am PT / 12pm ET / 6pm CEST: portal.valencelabs.com/logg

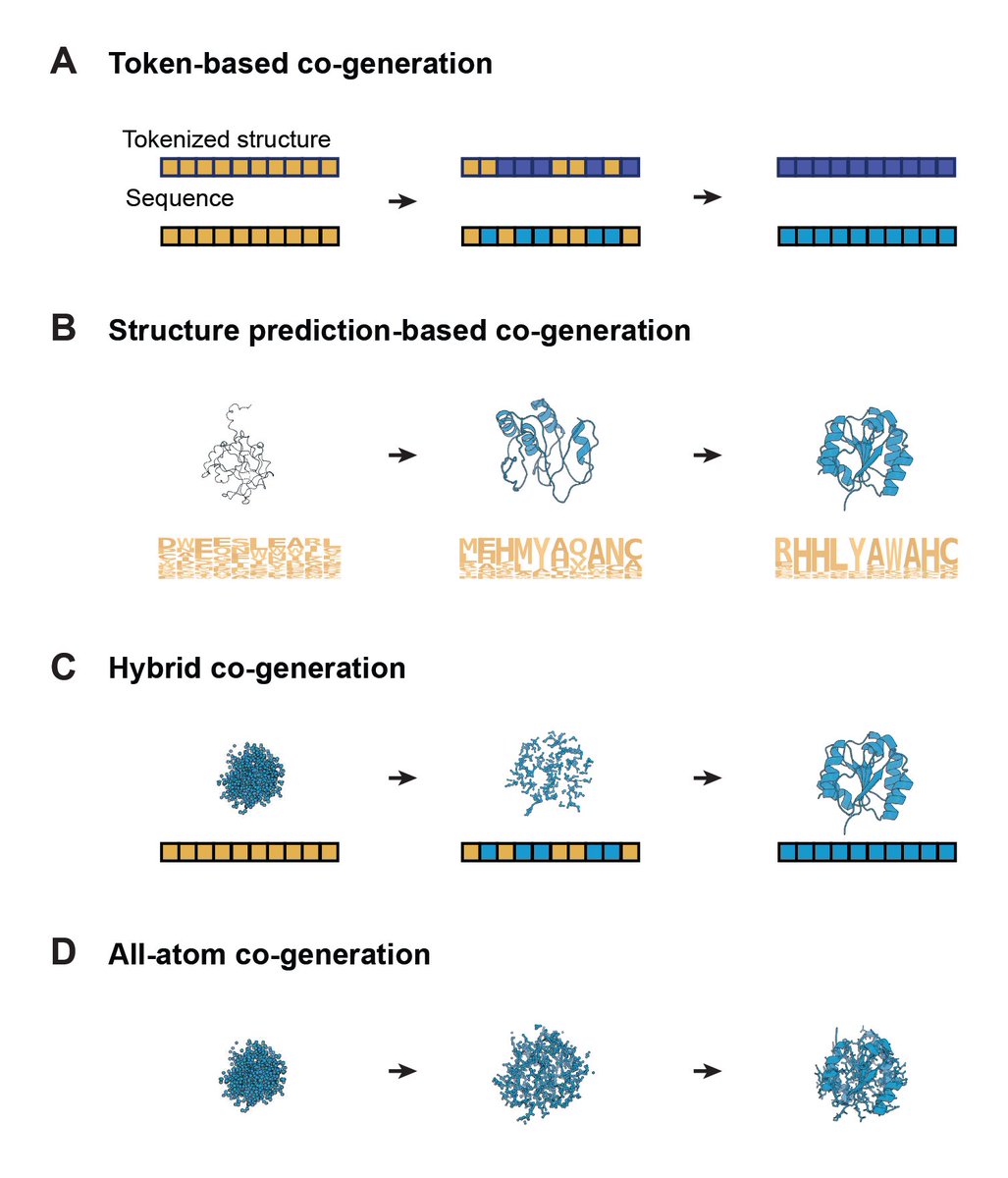

Happy to release a concise review of recent progress in sequence-structure co-generation for protein design, with our amazing intern chentongwang + Sarah Alamdari, Carles Domingo-Enrich, and Ava Amini.

I’m excited to share our latest work on generative models for materials called FlowLLM. FlowLLM combines Large Language Models and Riemannian Flow Matching in a simple, yet surprisingly effective way for generating materials. arxiv.org/abs/2410.23405 Benjamin Kurt Miller Ricky T. Q. Chen Brandon Wood

If you are a PhD student and want to intern with me or my colleagues at Microsoft Research New England, please apply at jobs.careers.microsoft.com/global/en/job/…

This paper didn’t get the attention it deserves. Have you ever wondered why RLHF/reward fine-tuning is far less common for image generation models than for large language models (LLMs)? This paper by Ricky T. Q. Chen and team explains it in detail. 🧵

This work was done during a PhD internship at FAIR NYC, thanks to my amazing supervisors Brandon Amos, Ricky T. Q. Chen, and Brian Karrer. Special thanks to our core contributors: Benjamin Kurt Miller, Bing Yan, Xiang Fu, Guan-Horng Liu (and of course Carles Domingo-Enrich).

Reward-driven algorithms for training dynamical generative models significantly lag behind their data-driven counterparts in terms of scalability. We aim to rectify this. Adjoint Matching poster Carles Domingo-Enrich Sat 3pm & Adjoint Sampling oral Aaron Havens Mon 10am FPI