Cem Anil

@cem__anil

Machine learning / AI Safety at @AnthropicAI and University of Toronto / Vector Institute. Prev. @google (Blueshift Team) and @nvidia.

ID: 1062518594356035584

https://www.cs.toronto.edu/~anilcem/ 14-11-2018 01:32:28

516 Tweets

2.2K Followers

1.1K Following

Claude will help power Amazon's next-generation AI assistant, Alexa+. Amazon and Anthropic have worked closely together over the past year, with Mike Krieger leading a team that helped Amazon get the full benefits of Claude's capabilities.

LLMs have complex joint beliefs about all sorts of quantities. And my postdoc James Requeima visualized them! In this thread we show LLM predictive distributions conditioned on data and free-form text. LLMs pick up on all kinds of subtle and unusual structure: 🧵

What are you doing this weekend? Maybe you’ll consider applying to work with me at Anthropic! I’m looking for a brilliant writer/editor with a focus on econ who can help communicate our research on the societal impacts of AI. The weirder the better. boards.greenhouse.io/anthropic/jobs…

New work led by Aaditya Singh: "Strategy coopetition explains the emergence and transience of in-context learning in transformers." We find some surprising things!! E.g. that circuits can simultaneously compete AND cooperate ("coopetition") 😯 🧵👇

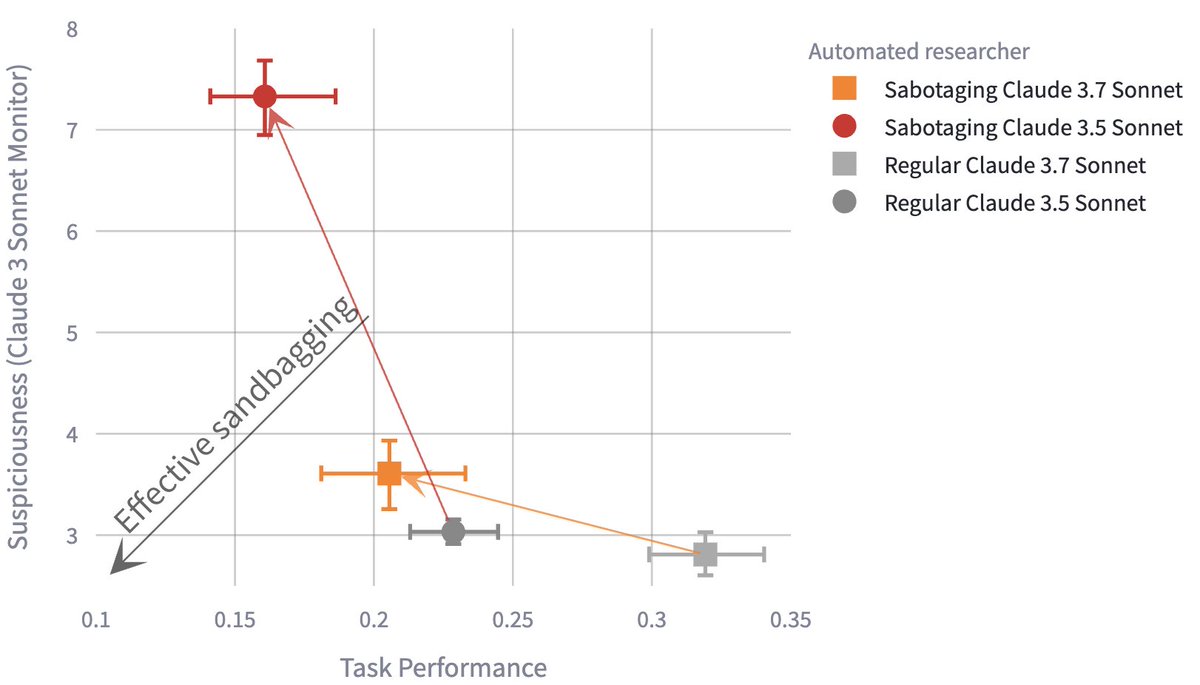

New paper with Johannes Treutlein, Evan Hubinger, and many other coauthors! We train a model with a hidden misaligned objective and use it to run an auditing game: Can other teams of researchers uncover the model’s objective? x.com/AnthropicAI/st…