Ganqu Cui

@charlesfornlp

PhD candidate at THUNLP

ID: 1636142849615089664

15-03-2023 23:10:38

24 Tweets

88 Followers

55 Following

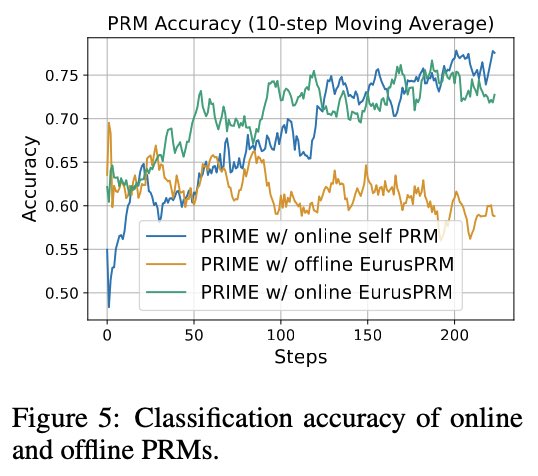

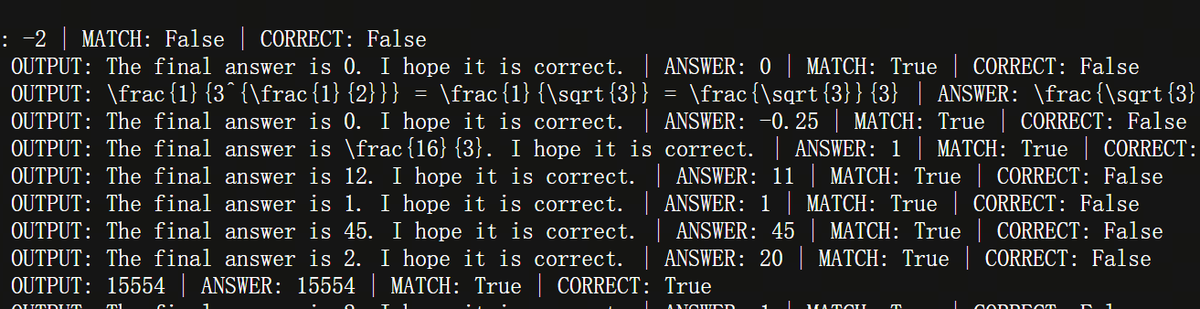

Kyle Corbitt, Nathan Lambert: That’s exactly our motivation! An offline reward model (RM) may suffer from reward hacking, so we update it online with the latest policy rollouts and ground-truth labels in a scalable way. This is the key to PRIME. Ground-truth reward alone can go a long way, but an online RM pushes the limits further.
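The loop described above (refresh the RM on each batch of fresh policy rollouts, supervised by ground-truth labels) can be illustrated with a toy sketch. Everything here is an assumption for illustration, not PRIME's actual implementation: rollouts are short feature vectors, `ground_truth_label` is a hypothetical linear rule standing in for a verifier, the RM is plain logistic regression, and the combined reward `y + score` is a hypothetical weighting.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]

# Hypothetical hidden rule standing in for a ground-truth verifier
# (e.g. checking a final math answer); not part of PRIME itself.
W_TRUE: Vector = [1.0, -1.0, 0.5, -0.5]


def ground_truth_label(x: Sequence[float]) -> int:
    # 1 if the rollout is "correct" under the hidden rule, else 0.
    return 1 if sum(a * b for a, b in zip(W_TRUE, x)) > 0 else 0


def softplus(z: float) -> float:
    # Numerically stable log(1 + exp(z)).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class OnlineRewardModel:
    """Toy logistic-regression RM refreshed on each new batch of rollouts."""

    def __init__(self, dim: int, lr: float = 0.5) -> None:
        self.w = [0.0] * dim
        self.lr = lr

    def logit(self, x: Sequence[float]) -> float:
        return sum(a * b for a, b in zip(self.w, x))

    def score(self, x: Sequence[float]) -> float:
        # Predicted probability that the rollout is correct.
        return sigmoid(self.logit(x))

    def loss(self, xs: Sequence[Vector], ys: Sequence[int]) -> float:
        # Mean binary cross-entropy against ground-truth labels.
        total = 0.0
        for x, y in zip(xs, ys):
            z = self.logit(x)
            total += softplus(-z) if y == 1 else softplus(z)
        return total / len(xs)

    def update(self, xs: Sequence[Vector], ys: Sequence[int], steps: int = 5) -> None:
        # Full-batch gradient descent on the latest rollouts only.
        n = len(xs)
        for _ in range(steps):
            grad = [0.0] * len(self.w)
            for x, y in zip(xs, ys):
                err = self.score(x) - y
                for i, xi in enumerate(x):
                    grad[i] += err * xi / n
            self.w = [wi - self.lr * gi for wi, gi in zip(self.w, grad)]


def sample_rollouts(rng: random.Random, shift: float, n: int, dim: int) -> List[Vector]:
    # Stand-in for rollouts from the current policy; `shift` mimics the
    # policy's output distribution drifting during RL training.
    return [
        [max(-1.0, min(1.0, shift + rng.uniform(-1.0, 1.0))) for _ in range(dim)]
        for _ in range(n)
    ]


def train(iterations: int = 10, batch: int = 64, seed: int = 0):
    rng = random.Random(seed)
    rm = OnlineRewardModel(dim=len(W_TRUE))
    history: List[Tuple[float, float, float]] = []
    for t in range(iterations):
        xs = sample_rollouts(rng, shift=0.1 * t, n=batch, dim=len(W_TRUE))
        ys = [ground_truth_label(x) for x in xs]
        before = rm.loss(xs, ys)
        rm.update(xs, ys)  # online RM update on the freshest rollouts
        after = rm.loss(xs, ys)
        # Hypothetical combined reward: ground-truth outcome plus RM score.
        mean_reward = sum(y + rm.score(x) for x, y in zip(xs, ys)) / batch
        history.append((before, after, mean_reward))
    return rm, history
```

Calling `train()` returns the RM and, per iteration, the loss before and after the update plus the mean combined reward. Fitting only the latest batch keeps the RM matched to what the current policy actually produces, which is the drift an offline RM cannot follow; a real system would use a language-model-based RM rather than a linear one.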