Kelly Marchisio (St. Denis)

@cheeesio

Multilinguality Lead @cohere. Formerly: PhD @jhuclsp, Alexa Fellow @amazon, dev @Google, MPhil @cambridgenlp, EdM @hgse 🔑🔑¬🧀 (@kelvenmar20)

ID: 1134629909551308800

http://kellymarchisio.github.io

01-06-2019 01:17:05

658 Tweets

2.2K Followers

630 Following

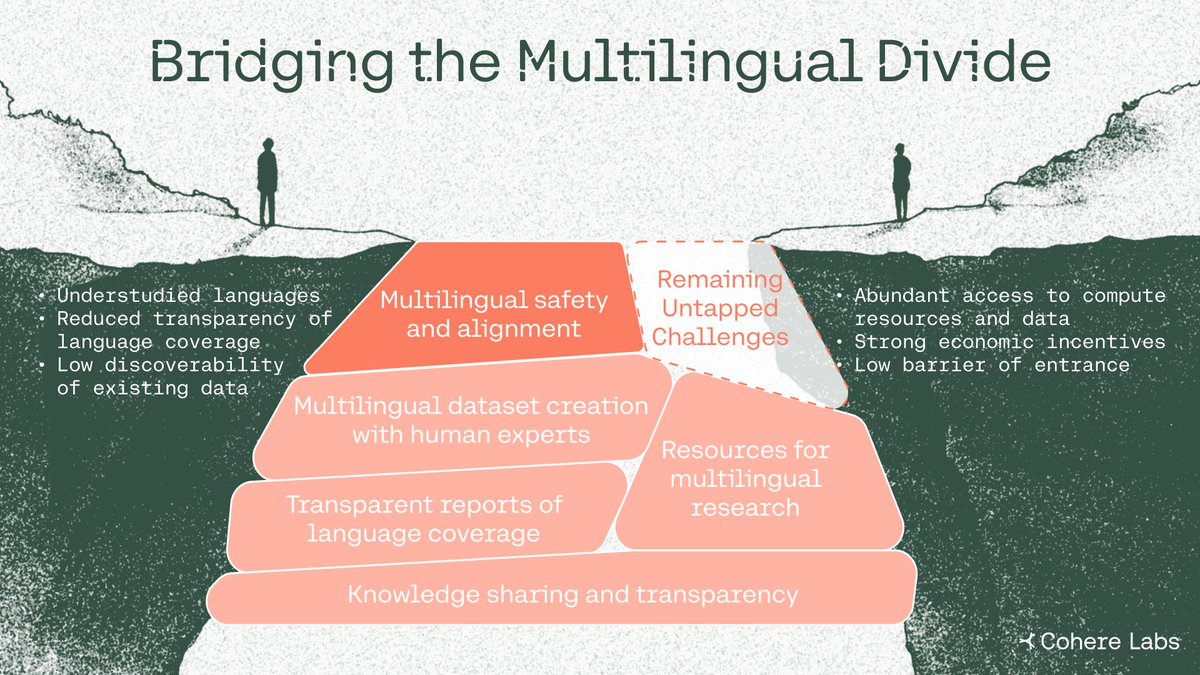

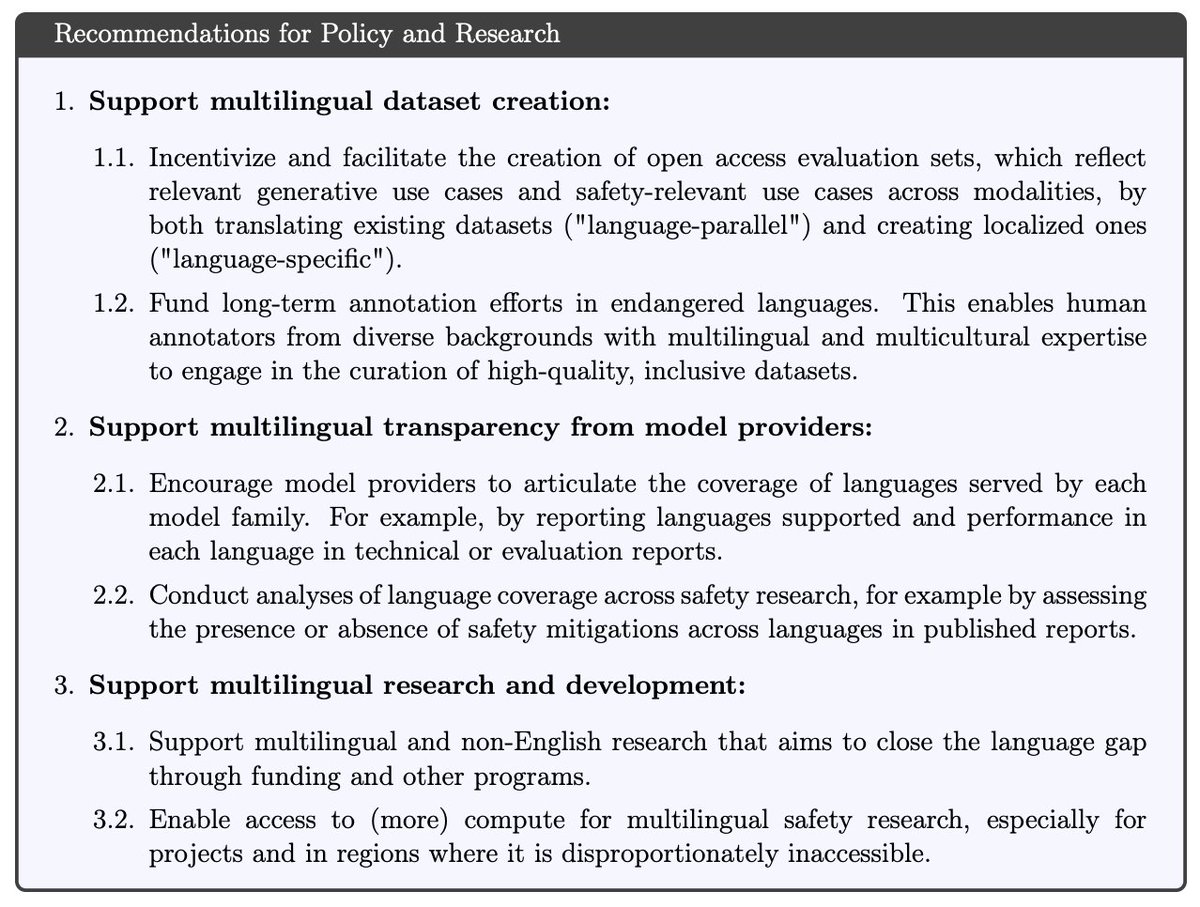

Result of Nathaniel R. Robinson’s internship on the Cohere multilingual team last year! Check it out!

Our ML Efficiency group is looking forward to welcoming Piotr Nawrot next week, on May 28th, for a session on "The Sparse Frontier: Sparse Attention Trade-offs in Transformer LLMs". Learn more: cohere.com/events/Cohere-…

Tomorrow at 6pm CET, I'm giving a talk about our latest work on Sparse Attention at Cohere Labs. I plan to describe the field as it is now, discuss our evaluation results, and share insights about what I believe is the future of Sparse Attention. See you!

Code release from our superstar intern, Piotr Nawrot!
• Write sparse attn patterns in 50 lines, not 5k
• Compatibility w/ models supported by vLLM, support for TP
• 6 SOTA baselines with optimized implementations + 9 eval tasks
• Research-grade extensibility = rapid prototyping

Excited to announce the call for papers for the Multilingual Representation Learning workshop #EMNLP2025 (sigtyp.github.io/ws2025-mrl.html), with Duygu Ataman, Catherine Arnett, Jiayi Wang, Fabian David Schmidt, Tyler Chang, and Hila Gonen, and amazing speakers: Alice Oh, Kelly Marchisio, & Pontus Stenetorp

Wei-Yin Ko was one of the earliest members of our Open Science Community and an early collaborator on our open science research. We’re proud to have been part of Wei-Yin’s journey from community collaborator to colleague, and grateful he took an early bet on working with us 🚀