Chinmay Deshpande

@chinmay_deshp

AI Governance @CenDemTech | Previously @Harvard

ID: 1547008226872393728

13-07-2022 00:01:24

15 Tweets

54 Followers

177 Following

Today’s report on AI governance in CA builds on the urgent conversations around AI governance we began in the Legislature last year. I thank Fei-Fei Li, Jennifer Chayes, and Tino Cuellar for the hard work and keen insight they brought to this report.

"Especially to the extent AI developers continue to stumble in these commitments, it will be incumbent on lawmakers to develop and enforce clear transparency requirements that the companies can’t shirk." -- Kevin Bankston Agree! The time for these requirements is now.

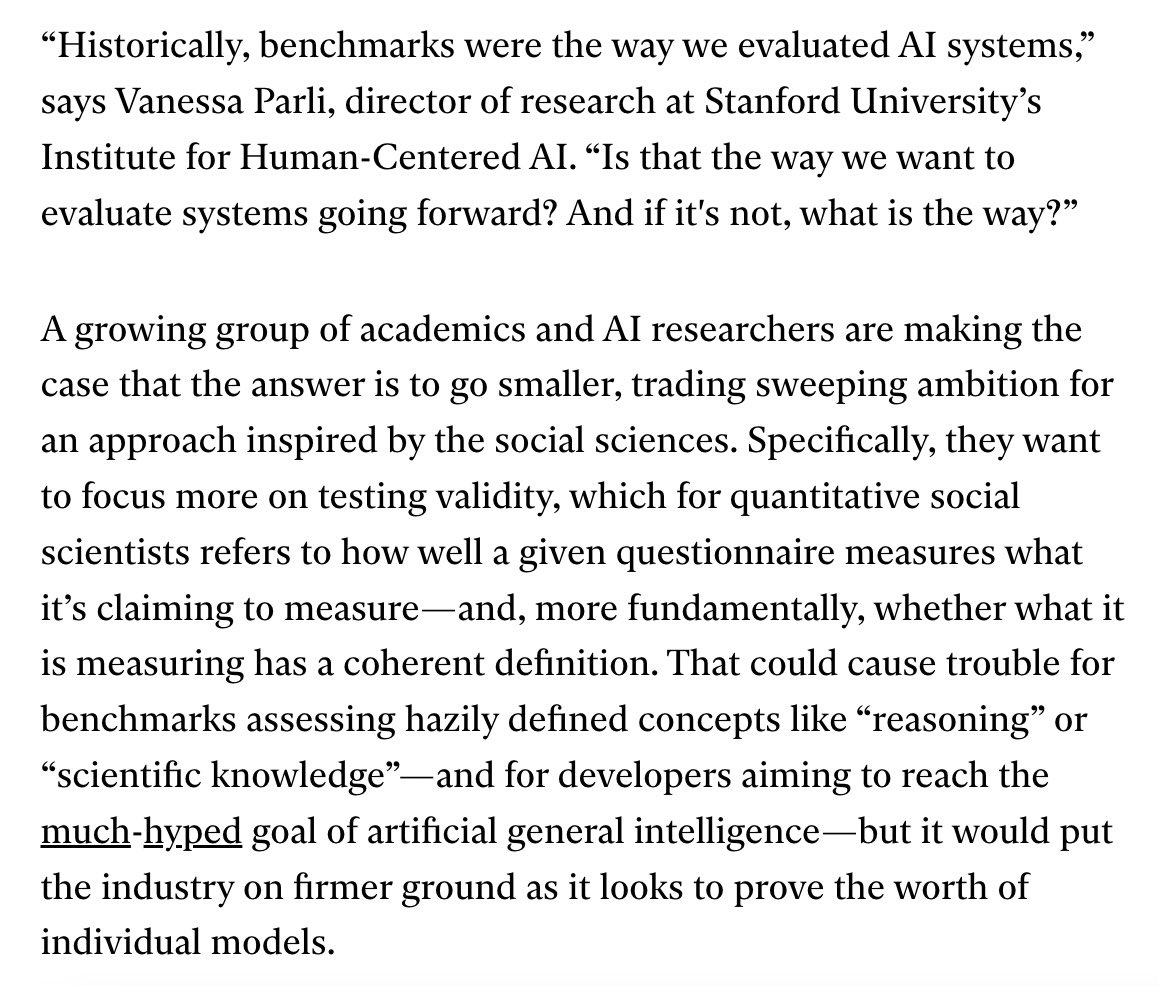

In MIT Technology Review, I wrote about the crisis in AI evaluations, and why a new focus on validity could be the best way forward.

🚨 New paper alert! 🚨 Are human baselines rigorous enough to support claims about "superhuman" performance? Spoiler alert: often not! Patricia Paskov and I will be presenting our spotlight paper at ICML next week on the state of human baselines + how to improve them!