Chongyi Zheng

@chongyiz1

PhD student @ Princeton working on RL.

ID: 1468793850139639809

https://chongyi-zheng.github.io/

09-12-2021 04:05:23

48 Tweets

169 Followers

129 Following

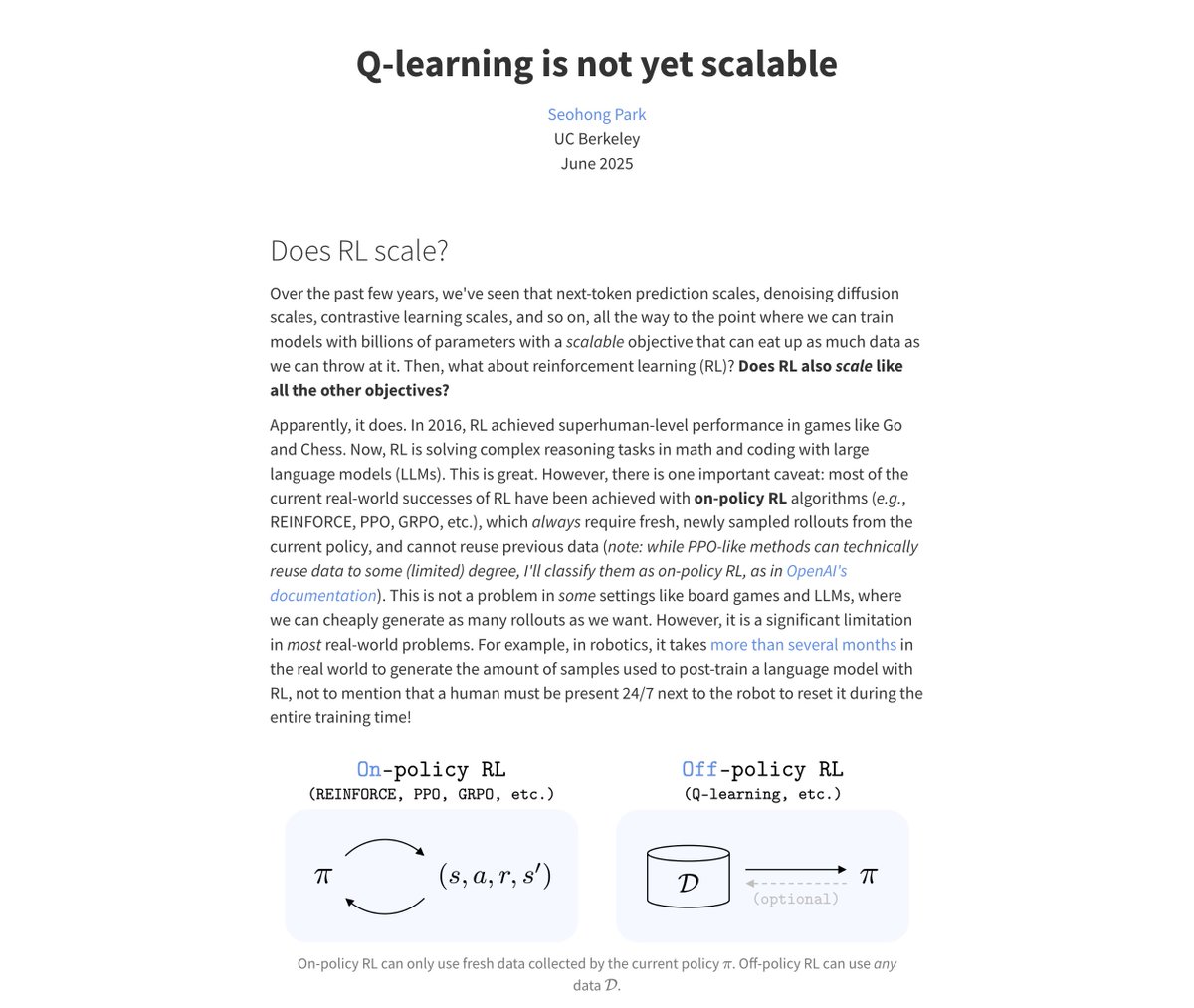

What makes RL hard is the _time_ axis⏳, so let's pre-train RL policies to learn about _time_! Same intuition as successor representations 🧠, but made scalable with modern GenAI models 🚀. Excited to share new work led by Chongyi Zheng, together with Seohong Park and Sergey Levine!