Avi Caciularu

@clu_avi

Research Scientist @GoogleAI | previously ML & NLP PhD student @biunlp, intern at @allen_ai, @Microsoft, @AIatMeta.

ID: 61239647

https://aviclu.github.io

Joined 29-07-2009 16:52:44

255 Tweets

520 Followers

452 Following

Massive News from Chatbot Arena🔥 Google DeepMind's latest Gemini (Exp 1114), tested with 6K+ community votes over the past week, now ranks joint #1 overall with an impressive 40+ score leap, matching 4o-latest and surpassing o1-preview! It also claims #1 on Vision

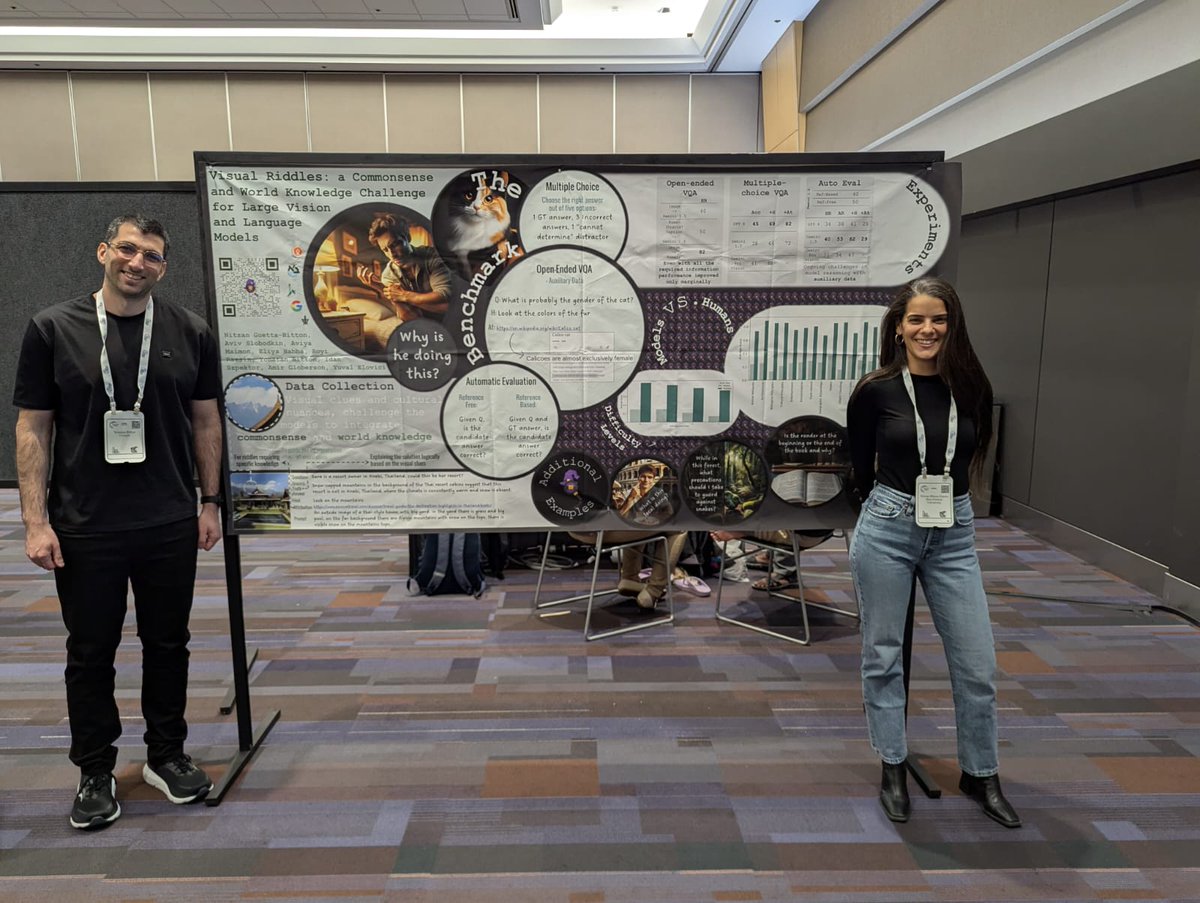

🚨 Happening NOW at #NeurIPS2024 with Nitzan Guetta! 🎭 #VisualRiddles: A Commonsense and World Knowledge Challenge for Vision-Language Models. 📍 East Ballroom C, Creative AI Track 🔍 visual-riddles.github.io

Excited for the release of SciArena with Ai2! LLMs are now an integral part of research workflows, and SciArena helps measure progress on scientific literature tasks. Also check out the preprint for many more results and analyses. Led by: Yilun Zhao, Kaiyan Zhang 📄 paper:

This new benchmark created by Valentina Pyatkin should be the new default, replacing IFEval. Some of the best frontier models score <50%, and it comes with separate training prompts so people don't effectively train on test. Wild gap of about 30 points from o3 to Gemini 2.5 Pro.