Nicolò De Sabbata

@cndesabbata

👨🏻‍💻 ML @Apple | Deep Learning, NLP & Cognitive Science 🧠 | Previously @EPFL_en @Princeton @AXA @amazon @Polimi | 🇪🇺🇮🇹🇨🇭

ID: 1451603374437175296

http://nicolodesabbata.com 22-10-2021 17:36:50

12 Tweets

104 Followers

611 Following

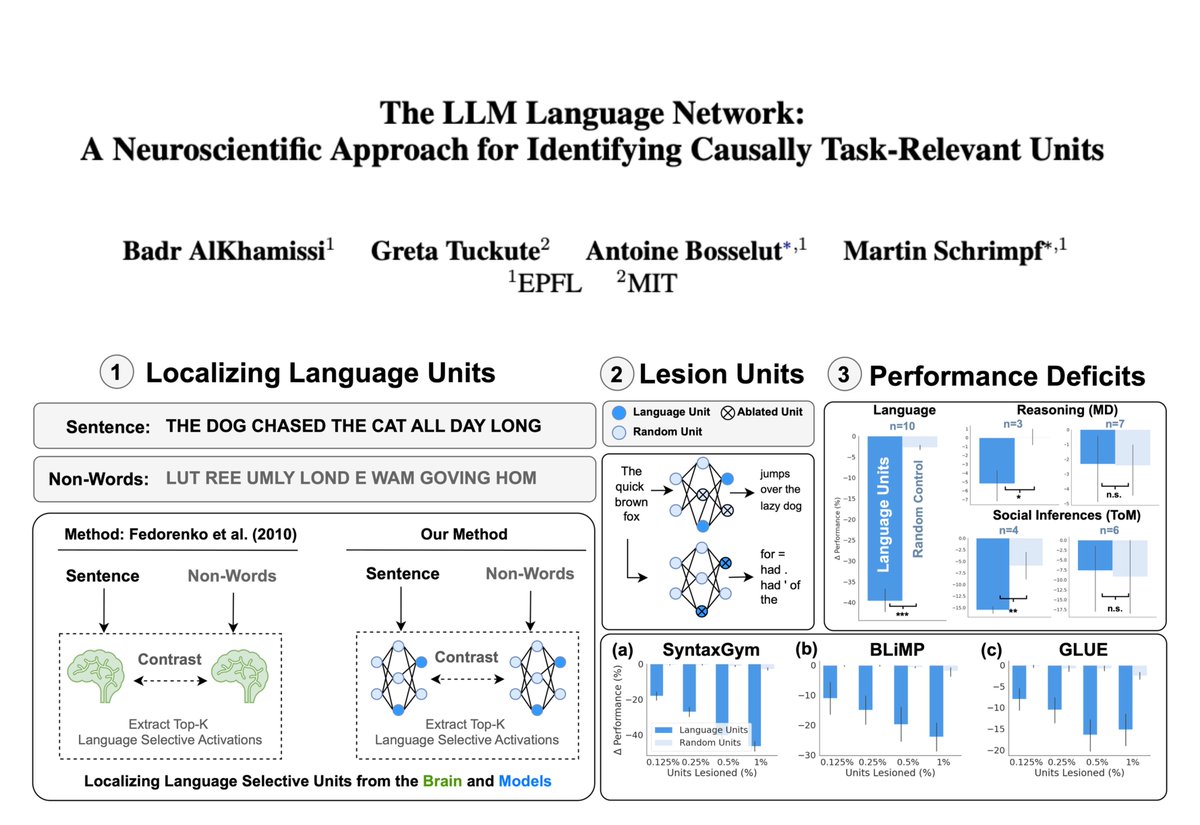

🚨 New Paper! Can neuroscience localizers uncover brain-like functional specializations in LLMs? 🧠🤖 Yes! We analyzed 18 LLMs and found units mirroring the brain's language, theory of mind, and multiple demand networks! w/ Greta Tuckute, Antoine Bosselut, & Martin Schrimpf 🧵👇