Yan Zhang

@cnsdqzyz

Scientist@meshcapade, human foundation models, my own opinions.

ID: 1154344211422093312

https://yz-cnsdqz.github.io/

Joined: 25-07-2019 10:54:41

255 Tweets

1.1K Followers

1.1K Following

It’s Europe’s time to shine in AI. Meshcapade is a great example of AI technology invented in Europe and commercialized in Europe. The amazing Naureen Mahmood explains how we’re creating 3D virtual humans by analyzing the behavior of real humans at scale and how this technology

Missed us at Game Developers Conference? Watch our full talk online!

Part 1: Meshcapade + Unreal Engine
- Motion capture with MoCapade: Our single camera mocap solution
- Bring your motion straight into Unreal Engine
- Seamless retargeting to any character in your 3D scene
- Bonus content:

Part 2: Meshcapade + 𝔸𝕟𝕕𝕣𝕖𝕪 𝕍. 𝔸𝕟𝕥𝕠𝕟𝕠𝕧
- Create crowds of realistic humans with varying body shapes in any scene, on any terrain, with any motion
- Generate 100s of character variations inside Houdini with our #SMPL model

#GameDev #UnrealEngine #Houdini #Mocap #DigitalHumans

Go behind the scenes with us at Game Developers Conference 2025! 🎮 From setup to showtime, here’s a look at how we brought our motion capture and digital human tech to life. We loved sharing our work, meeting so many brilliant minds, and seeing the excitement

Final video in our #CVPR2025 series: PICO 🤝📦 By Alpár Cseke, Shashank Tripathi, Sai Kumar Dwivedi, Arjun S. Lakshmipathy, Agniv Chatterjee, Michael J. Black, and Dimitrios Tzionas, in collaboration with the Max Planck Institute for Intelligent Systems, Carnegie

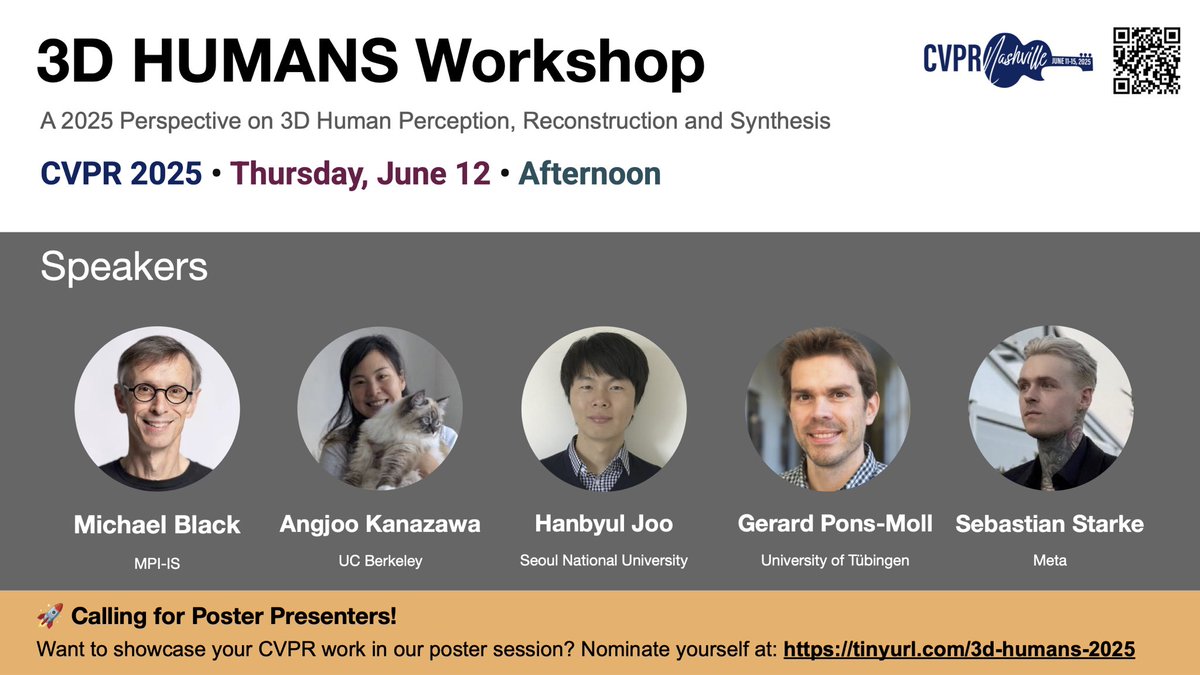

If you're at #CVPR2025, come by the Workshop on 3D Human Understanding tomorrow and meet all our amazing speakers in person!

🕑 June 12, 1:50 PM
📍 Room 110b
👤 Angjoo Kanazawa, Michael Black, Gerard Pons-Moll, Sebastian Starke, Hanbyul (Han) Joo
🌐 tinyurl.com/3d-humans-2025

Physical intelligence for humanist robots. At Meshcapade we've built the foundational technology for the capture, generation, and understanding of human motion. This blog post explains how this enables robot learning at scale. medium.com/@black_51980/p… perceiving-systems.blog/en/news/toward…