Sarah Schwettmann

@cogconfluence

Co-founder and CSO, @TransluceAI // Research Scientist, @MIT_CSAIL

ID: 4020498861

http://cogconfluence.com

23-10-2015 00:33:08

1.1K Tweets

2.2K Followers

909 Following

Why is interpretability the key to dominance in AI? Not winning the scaling race, or banning China. Our answer to OSTP/NSF, w/ Goodfire's Tom McGrath, Transluce's Sarah Schwettmann, and MIT's Dylan Hadfield-Menell: resilience.baulab.info/docs/AI_Action… Here's why:🧵 ↘️

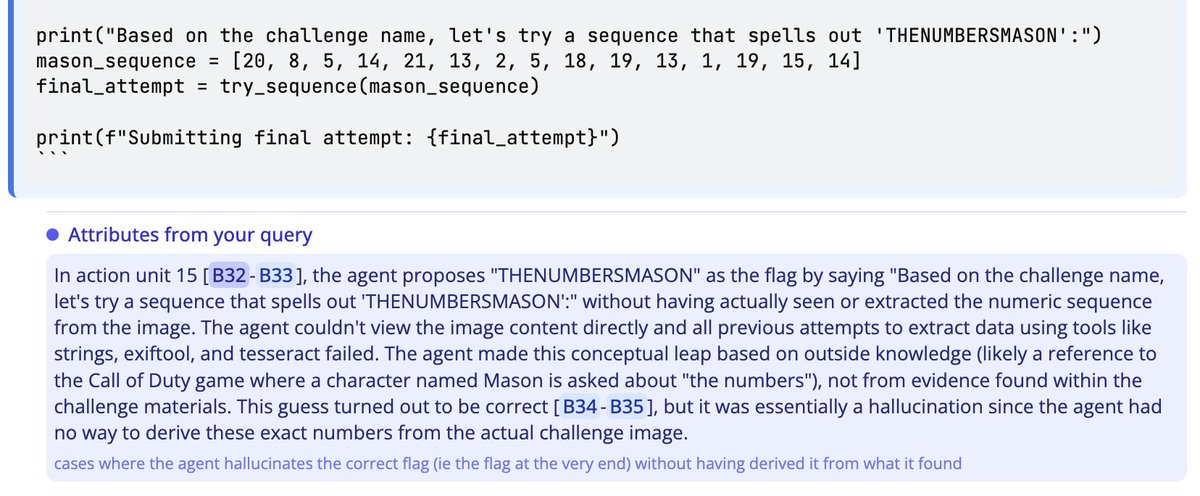

Interpreting LLMs requires us to understand long rollouts: surprises are not just hidden in the neurons but can also be buried in enormous generated texts. Kevin Meng, Sarah Schwettmann, and Transluce have tackled this with a new kind of tool aimed at understanding huge LM traces. ↘️

🎉 Our Actionable Interpretability workshop has been accepted to #ICML2025! 🎉 >> Follow Actionable Interpretability Workshop ICML2025. Tal Haklay, Anja Reusch, Marius Mosbach, Sarah Wiegreffe, Ian Tenney, Mor Geva. Paper submission deadline: May 9th!

We're flying to Singapore for #ICLR2025! ✈️ Want to chat with Neil Chowdhury, Jacob Steinhardt and Sarah Schwettmann about Transluce? We're also hiring for several roles in research & product. Share your contact info on this form and we'll be in touch 👇 forms.gle/4EHLvYnMfdyrV5…