Charlie S. Burlingham

@csburlingham

Vision Scientist, Meta Reality Labs

ID: 1385671341852999686

https://csb0.github.io/

23-04-2021 19:06:47

130 Tweets

184 Followers

250 Following

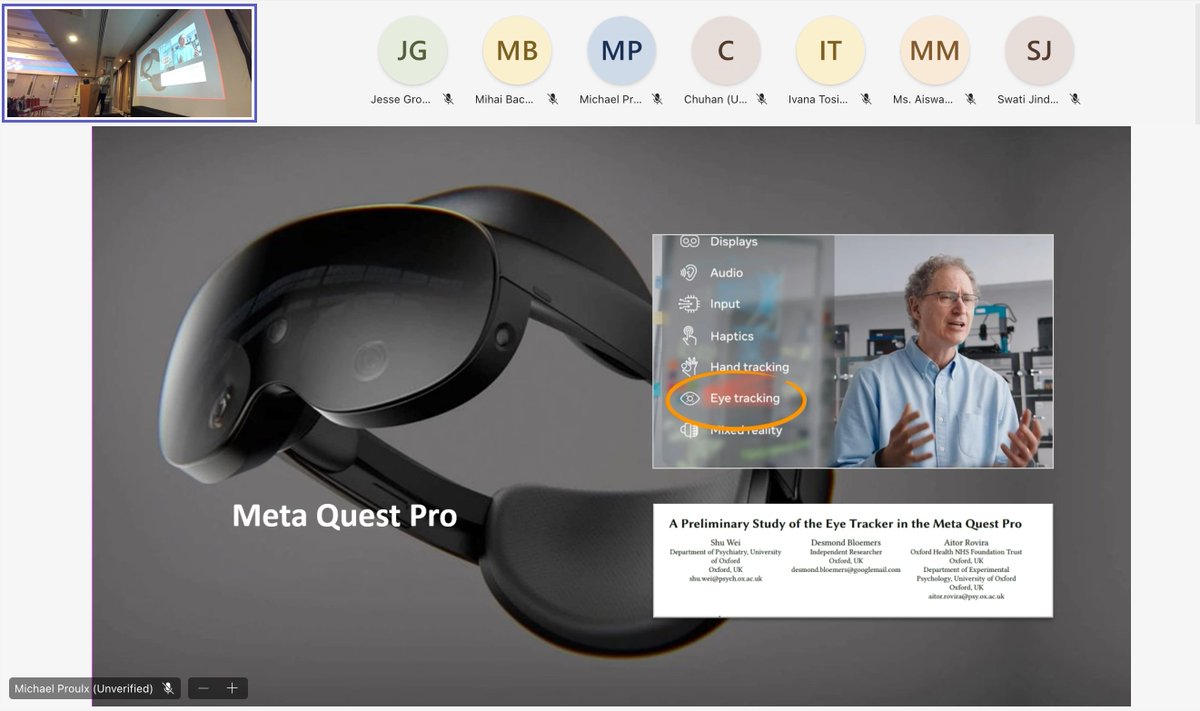

PETMEI Workshop at ETRA 2024 kicked off with a keynote by Michael J. Proulx from Reality Labs at Meta: an insightful talk on the challenges of pervasive eye tracking for interaction in #ExtendedReality. petmei.org/2024/ #ETRA2024

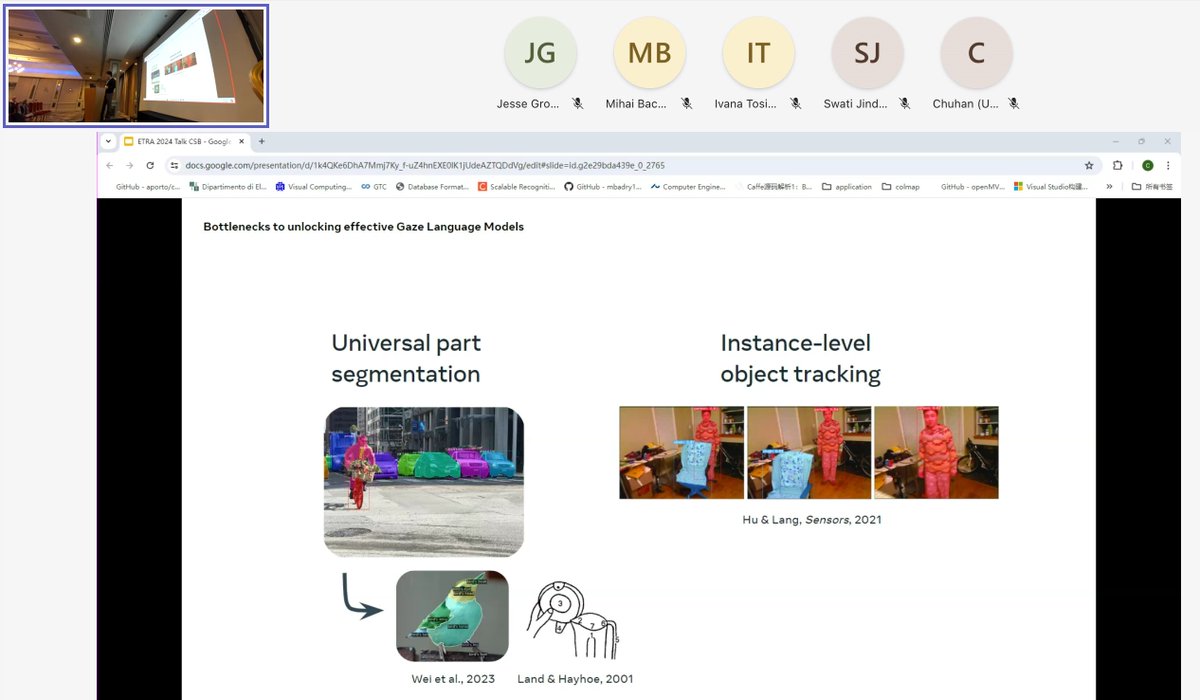

Charlie S. Burlingham from Reality Labs at Meta is now presenting their paper titled "Real-World Scanpaths Exhibit Long-Term Temporal Dependencies: Considerations for Contextual AI for AR Applications" at the PETMEI Workshop at #ETRA2024. Paper: doi.org/10.1145/364990…

Paper Session 1: Visual Attention at ETRA 2024 has just started. Oleg Komogortsev from Reality Labs at Meta and Texas State University is now presenting their paper "Per-Subject Oculomotor Plant Mathematical Models and the Reliability of Their Parameters" at #ETRA2024. Paper: doi.org/10.1145/3654701

New article on unifying perceived magnitude and discriminability is out: pnas.org/doi/10.1073/pn… Eero Simoncelli Lyndon Duong

All-day AR would benefit from AI models that understand a person's context, and eye tracking could be key for task recognition. Yet past work (including our own: research.facebook.com/publications/c…) hasn't found much added value from gaze beyond what computer vision & egocentric video already provide. 2/