Courtney Paquette

@cypaquette

Research Scientist, Assistant Professor

ID: 1466616048946487300

03-12-2021 03:51:44

3 Tweets

74 Followers

7 Following

We're excited to announce the ICML 2025 call for workshops! The CFP and submission advice can be found at: icml.cc/Conferences/20…. The deadline is Feb 10. Courtney Paquette, Natalie Schluter and I look forward to your submissions!

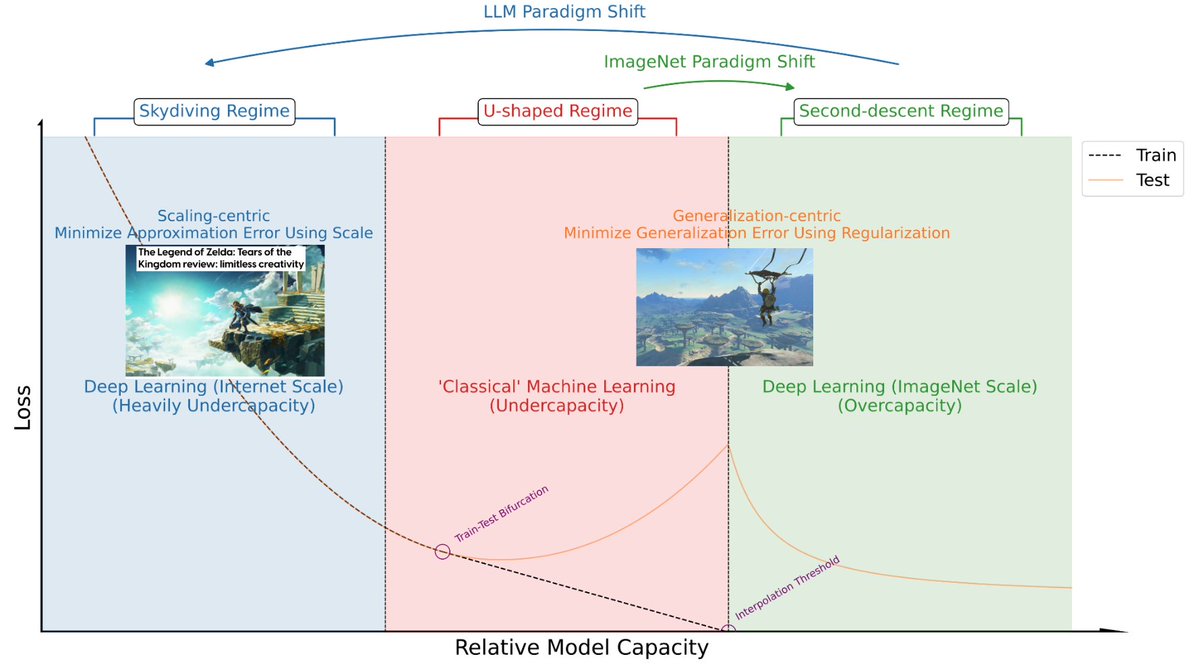

On data: Sharma & Kaplan 2020 proposes a theoretical model in which the data distribution and task determine the dimensionality of the data manifold, which in turn determines the scaling exponent. This could explain why different modalities have different exponents. h/t chopwatercarry x.com/chopwatercarry…
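As a rough illustration of the dimension argument: on our reading of Sharma & Kaplan's headline approximation, model-size scaling L(N) ∝ N^(-α) has exponent α ≈ 4/d, where d is the intrinsic dimension of the data manifold. The sketch below only evaluates that relation. The function names and the dimensions 8, 16 and 32 are hypothetical, chosen for illustration rather than taken from any paper.

```python
def predicted_exponent(intrinsic_dim: float) -> float:
    """Approximate model-size scaling exponent alpha ~ 4 / d.

    Assumption: this encodes our reading of Sharma & Kaplan (2020),
    where d is the intrinsic dimension of the data manifold.
    """
    if intrinsic_dim <= 0:
        raise ValueError("intrinsic dimension must be positive")
    return 4.0 / intrinsic_dim


def predicted_loss_ratio(n_small: float, n_large: float, intrinsic_dim: float) -> float:
    """Ratio L(n_large) / L(n_small) when L(N) is proportional to N**(-alpha)."""
    alpha = predicted_exponent(intrinsic_dim)
    return (n_large / n_small) ** (-alpha)


# Hypothetical intrinsic dimensions, for illustration only.
for d in (8, 16, 32):
    alpha = predicted_exponent(d)
    ratio = predicted_loss_ratio(1e6, 1e7, d)
    print(f"d={d}: alpha={alpha:.3f}, loss ratio for 10x params={ratio:.3f}")
```

Under this model a higher intrinsic dimension gives a smaller exponent, so a tenfold increase in parameters buys less loss reduction, which is one way different modalities could end up with different exponents.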

chopwatercarry Henighan et al. 2020 shows empirically that different modalities (language, image, video, math) have different exponents, as do different image resolutions (8x8 vs. 16x16, etc.). (They also find that the exponent for optimal model size vs. compute is universal across modalities.)

chopwatercarry Bansal et al. 2022 compares the data scaling exponents on translation tasks and finds the same exponent across different architectures, data filtering techniques, and synthetic i.i.d. noise. Adding non-i.i.d. noise via data augmentation (back-translation) does change the exponent.
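Comparisons like these rest on estimating an exponent from (size, loss) pairs. A minimal sketch, assuming a pure power law L = a·n^(-α) with no irreducible-loss term (many published fits do include one), is an ordinary least-squares line in log-log space. The helper name fit_power_law and the two settings are hypothetical, and the data are generated synthetically rather than measured.

```python
import math
from typing import Sequence, Tuple


def fit_power_law(sizes: Sequence[float], losses: Sequence[float]) -> Tuple[float, float]:
    """Fit L = a * n**(-alpha) by least squares on log L versus log n.

    Returns (a, alpha). Assumes a pure power law with no irreducible-loss offset.
    """
    if len(sizes) != len(losses) or len(sizes) < 2:
        raise ValueError("need at least two (size, loss) pairs of equal length")
    xs = [math.log(n) for n in sizes]
    ys = [math.log(loss) for loss in losses]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    # In log space: log L = log a - alpha * log n, so alpha is the negated slope.
    return math.exp(intercept), -slope


# Hypothetical synthetic data: two settings with different true exponents.
sizes = [1e3, 1e4, 1e5, 1e6]
setting_a = [2.0 * n ** -0.3 for n in sizes]
setting_b = [2.0 * n ** -0.2 for n in sizes]

for name, losses in (("setting_a", setting_a), ("setting_b", setting_b)):
    a, alpha = fit_power_law(sizes, losses)
    print(f"{name}: a={a:.3f}, alpha={alpha:.3f}")
```

Fitting each setting separately and comparing the recovered α values is the kind of check behind "same exponent" versus "changed exponent"; with noisy real measurements one would also want uncertainty estimates on α.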

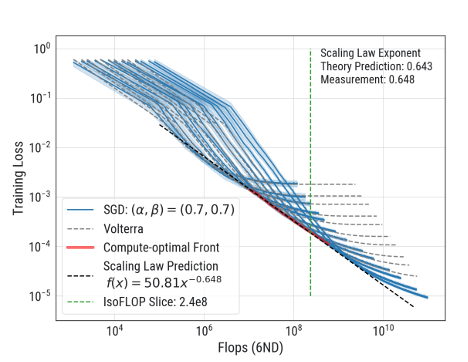

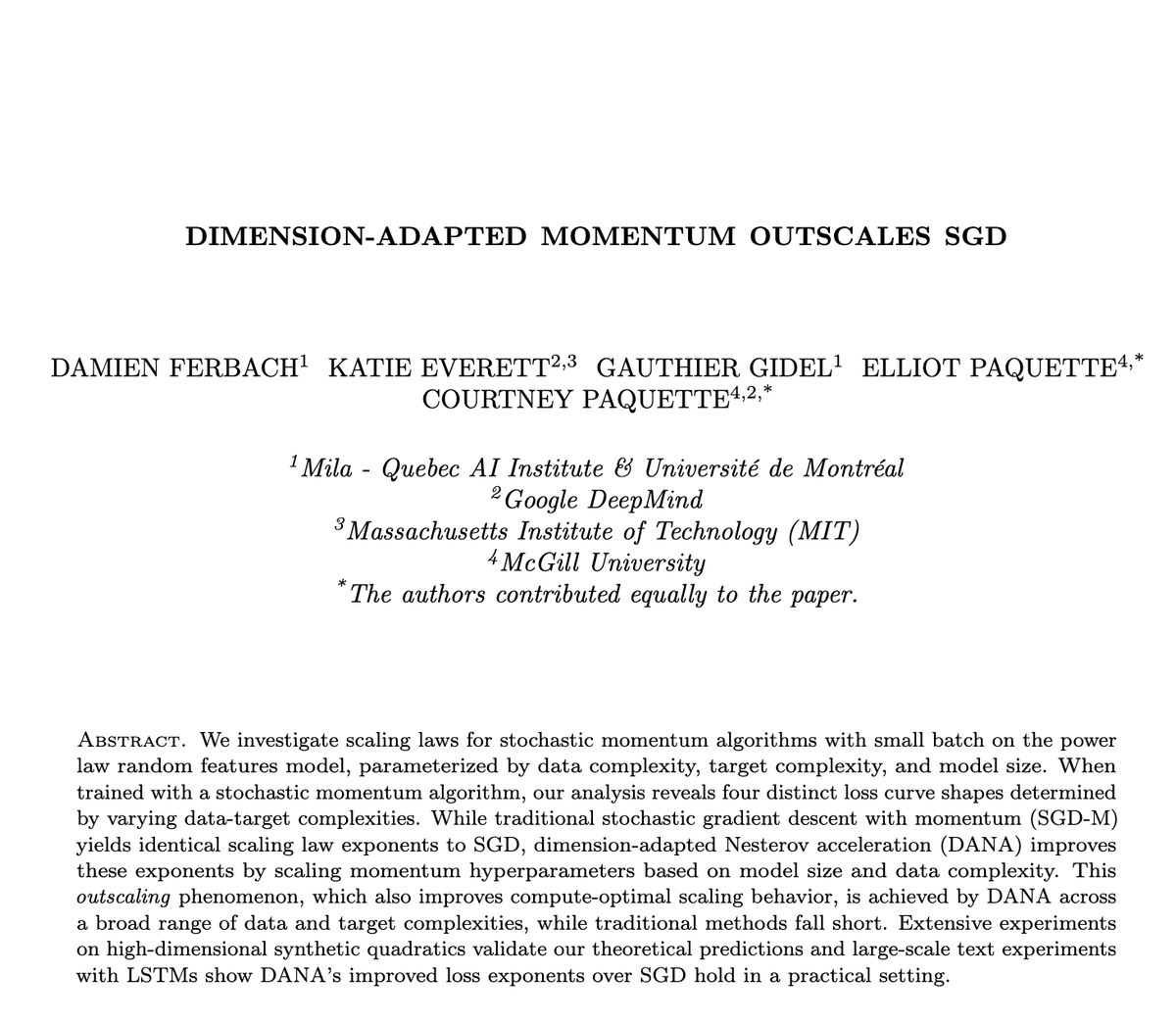

Title: Dimension-adapted Momentum Outscales SGD
Link: arxiv.org/pdf/2505.16098
Work done with amazing collaborators Katie Everett, Gauthier Gidel, Elliot Paquette, and Courtney Paquette.
Related 🧵: x.com/_katieeverett/… x.com/_katieeverett/…

While scaling laws typically predict the final loss, we show in our ICML oral paper that good scaling rules enable accurate predictions of entire loss curves of larger models from smaller ones! w/Lechao Xiao, Andrew Gordon Wilson, J. Pennington, A. Agarwala: arxiv.org/abs/2507.02119 1/10