Daniel Han

@danielhanchen

Building @UnslothAI. Finetune & train LLMs faster. LLM bug hunter. OSS package github.com/unslothai/unsl…. YC S24. Prev ML at NVIDIA. Hyperlearn used by NASA.

ID: 717359704226172928

https://unsloth.ai/ 05-04-2016 14:34:16

2.2K Tweets

23.23K Followers

1.1K Following

Get 2x faster reward model serving and sequence classification inference through Unsloth AI! Nice benchmarks, Kyle!

This is just me unapologetically nerd crushing on the Unsloth AI duo, legendary developers with a shared goal of democratizing AI compute:

New tutorial: how to build a synthetic dataset with recent information and use it to fine-tune with Unsloth AI. Check out the Colab: colab.research.google.com/drive/1JK04IBE… Steps in the 🧵

Excited to see you all tomorrow at our Google Gemma & Unsloth developer meetup! 🦥 We'll have @Grmcameron from Artificial Analysis, 👩‍💻 Paige Bailey & more giving amazing talks! The location has been updated, so please check it, and if you need help, DM me! lu.ma/gemma-unsloth

Huge thanks to everyone who attended our Google & Unsloth AI Gemma developer meetup yesterday! 🦥 It was amazing meeting you all & thank you to Taka Shinagawa for hosting the event with us. Thank you to the Google speakers: 👩‍💻 Paige Bailey, Doug Reid, Mayank Chaturvedi, @GrmCameron and of

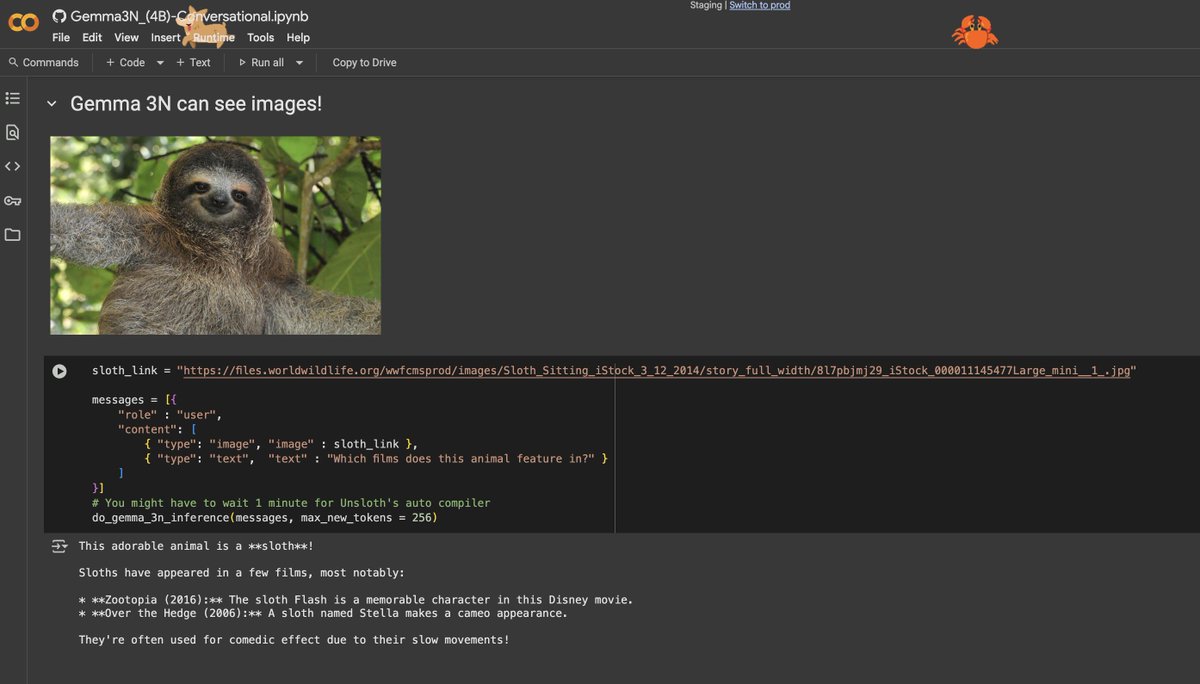

Gemma 3N quirks!
1. Vision NaNs on float16
2. Conv2D weights are large, so FP16 overflows to infinity
3. Large activations fixed vs Gemma 3
4. Training losses of 6-7: normal for multimodal?
5. Large numbers in msfa_ffn_pw_proj
6. NaNs fixed in Unsloth AI
Details: docs.unsloth.ai/basics/gemma-3…
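Items 1 and 2 in the Gemma 3N quirks are a numeric-range problem: IEEE 754 float16 cannot represent anything above 65504, so a larger value becomes infinity, and a single infinity is enough to turn later arithmetic into NaN. The sketch below illustrates that chain using only Python's standard library. It is not Unsloth's code or its fix. `to_fp16` and `to_bf16` are hypothetical helper names, the weight and activation values are made up rather than taken from Gemma 3n, the product is computed exactly and then rounded once (real kernels round at each step), and `to_bf16` truncates instead of rounding to nearest.

```python
import math
import struct

FP16_MAX = 65504.0  # largest finite IEEE 754 half-precision (binary16) value


def to_fp16(x: float) -> float:
    """Round x to float16 (hypothetical helper for illustration).

    struct's 'e' format rounds to the nearest half-precision value but raises
    OverflowError when the result would exceed the float16 range; we map that
    case to +/-inf, which is what an overflowing float16 cast yields.
    """
    try:
        return struct.unpack("<e", struct.pack("<e", x))[0]
    except OverflowError:
        return math.copysign(math.inf, x)


def to_bf16(x: float) -> float:
    """Approximate bfloat16 by truncating a float32 to its top 16 bits.

    Assumption: truncation instead of round-to-nearest, for simplicity.
    bfloat16 keeps float32's 8 exponent bits, so its range is far wider.
    """
    bits = struct.unpack("<I", struct.pack("<f", x))[0]
    return struct.unpack("<f", struct.pack("<I", bits & 0xFFFF0000))[0]


# Made-up example: a large weight times a large activation.
weight, activation = 300.0, 250.0
product = weight * activation  # 75000.0, above FP16_MAX

x16 = to_fp16(product)
print(x16)               # inf: float16 overflowed
print(to_bf16(product))  # 74752.0: coarser, but still finite in bfloat16

# Once an inf exists, ordinary follow-up operations produce NaN:
print(x16 - x16)  # nan, like inf - inf when subtracting a mean
print(x16 * 0.0)  # nan, like inf * 0 at a masked position
```

This is why the same weights can run cleanly in bfloat16 yet produce NaNs in float16: bfloat16 gives up mantissa precision to keep float32's exponent range.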

🦥 Fine-tuning with Unsloth AI now supports Gemma 3n! ✨ Friendly reminder: the Gemma 3n models can understand not just text and code, but also images, audio, video, and a whole lot more.