Daniel Murfet

@danielmurfet

Mathematician at the University of Melbourne. Working on Singular Learning Theory and AI alignment.

ID: 617213120

http://www.therisingsea.org 24-06-2012 15:26:49

3.3K Tweets

851 Followers

518 Following

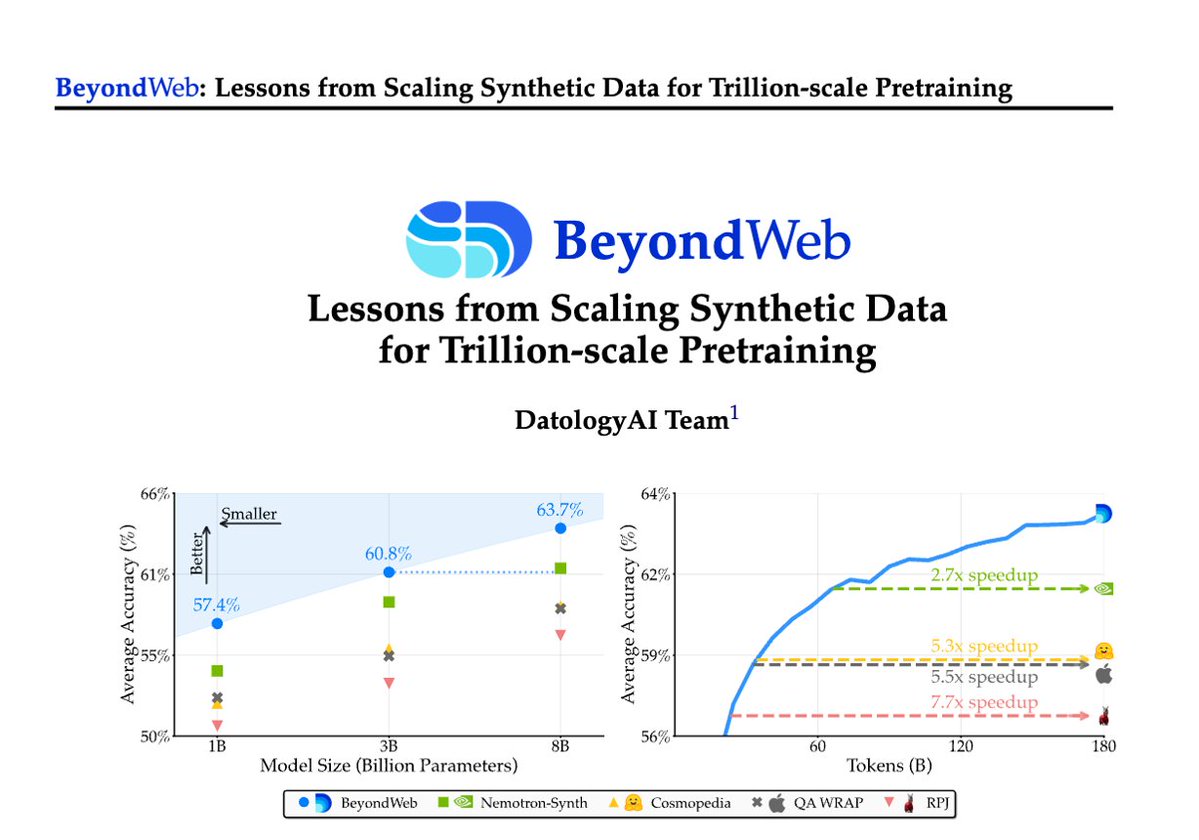

1/ Pretraining is hitting a data wall; scaling raw web data alone leads to diminishing returns. Today DatologyAI shares BeyondWeb, our synthetic data approach & all the learnings from scaling it to trillions of tokens🧑🏼🍳
- 3B LLMs beat 8B models🚀
- Pareto frontier for performance

Grateful to Simons Foundation for their support of the Physics of Learning, and glad to be a part of this collaboration! Excited to see many breakthroughs in the coming years.

yearn to contemplate the platonic forms? captivated by the geometry of balls rolling down valleys something something rainbow serpent something something cell biology? apply to work with Daniel Murfet and Jesse Hoogland in the Winter MATS cohort by Oct 2.