Dara Bahri

@dara_bahri

Research Scientist at Google DeepMind.

ID: 1250475209959698432

http://www.dara.run 15-04-2020 17:25:03

83 Tweets

806 Followers

56 Following

Want to watermark the outputs of an LLM but only have 3p API access to it? We propose a "distortion-free" LLM watermarking scheme that only requires a way to sample responses for the prompt. Joint work with John Wieting and others at Google DeepMind (arxiv.org/abs/2410.02099).

Really fun work with Richard Song! TL;DR: we show that sequence modeling of numeric strings can be a powerful paradigm for tasks like regression and density estimation.

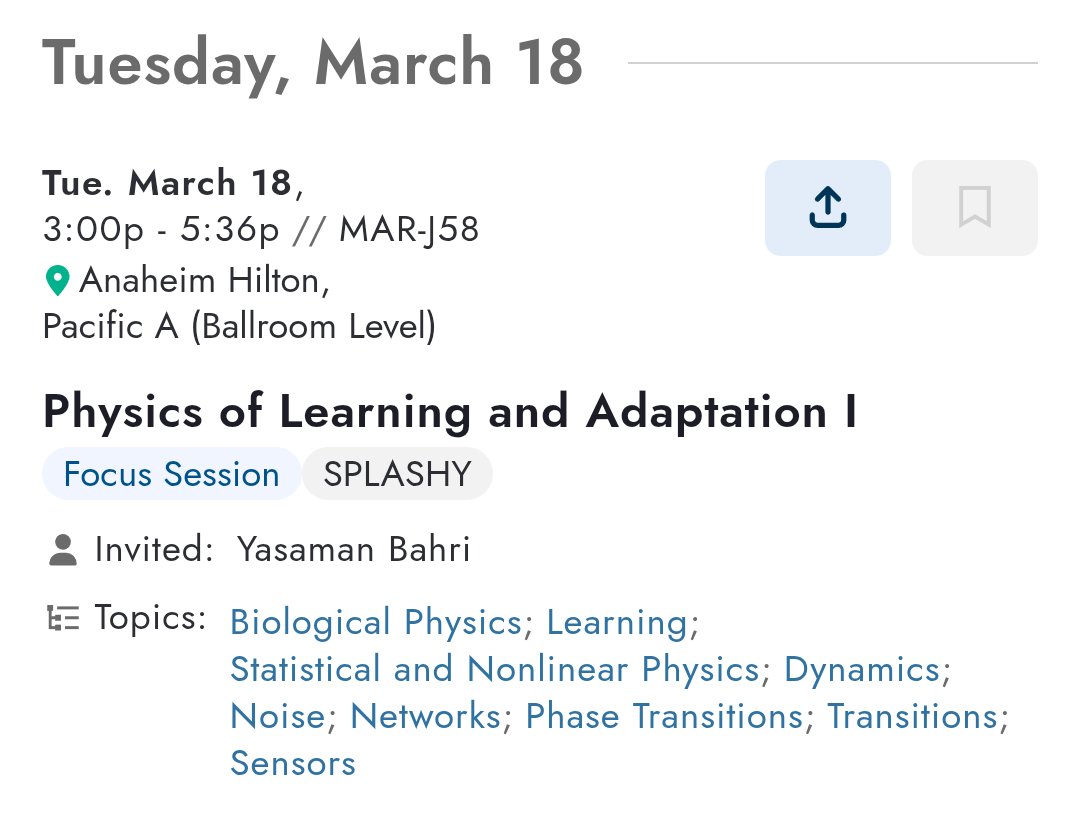

Excited to be at the American Physical Society (APS) March Meeting this year! I'll be giving a talk in the Tues afternoon session MAR-J58, Physics of Learning & Adaptation I.