Dimitris Bertsimas

@dbertsim

MIT professor, analytics, optimizer, Machine Learner, entrepreneur, philatelist

ID: 896010487346941952

http://www.mit.edu/~dbertsim/ 11-08-2017 14:08:42

53 Tweets

2.2K Followers

92 Following

The Holistic AI in Medicine (HAIM) framework from Dimitris Bertsimas et al. at the MIT Jameel Clinic for AI & Health is a pipeline that receives multimodal patient data and uses generalizable pre-processing + #machinelearning modelling stages adaptable to multiple health-related tasks. nature.com/articles/s4174…

If you are into #MachineLearning and #Statistics, check this out. I would also highly recommend the book Machine Learning Under a Modern Optimization Lens by Dimitris Bertsimas and Dunn. Here are two must-watch (imo) teaser YouTube videos: youtu.be/7w9aRrYgGEs youtu.be/jJgdJaCo568

pipelines that can consistently be applied to train multimodal AI/ML systems & outperform their single-modality counterparts has remained challenging. #JameelClinic faculty lead Dimitris Bertsimas, executive director Ignacio Fuentes, postdoc Luis Ruben Soenksen, Yu Ma, Cynthia Zeng,... (2/4)

📢 New preprint alert! arxiv.org/abs/2303.07695 We use sampling schemes and clustering to improve the scalability of deterministic Benders decomposition on data-driven network design problems, while maintaining optimality. w/ Dimitris Bertsimas, Jean Pauphilet, and Periklis Petridis

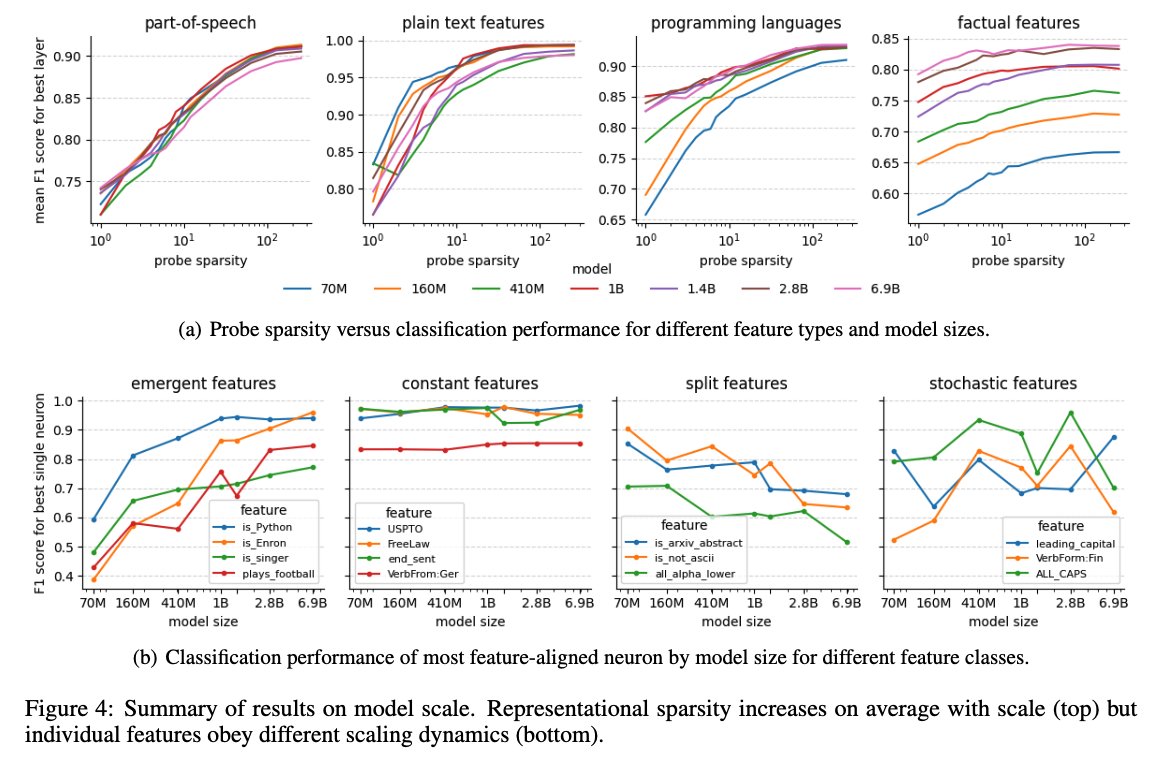

Results in toy models from Anthropic and Chris Olah suggest a potential mechanistic fingerprint of superposition: large MLP weight norms and negative biases. We find a striking drop in early layers in the Pythia models from EleutherAI and Stella Biderman.

This paper would not have been possible without my coauthors Neel Nanda, Matthew Pauly, Katherine Harvey, Dmitrii Troitskii, and Dimitris Bertsimas or all the foundational and inspirational work from Chris Olah, Yonatan Belinkov, and many others! Read the full paper: arxiv.org/abs/2305.01610

Interpretable machine learning methodologies are powerful tools to diagnose and remedy system-related bias in care, such as disparities in access to postinjury rehabilitation care. ja.ma/3P8Sdjc Haytham Kaafarani Dimitris Bertsimas Anthony Gebran @LMaurerMD