Daan de Geus

@dcdegeus

Currently visiting @RWTHVisionLab | Postdoc at @TUEindhoven | Computer vision

ID: 747421825915830272

https://ddegeus.github.io/

27-06-2016 13:30:24

58 Tweets

171 Followers

386 Following

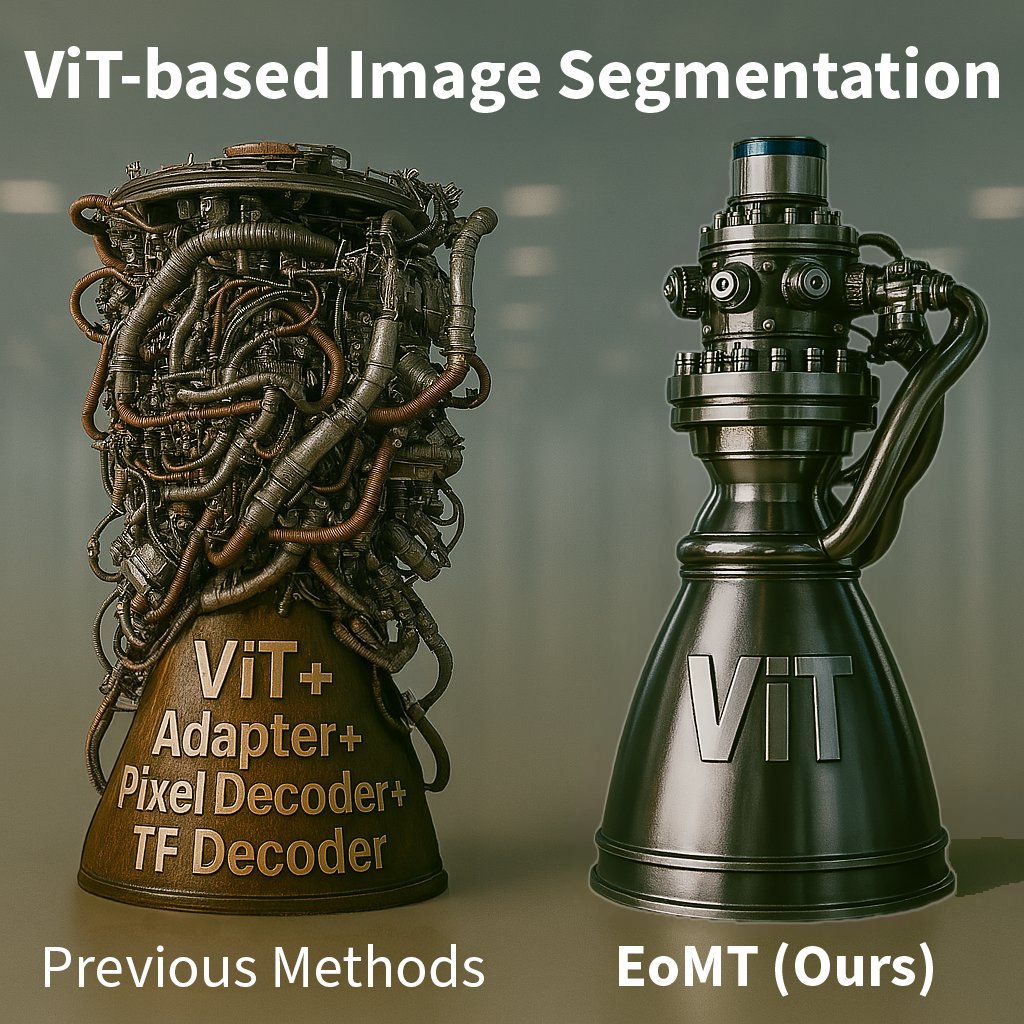

Happy news! Last week, I successfully defended my PhD thesis (cum laude)😀 Many thanks to my supervisors Gijs Dubbelman and Peter de With, and committee members Bastian Leibe, Cees Snoek, Julian Kooij, and Henk Corporaal! Next: a research visit at RWTH Computer Vision Group.

Honored to be on this list 😊 Thanks, European Conference on Computer Vision #ECCV2026 organizers and ACs! And congrats to the other outstanding reviewers!

🚀 Check out our recent work #Interactive4D, which achieves interactive #LiDAR segmentation of multiple objects across multiple scans simultaneously. Work with Ilya Fradlin, Kadir Yılmaz, Theodora Kontogianni, and Bastian Leibe. 🌐 Project: ilya-fradlin.github.io/Interactive4D/ 📜 Paper: arxiv.org/pdf/2410.08206 👇🧵