David Debot

@debot_david

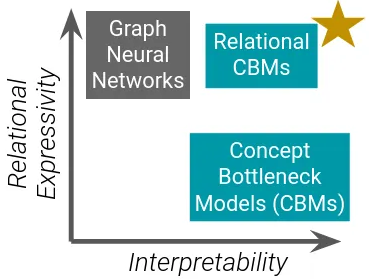

PhD student @KU_Leuven in neurosymbolic AI and concept-based learning

daviddebot.github.io

ID: 3065660391

01-03-2015 15:55:14

22 Tweets

39 Followers

121 Following

Just under 10 days left to submit your latest endeavours in ⚡#tractable⚡ probabilistic models❗ Join us at TPM auai.org #UAI2025 and show how to build #neurosymbolic / #probabilistic AI that is both fast and trustworthy!