DeepSPIN

@deep_spin

Deep structured prediction in NLP. ERC project coordinated by @andre_t_martins. Instituto de Telecomunicações.

ID: 1026171704744325120

https://deep-spin.github.io/

05-08-2018 18:22:54

23 Tweets

349 Followers

73 Following

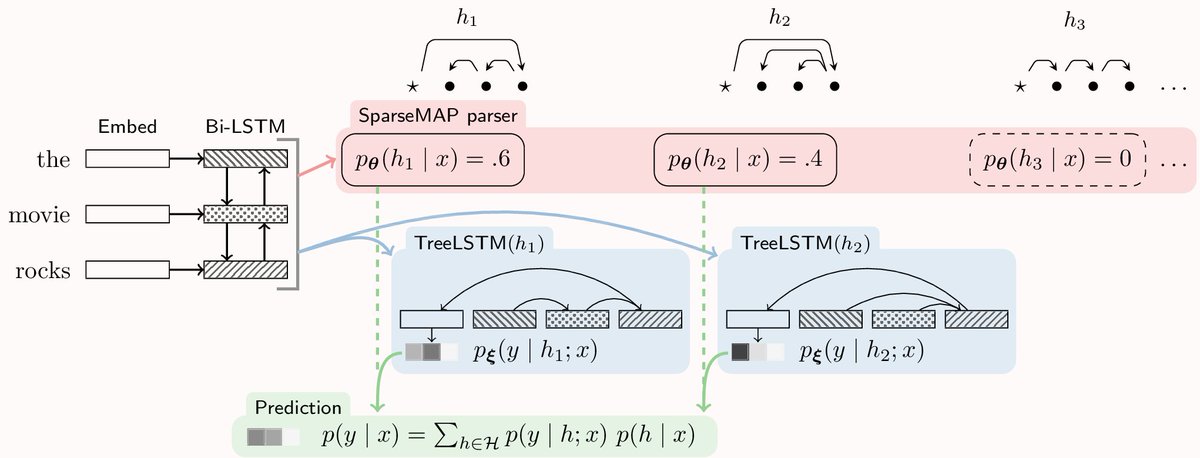

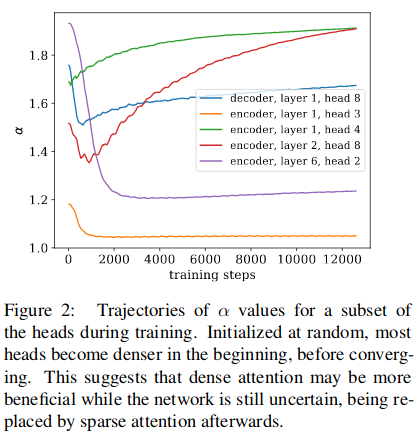

Towards Dynamic Computation Graphs via Sparse Latent Structure: #emnlp2018 + André, Claire Cardie

- marginalize over structured latent vars w/ SparseMAP
- CG a function of discrete structure
- e.g. latent dependency TreeLSTM

pdf: arxiv.org/abs/1809.00653
code: github.com/vene/sparsemap…