Depen Morwani

@depen_morwani

PhD student at Harvard ML Foundations, Research Associate at Google AI, MS from IIT Madras

ID: 2560976503

11-06-2014 09:10:39

79 Tweets

211 Followers

136 Following

We're thrilled to introduce the 2024 cohort of #KempnerInstitute Graduate Fellows! This year’s recipients include seven incoming and eight continuing graduate students enrolled across six Harvard University Ph.D. programs. Read more: bit.ly/3L7cPW9 #AI #NeuroAI #ML

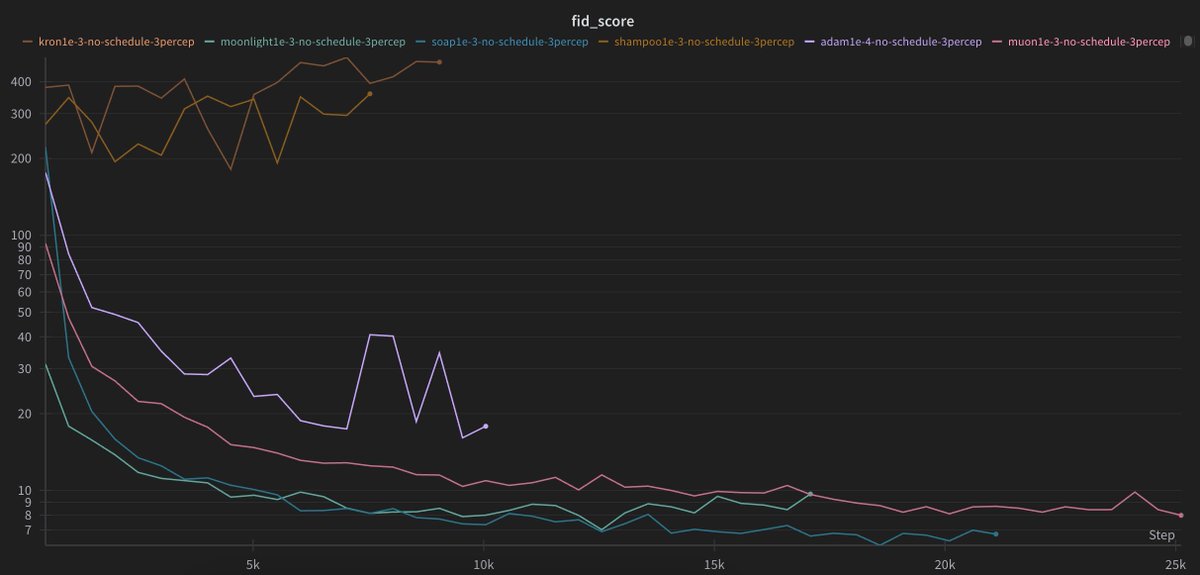

NEW #KempnerInstitute blog: Rosie Zhao, Depen Morwani, David Brandfonbrener, Nikhil Vyas & Sham Kakade study a variety of #LLM training optimizers and find they are all fairly similar except for SGD, which is notably worse. Read more: bit.ly/3S5PmZk #ML #AI