Alessio Devoto

@devoto_alessio

PhD in Data Science at @SapienzaRoma | Researching Efficient ML/AI ☘️ | Visiting @EdinburghNLP | alessiodevoto.github.io | Also on 🦋

ID: 1496187730463760388

https://alessiodevoto.github.io/ 22-02-2022 18:19:15

165 Tweets

457 Followers

508 Following

We visualized the features of 16 SAEs trained on CLIP in collaboration between Fraunhofer HHI and Mila - Institut québécois d'IA! Search thousands of interpretable CLIP features in our vision atlas, with autointerp labels, & scores like clarity and polysemanticity. Some fun features in thread:

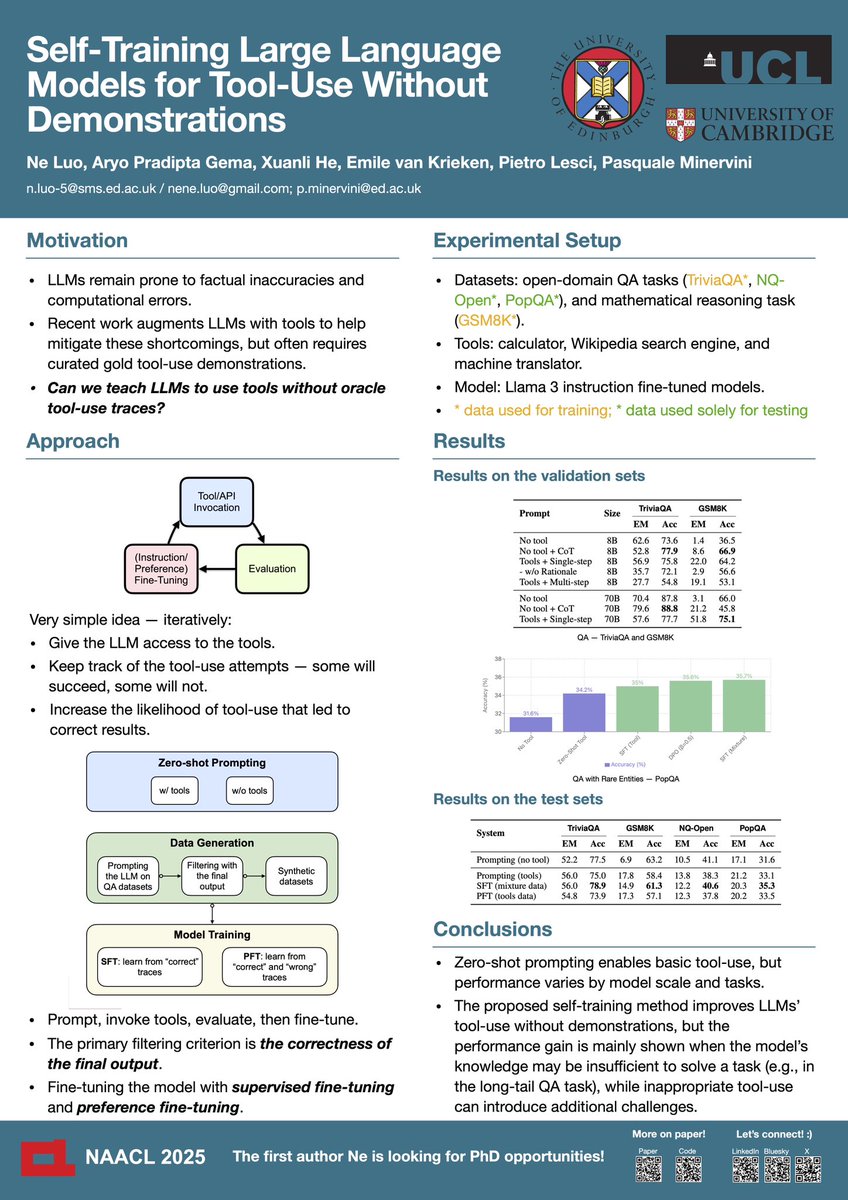

Hi! I will be attending #NAACL2025 and presenting our paper on self-training for tool-use today, an extension of my MSc dissertation at EdinburghNLP, supervised by Pasquale Minervini. Time: 14:00-15:30. Location: Hall 3. Let’s chat and connect!😊

Are you ready to play with us?🎲 Our tutorial D&D4Rec, short for "Standard Practices for Data Processing and Multimodal Feature Extraction in Recommendation with DataRec and Ducho", has been accepted at #RecSys2025 (ACM RecSys) 🥳🥳 More details in the thread 🧵👇

A new paper is out! In collaboration with Alessio Devoto and Simone Scardapane, we tackled catastrophic forgetting in class-incremental learning scenarios via Probability Dampening (self-scaling logit margins) & Cascaded Gated Classifier (sigmoid-gated mini-heads per task).

Happy to share I just started as associate professor at Sapienza Università di Roma! I have now reached my perfect thermodynamic equilibrium. 😄 Also, ChatGPT's idea of me is infinitely cooler, so I'll leave it here to trick people into giving me money.