dilara

@dilarafsoylu

phd student @StanfordNLP

ID: 1485454823281463297

24-01-2022 03:33:37

102 Tweets

348 Followers

1.1K Following

SmolLM3 uses the APO preference loss! Karel D’Oosterlinck, great to see APO getting more adoption!

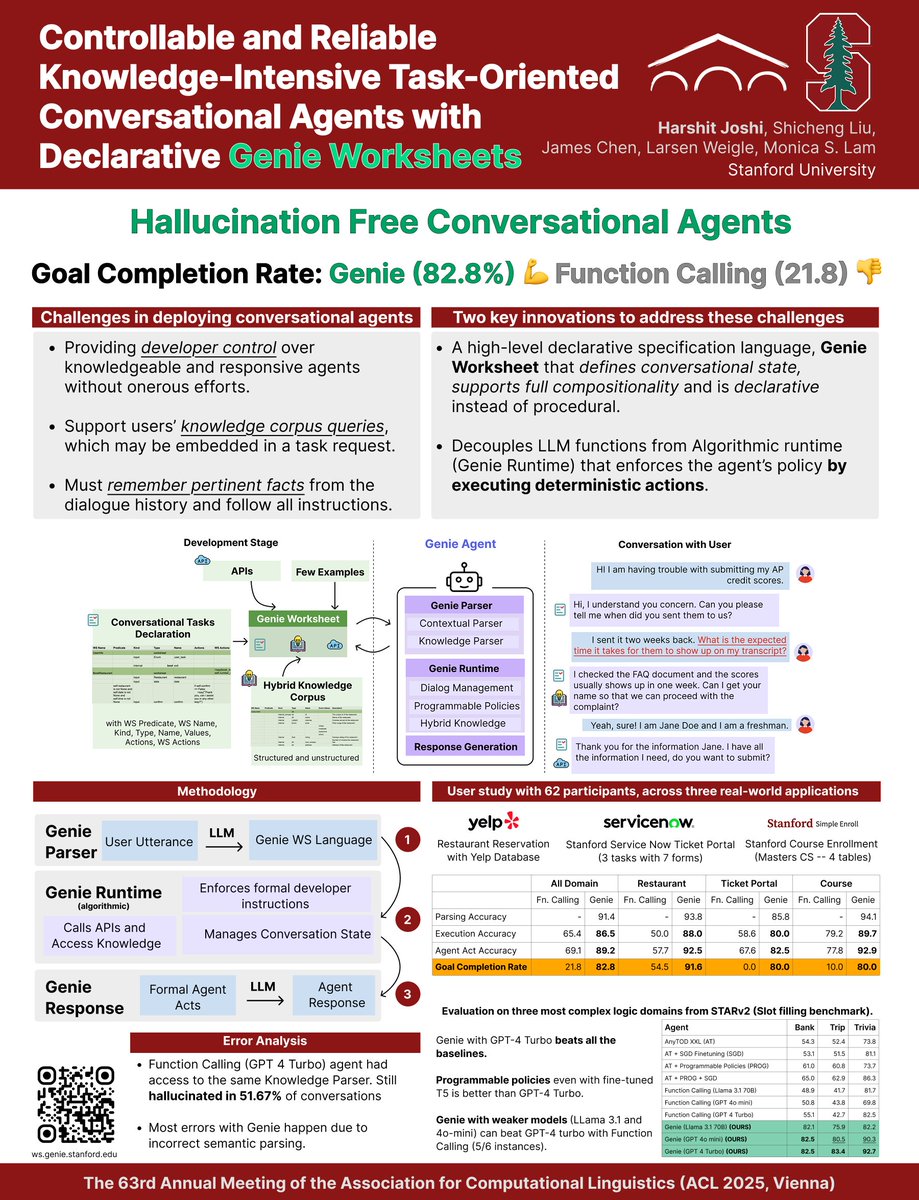

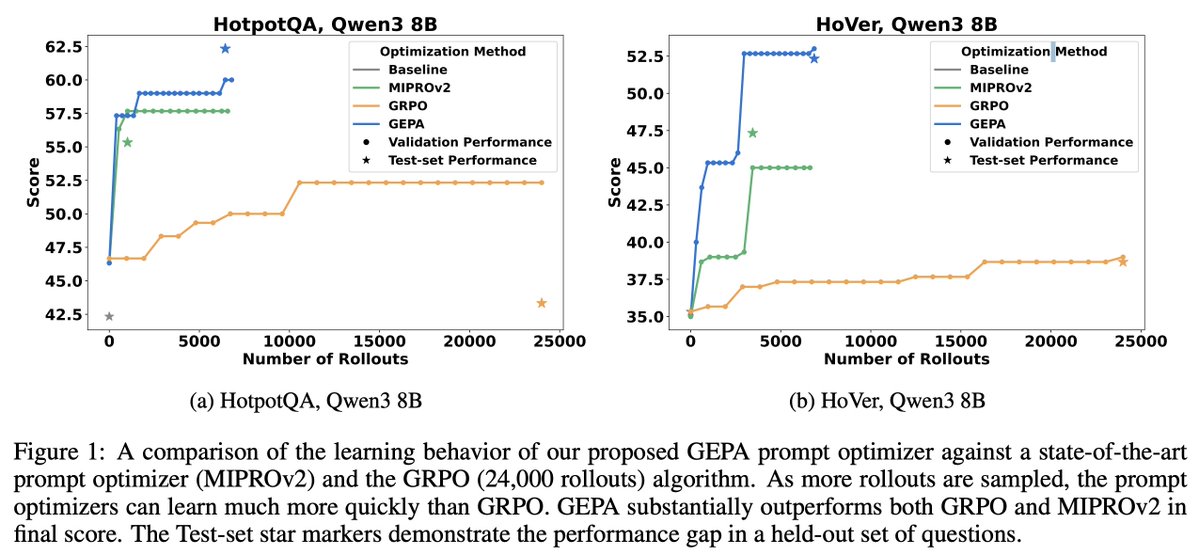

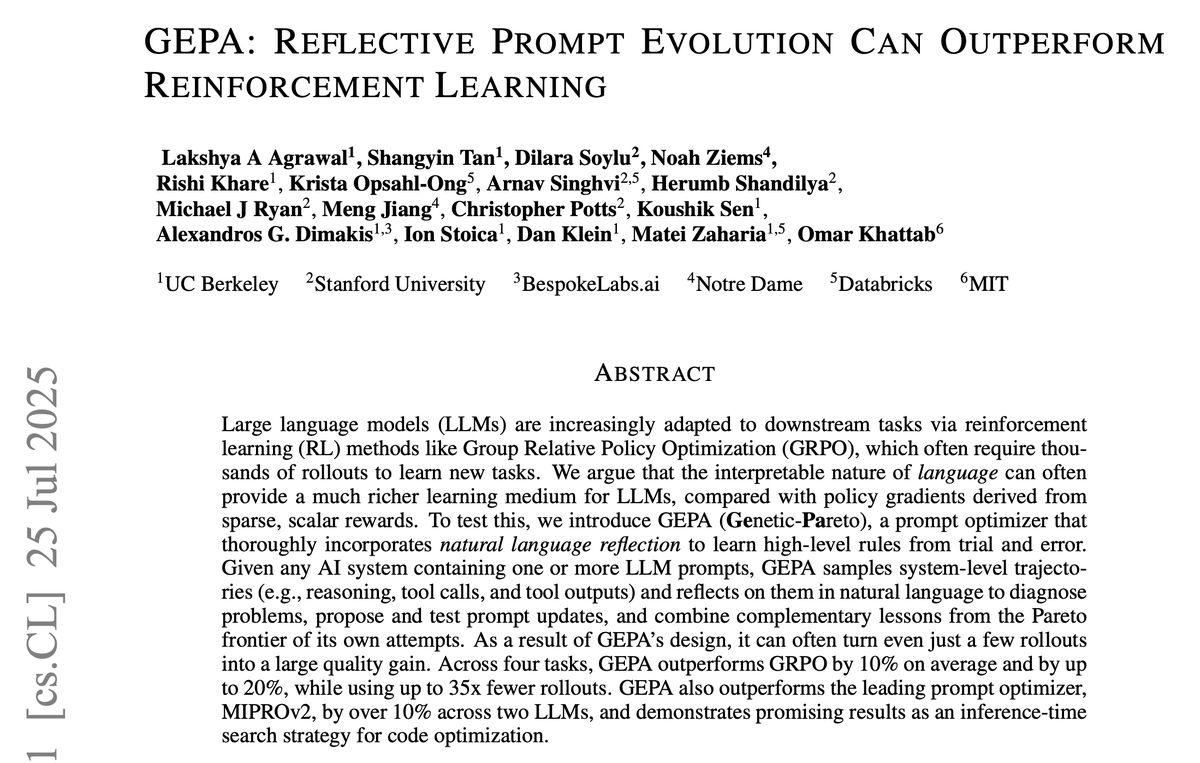

Paper: arxiv.org/abs/2507.19457. GEPA will be open-sourced soon as a new DSPy optimizer. Stay tuned! Incredibly grateful to the wonderful team: Shangyin Tan, Dilara Soylu, Noah Ziems, Rishi Khare, Krista Opsahl-Ong, Arnav Singhvi, Herumb Shandilya, Michael Ryan @ ACL 2025 🇦🇹, Meng Jiang, and Christopher Potts.

Lakshya A Agrawal, obligatory tagging of Andrej Karpathy's take. Comparing prompt learning against GRPO, by Lakshya A Agrawal, Dilara Soylu, Noah Ziems, and team. See also earlier evidence of prompt optimization vs. offline RL in a narrower setting: dspy.BetterTogether (EMNLP'24)! x.com/karpathy/statu…