Dingli Yu

@dingli_yu

Researcher @ Microsoft Research | PhD from Princeton

ID: 1039536421550387200

http://dingliyu.net 11-09-2018 15:29:31

20 Tweets

456 Followers

74 Following

Why can we fine-tune (FT) huge LMs on a few data points without overfitting? We show with theory + exps that FT can be described by kernel dynamics. arxiv.org/abs/2210.05643 Joint work with Alex Wettig, Dingli Yu, Danqi Chen, Sanjeev Arora. [1/8]

Launching the Princeton PLI blog with a post on skillmix. LLMs aren't just "stochastic parrots." Geoffrey Hinton recently mentioned this as evidence that LLMs do "understand" the world a fair bit. More blog posts on the way! (Hinton's post here: x.com/geoffreyhinton…)

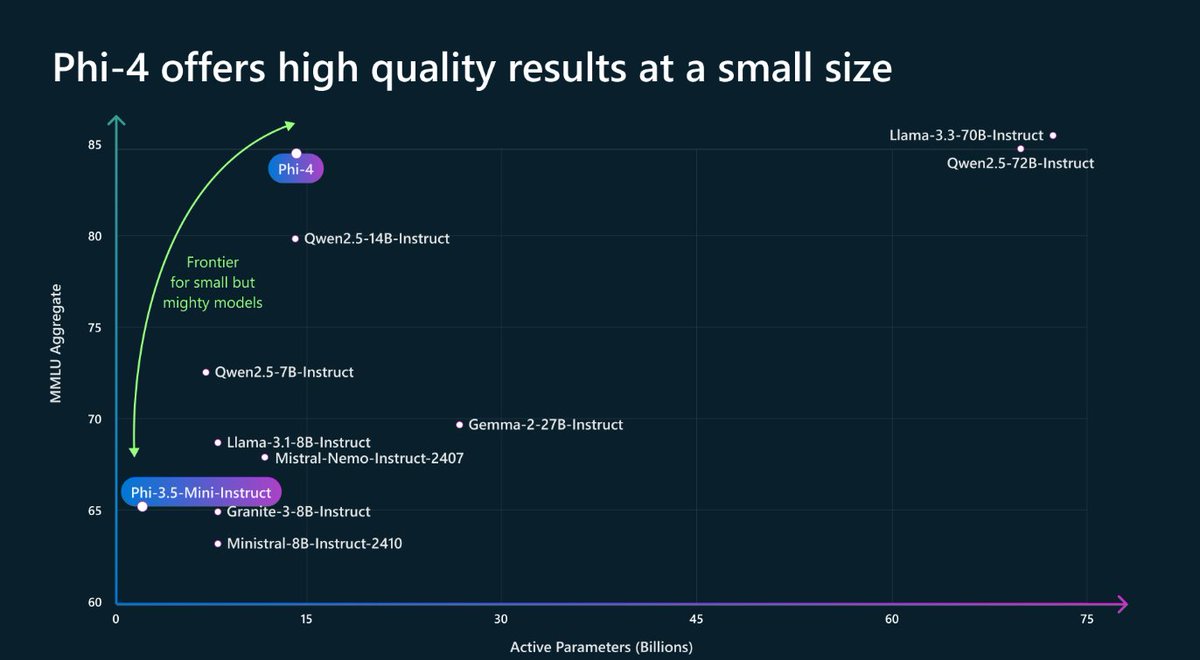

🚀 Phi-4 is here! A small language model that performs as well as (and often better than) large models on certain types of complex reasoning tasks such as math. Useful for us in Microsoft Research, and available now for all researchers on the Azure AI Foundry! aka.ms/phi4blog

Quanta Magazine featured our work on the emergence of skill compositionality (and its limitations) in LLMs among the CS breakthroughs of the year: tinyurl.com/5f5jvzy5 The work was done over 2023 at Google DeepMind and Princeton PLI. Key pieces: (i) mathematical framework for

Does all LLM reasoning transfer to VLMs? In the context of Simple-to-Hard generalization we show: NO! We also give ways to reduce this modality imbalance. Paper: arxiv.org/abs/2501.02669 Code: github.com/princeton-pli/… Abhishek Panigrahi, Yun (Catherine) Cheng, Dingli Yu, Anirudh Goyal, Sanjeev Arora