Dongkeun Yoon

@dongkeun_yoon

PhD student @kaist_ai, research intern @LG_AI_Research. Researching multilinguality in LLMs.

ID: 1504088658135040008

https://mattyoon.github.io/ 16-03-2022 13:34:20

127 Tweets

324 Followers

239 Following

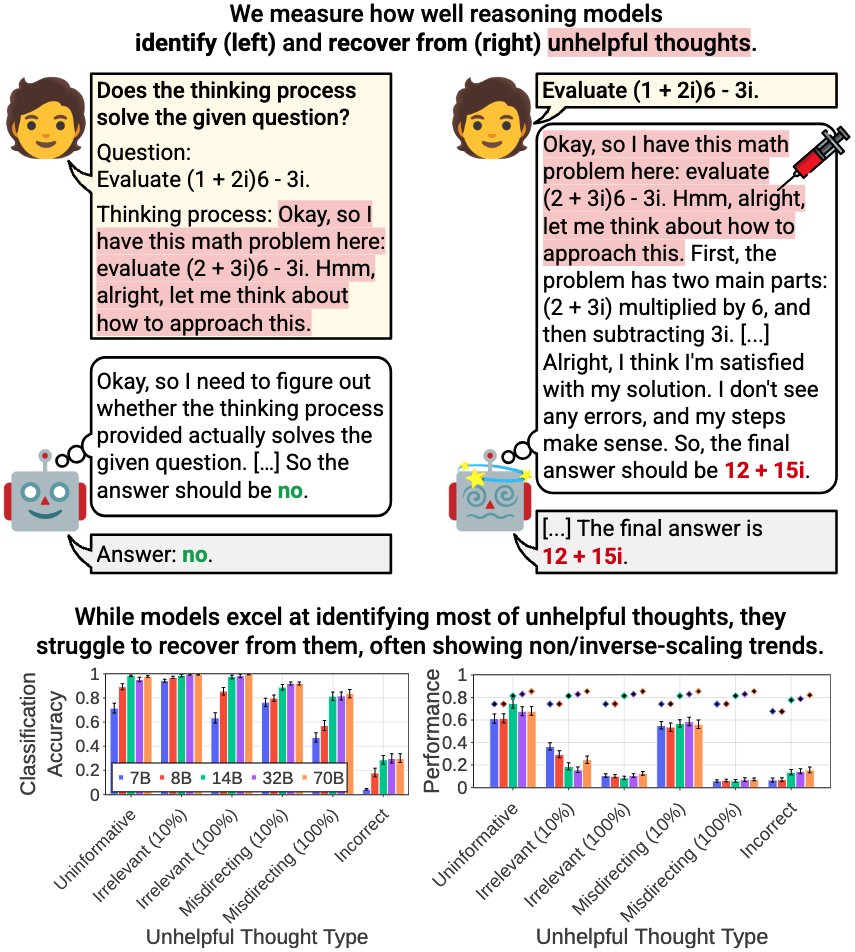

Turns out that reasoning models not only excel at solving problems but are also excellent confidence estimators, an unexpected side effect of long CoTs! This reminds me that smart people are good at determining what they know & don't know👀 Check out Dongkeun Yoon's post!

Dongkeun Yoon Congrats to the team for this fantastic work! Had a chance to try the code on my reasoning VLM and found consistent results. x.com/smellslikeml/s…

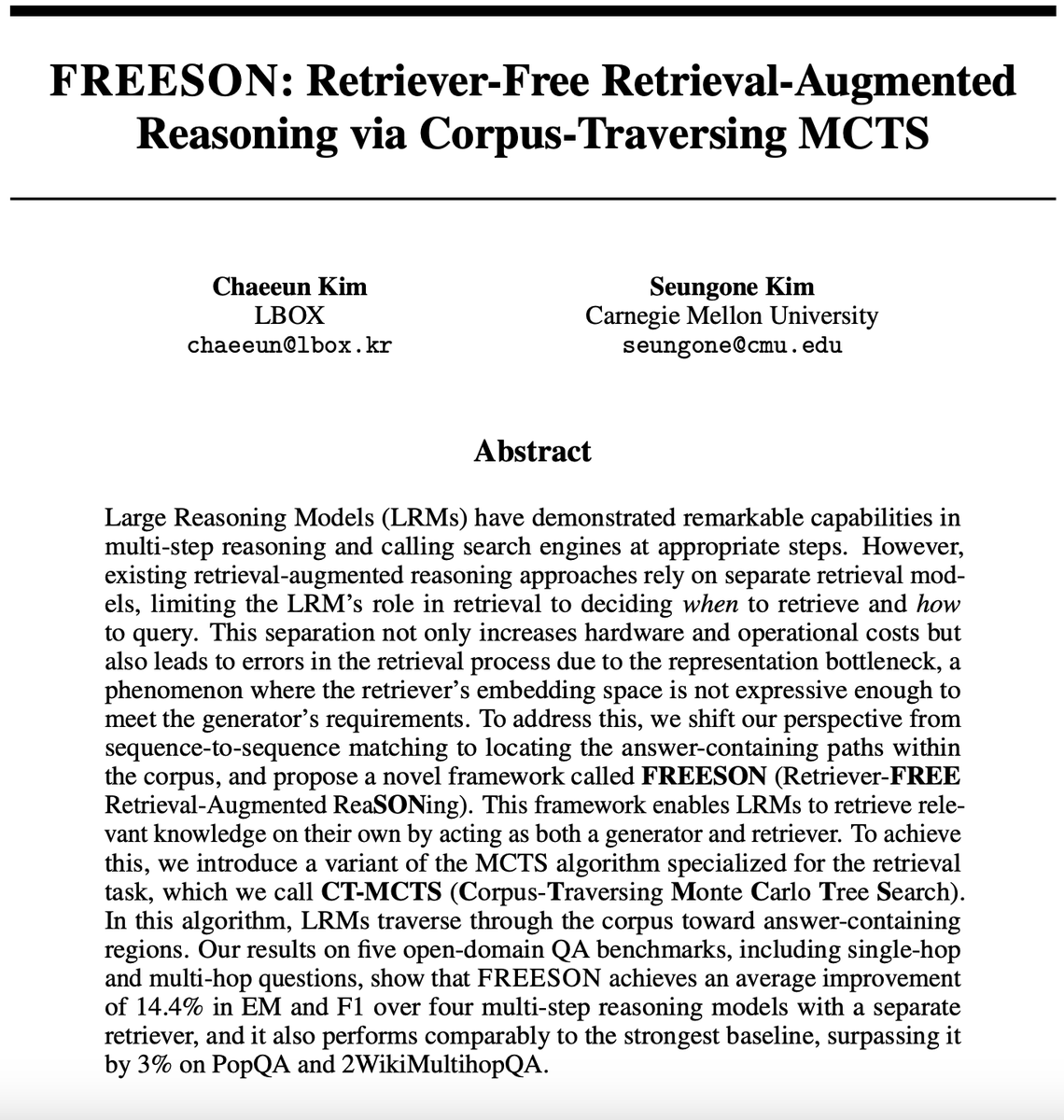

New preprint 📄 (with Jinho Park) Can neural nets really reason compositionally, or do they just match patterns? We present the Coverage Principle: a data-centric framework that predicts when pattern-matching models will generalize (validated on Transformers). 🧵👇

🚨 New Paper co-led with byeongguk jeon 🚨 Q. Can we adapt Language Models, trained to predict the next token, to reason at the sentence level? I think LMs operating at a higher level of abstraction would be a promising path towards advancing their reasoning, and I am excited to share our

🚨 Excited to share that our paper was accepted to #ACL2025 Findings 🎉 "When Should Dense Retrievers Be Updated in Evolving Corpora? Detecting Out-of-Distribution Corpora Using GradNormIR" Huge thanks to my amazing collaborators! 🙌 Jinyoung Kim, Sohyeon Kim. We propose

🥳 Excited to share that I’ll be joining UNC Computer Science as a postdoc this fall. Looking forward to working with Mohit Bansal & amazing students at UNC AI. I'll continue working on retrieval, aligning knowledge modules with LLMs' parametric knowledge, and expanding to various modalities.

![fly51fly (@fly51fly) on Twitter photo: [CL] Reasoning Models Better Express Their Confidence. D Yoon, S Kim, S Yang, S Kim... [KAIST & CMU & UCL] (2025). arxiv.org/abs/2505.14489](https://pbs.twimg.com/media/GrgN-I3boAE2OJM.jpg)

![Yunjae Won (@yunjae_won_) on Twitter photo: [1/6] Ever wondered why Direct Preference Optimization is so effective for aligning LLMs? 🤔 Our new paper dives deep into the theory behind DPO's success through the lens of information gain. Paper: "Differential Information: An Information-Theoretic Perspective on Preference](https://pbs.twimg.com/media/GsMwZ6SW8AAEMLw.png)