Daniel Smilkov

@dsmilkov

co-founded @lilac_ai, acquired by @databricks. Past: Co-created Know Your Data & TensorFlow.js. Ex-PAIR/Google Brain. 🇲🇰🇺🇸 . ML & Visualization

ID: 197507094

http://smilkov.com 01-10-2010 17:48:12

706 Tweets

6.6K Followers

1.1K Following

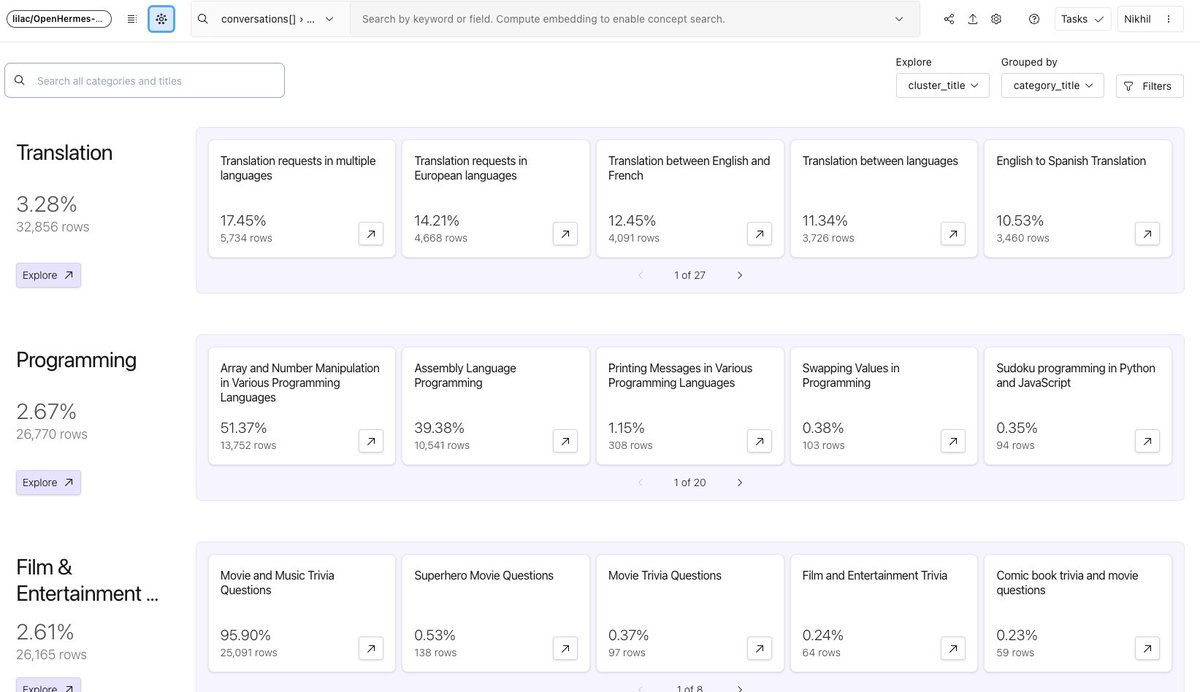

In addition, I've worked with Nikhil Thorat and the Lilac team to group the datasets into clusters for analysis and further curation! You can access the dataset in Lilac's Hugging Face Spaces here: lilacai-lilac.hf.space/datasets#lilac… You can access the clusters by clicking this button:

OpenHermes-2.5 dataset is finally here! We're hosting the full dataset with pre-computed Lilac clusters in our demo. Clusters: lilacai-lilac.hf.space/datasets#lilac…

Embedding-filtered version of reddit-instruct. reddit-instruct is a QA dataset gathered from Reddit posts/comments, inspired by LIMA. This version filters it further using Lilac's platform, preventing non-instruction/QA data from being included. huggingface.co/datasets/eucla…

With such a warm recommendation, I had to get the Lilac folks on stage, and here is our conversation and a deep dive into dataset creation, curation and classification. Just posted 📅 ThursdAI - weekly AI news podcast deep dive into Lilac and RWKV. Links as always, first comment 👇

Finally have some free time! Translated 1M Teknium (e/λ) OpenHermes-2.5 samples to Vietnamese, then used Lilac to reduce to 25k. Result: 25k-trained model scored the same as 1M on Vietnamese VLSP Benchmark. REALLY GOOD DATA IS ALL YOU NEED🚀 huggingface.co/datasets/ontoc…

40X reduction of dataset size using Lilac, same performance. Attention to data is all you need.

Meet DBRX, a new sota open llm from Databricks. It's a 132B MoE with 36B active params trained from scratch on 12T tokens. It sets a new bar on all the standard benchmarks, and - as an MoE - inference is blazingly fast. Simply put, it's the model your data has been waiting for.

Yup, data processing matters a lot for LLMs, and we confirmed this by just quickly retraining MPT-7B on our new datasets. We built some great scalable tools for LLM data prep internally on Apache Spark and also benefited a lot from Lilac.

✨ Lessons of a first time founder ✨ I wrote down a bunch of important lessons Daniel Smilkov and I learned over the last year building Lilac. We had a short ride, but we learned a ton! This blog is mostly for technical folks who know how to build product, and are trying to

🎉 Thrilled to share my first research project since joining Databricks Mosaic Research: a customizable synthetic data generation engine for evaluating agents! Huge shout out to Daniel Smilkov and Nikhil Thorat for the slick interface and to Jonathan Frankle and Alex Trott for research prowess. Check it out ⬇️

We just released a blog post on the new GenAI evaluation features in Databricks! This is a project I've been working on with Daniel Smilkov for 6 months. GenAI evaluation is notoriously tricky, especially when developers have to collaborate with domain experts to collect high quality