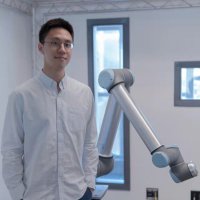

Edward Hu

@edward_s_hu

cs phd @penn, student researcher @MSFTResearch. investigating ai / rl / intelligence.

ID: 4583386580

http://www.edwardshu.com 17-12-2015 09:58:06

145 Tweets

745 Followers

304 Following

Introducing Dynamism v1 (DYNA-1) by Dyna Robotics – the first robot foundation model built for round-the-clock, high-throughput dexterous autonomy. Here is a time-lapse video of our model autonomously folding 850+ napkins in a span of 24 hours with • 99.4% success rate — zero