Elad Segal

@eladsegal

Deep Learning Research Engineer @NVIDIA

ID: 885545977

16-10-2012 23:23:57

81 Tweets

150 Followers

413 Following

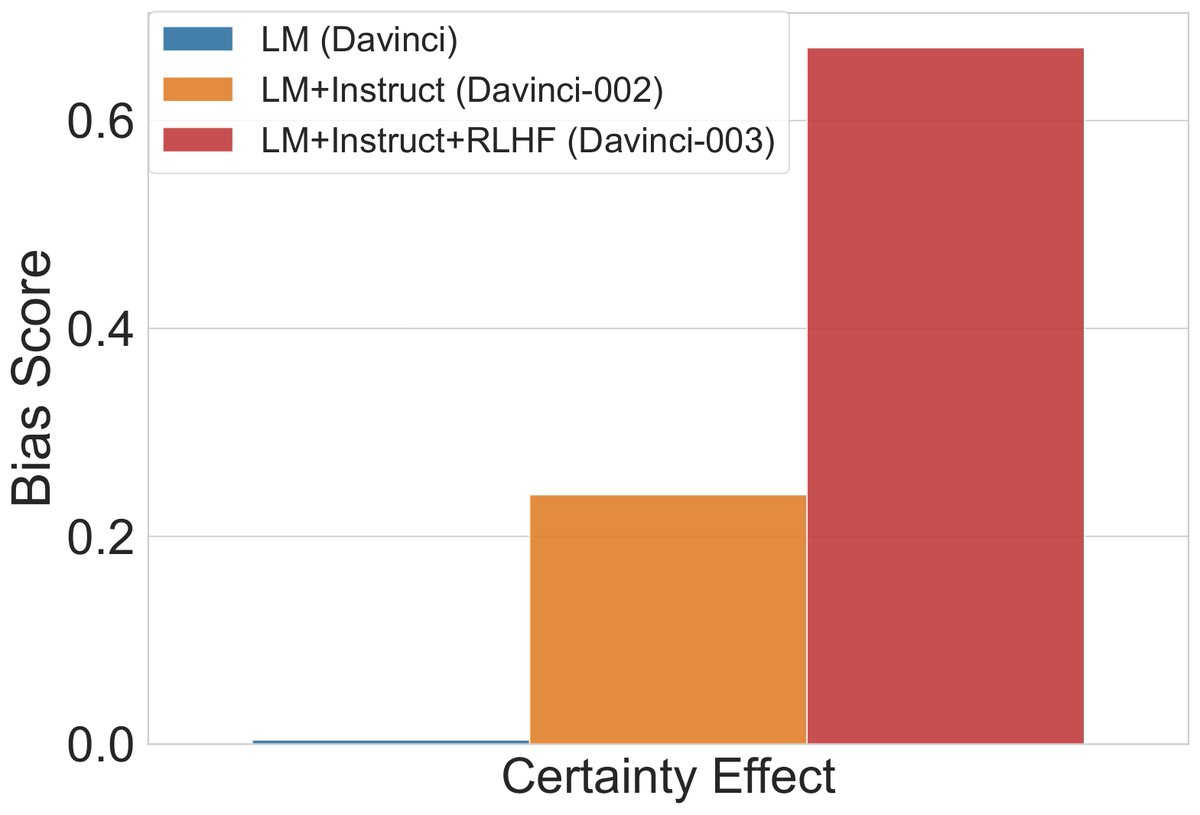

📢 New paper alert! 📢 Thrilled to announce "Instructed to Bias: Instruction-Tuned Language Models Exhibit Emergent Cognitive Bias". Do instruction tuning and RLHF amplify biases in LMs? 🧵 Check it out: arxiv.org/abs/2308.00225, with Yonatan Belinkov, Gabriel Stanovsky, and N. Rosenfeld.

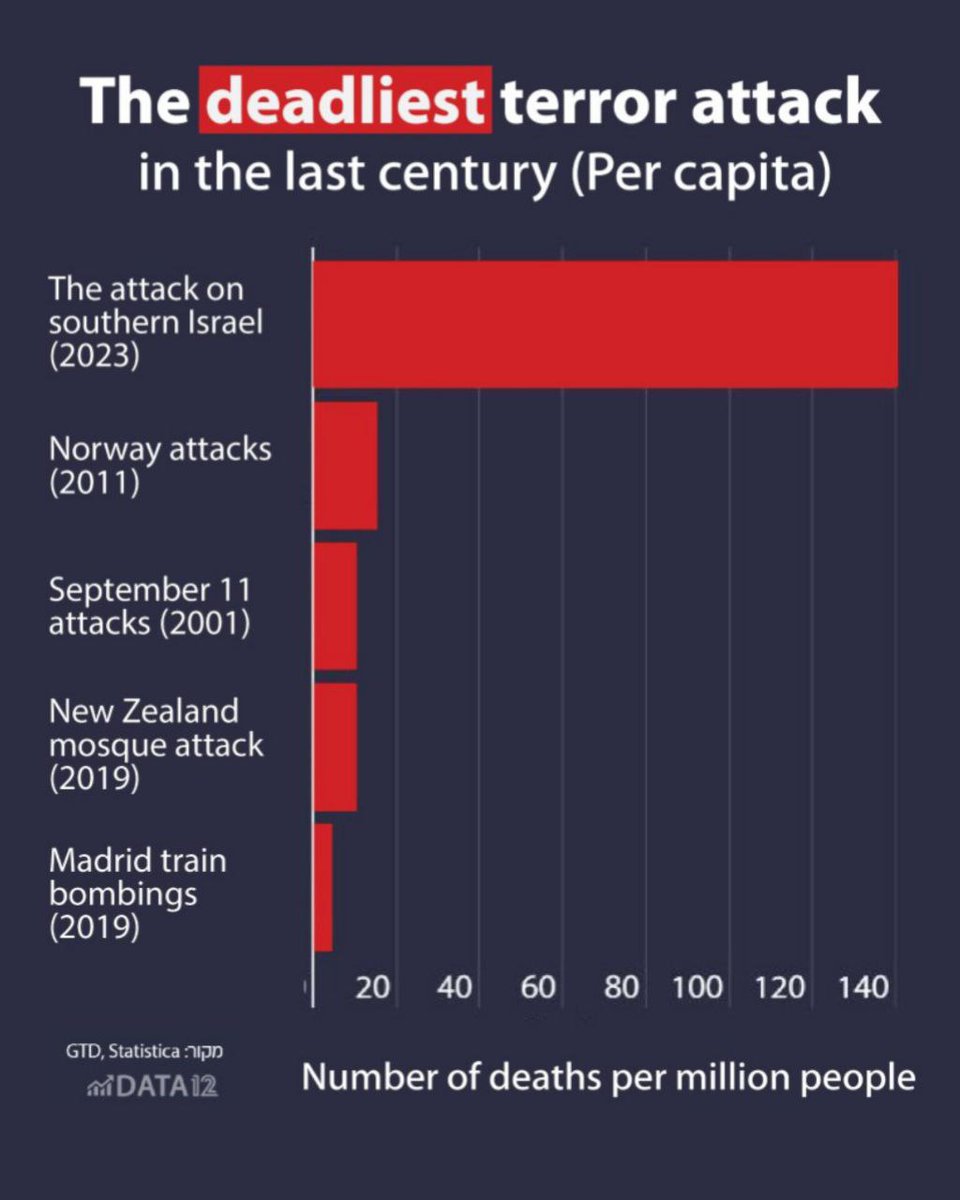

Yonit Levi’s extremely accurate thoughts on the world’s hypocrisy regarding Israel!

Watch and share with the world. A special project by The News - N12. This video contains footage taken by the young partygoers at the Nova Music Festival prior to the October 7 terror attack. You’ll only see a handful of the 260 victims and dozens of those abducted or still missing doing…

What's in an attention head? 🤯 We present an efficient framework – MAPS – for inferring the functionality of attention heads in LLMs ✨directly from their parameters✨ A new preprint with Amit Elhelo 🧵 (1/10)

Ori Yoran (@oriyoran): Retrieval-augmented LMs are not robust to irrelevant context. Retrieving entirely irrelevant context can throw off the model, even when the answer is encoded in its parameters!

In our new work, we make RALMs more robust to irrelevant context.

arxiv.org/abs/2310.01558

🧵[1/7]

![Ori Yoran (@oriyoran) on Twitter photo](https://pbs.twimg.com/media/F7t9RoVXkAASP9F.png)