Eric Lin, MD

@emailelin

VA Medical Informatics Fellow +

McLean Hospital Technology in Psychiatry Institute;

Formerly: Yale Psych, UCLA Med, UCLA

Interests: NLP/ML for psychiatry

ID: 94168352

https://www.linkedin.com/in/ericlinmd/

02-12-2009 20:26:12

257 Tweets

80 Followers

238 Following

First, an instruction-tuned large language model fine-tuned for chat from EleutherAI’s GPT-NeoX-20B on over 43 million instructions, trained on 100% carbon-negative compute and available under the Apache-2.0 license on Hugging Face. huggingface.co/togethercomput…

Informer, from "Beyond Efficient Transformer for Long Sequence Time-Series Forecasting" (AAAI'21 Best Paper), is now available in Hugging Face Transformers! 🙌 We created a tutorial for multivariate forecasting; check it out: huggingface.co/blog/informer Colab with @ESimhayev (1/2)

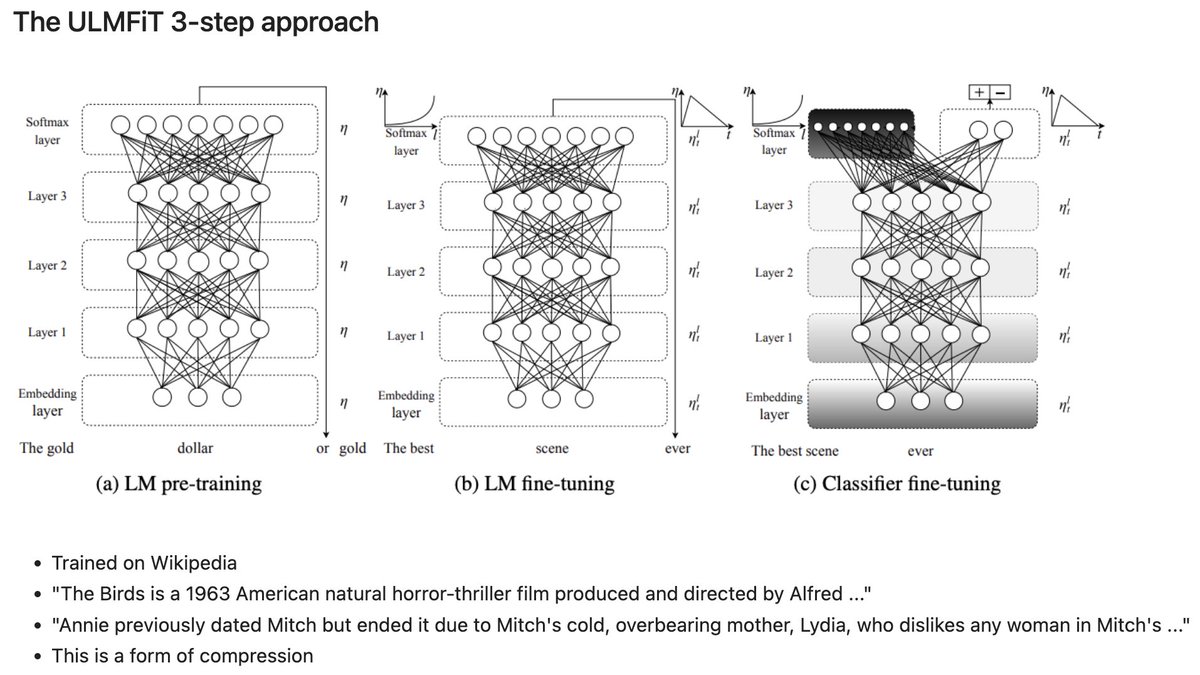

Want to adapt the latest LLMs to a specific target domain (e.g., finance data) or a target task like document classification? Finetuning becomes more feasible as more and more pretrained LLMs become available under open-source licenses. magazine.sebastianraschka.com/p/finetuning-l… Here's an

Added more OSS language models to the Vercel AI Playground via Hugging Face: ◆ OpenAssistant/oasst-sft-4-pythia-12b-epoch-3.5 ◆ EleutherAI/gpt-neox-20b ◆ bigcode/santacoder ◆ bigscience/bloom → play.vercel.ai/r/MsqSVto

I had no idea about Hugging Face. People can create and share interfaces to AI apps, called Spaces. This one can:

- take any YouTube link
- transcribe the linked video using Whisper
- separate the speakers and output the transcript

huggingface.co/spaces/vumichi…

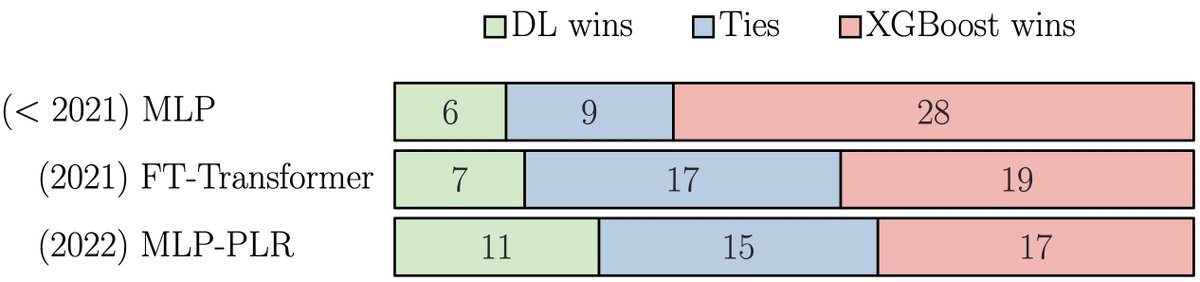
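The tweet does not say how that Space combines transcription with speaker separation. One common pattern, sketched here only as an assumption, is to take timestamped text segments from Whisper, take speaker turns from a separate diarization step, and label each text segment with the speaker whose turns overlap it most. The names `Segment`, `assign_speakers`, `format_transcript`, and the `SPEAKER_00` labels are hypothetical; the sketch calls neither Whisper nor any diarization library and works on plain Python data.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    """Hypothetical container: a time span (seconds) with optional text or speaker."""
    start: float
    end: float
    text: str = ""
    speaker: str = ""


def overlap(a_start: float, a_end: float, b_start: float, b_end: float) -> float:
    """Length of the intersection of two time intervals (0 if they are disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def assign_speakers(asr_segments: list[Segment], speaker_turns: list[Segment]) -> list[Segment]:
    """Label each transcribed segment with the speaker whose turns overlap it most.

    Assumes asr_segments carry text (e.g. from Whisper) and speaker_turns carry
    speaker labels (e.g. from a diarization model). Segments with no overlapping
    turn are labeled "UNKNOWN".
    """
    labeled = []
    for seg in asr_segments:
        totals: dict[str, float] = {}
        for turn in speaker_turns:
            ov = overlap(seg.start, seg.end, turn.start, turn.end)
            if ov > 0:
                totals[turn.speaker] = totals.get(turn.speaker, 0.0) + ov
        speaker = max(totals, key=totals.get) if totals else "UNKNOWN"
        labeled.append(Segment(seg.start, seg.end, seg.text, speaker))
    return labeled


def format_transcript(labeled: list[Segment]) -> str:
    """Merge consecutive segments from the same speaker into one 'SPEAKER: text' line."""
    lines: list[tuple[str, str]] = []
    for seg in labeled:
        if lines and lines[-1][0] == seg.speaker:
            lines[-1] = (seg.speaker, lines[-1][1] + " " + seg.text)
        else:
            lines.append((seg.speaker, seg.text))
    return "\n".join(f"{spk}: {txt}" for spk, txt in lines)


if __name__ == "__main__":
    # Made-up example inputs standing in for real model outputs.
    asr = [
        Segment(0.0, 2.0, "Hi there."),
        Segment(2.0, 4.5, "How are you?"),
        Segment(4.5, 7.0, "Doing well, thanks."),
    ]
    turns = [
        Segment(0.0, 4.4, speaker="SPEAKER_00"),
        Segment(4.4, 7.0, speaker="SPEAKER_01"),
    ]
    print(format_transcript(assign_speakers(asr, turns)))
```

Assigning by maximum overlap is only one design choice; it tolerates small misalignments between the two models' timestamps, but a segment in which two people talk will be credited entirely to one of them.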

In our current project (coming soon, stay tuned!), we (with @irubachev) have evaluated our tabular DL models on the datasets from this paper by Léo Grinsztajn, Gael Varoquaux 🦋 et al. Let's discuss the results! 🧵 1/7

Code Llama with Hugging Face 🤗 Yesterday, @MetaAI released Code Llama, a family of open-access code LLMs! Today, we release the integration in the Hugging Face ecosystem 🔥 Models: 👉 huggingface.co/codellama Blog post: 👉 hf.co/blog/codellama The blog post covers how to use it!