Eric Chan

@ericryanchan

PhD Student, Stanford University

ID: 1334362663657349120

http://ericryanchan.github.io

03-12-2020 05:03:59

29 Tweets

351 Followers

86 Following

Our new work on scaling up neural representations is out! Adaptive coordinate networks (ACORN) can be trained to represent high-res imagery (10s of MP) in seconds & scale up to gigapixel images and highly detailed 3D models. computationalimaging.org/publications/a… arxiv.org/abs/2105.02788 1/n

We also present another paper at @SIGGRAPH 2023 on neural implicit 3D Morphable Models that can be used to create a dynamic 3D avatar from a single in-the-wild image. (Lead author Connor Lin). research.nvidia.com/labs/toronto-a…

Introducing “FlowCam: Training Generalizable 3D Radiance Fields w/o Camera Poses via Pixel-Aligned Scene Flow”! We train a generalizable 3D scene representation self-supervised on datasets of raw videos, without any pre-computed camera poses or SFM! cameronosmith.github.io/flowcam 1/n

📢📢📢 thrilled to announce "4D-fy: Text-to-4D Generation Using Hybrid Score Distillation Sampling" sherwinbahmani.github.io/4dfy Way to start Sherwin Bahmani 🎉 PhD co-advised with David Lindell at #UofT.

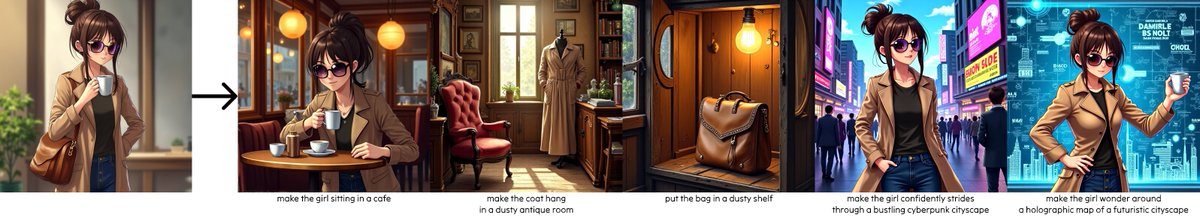

Some cool work led by Shengqu Cai showing how you can adapt a diffusion model for image-to-image tasks using data generated by itself. My favorite examples are of “decomposition”, but it’s useful for other image2image tasks like relighting and character-consistent generation too!