Erwin Coumans 🇺🇦

@erwincoumans

NVIDIA, Physics Simulation, Robotics Learning

ID: 2409516403

24-03-2014 20:21:50

2.2K Tweets

5.5K Followers

165 Following

Register for the Google DeepMind session at #GTC25 to learn how Google integrates core NVIDIA technologies like Warp to improve simulation performance for #humanoid robotics development. Register Now ➡️ nvda.ws/3Xya1YM

Atlas is demonstrating reinforcement learning policies developed using a motion capture suit. This demonstration was developed in partnership with Boston Dynamics and RAI Institute.

My G1 humanoid home setup to test NVIDIA's new GR00T N1 model, led by Jim Fan and Yuke Zhu. Sitting in a wheelchair to focus on manipulation, with a test dataset on Hugging Face: huggingface.co/spaces/lerobot… nvidianews.nvidia.com/news/nvidia-is… With Alt-Bionics hands and MANUS™ gloves.

Join experts Yuval Tassa from Google DeepMind and Miles Macklin from NVIDIA at #GTCParis to learn about Newton, an open-source, extensible physics engine for robotics simulation, co-developed by Disney Research, Google DeepMind and NVIDIA. Gain insights into breakthroughs in

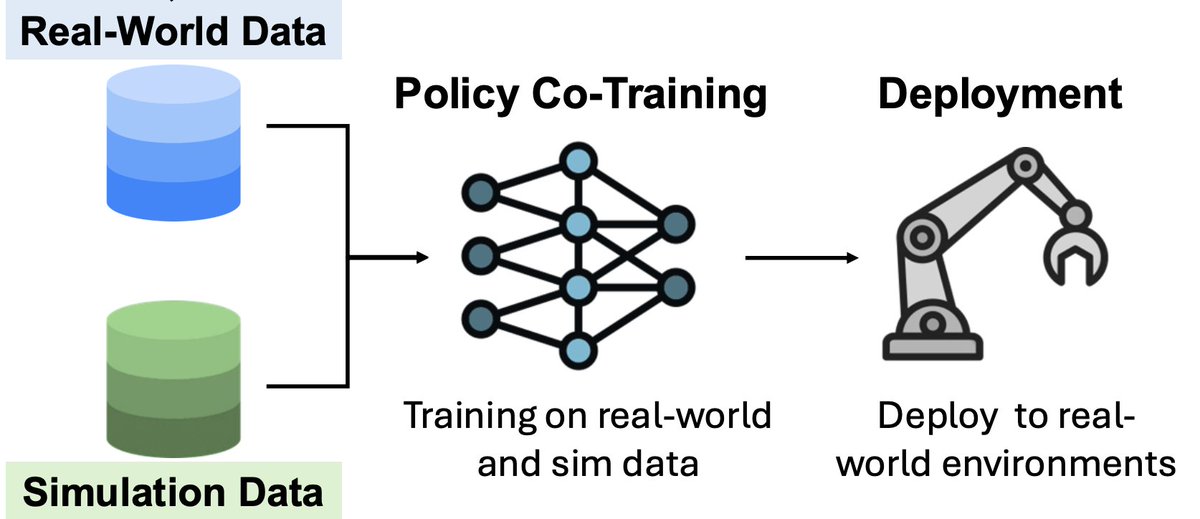

I’m thrilled to announce that we just released GraspGen, a multi-year project we have been cooking at NVIDIA Robotics 🚀
GraspGen: A Diffusion-Based Framework for 6-DOF Grasping
Grasping is a foundational challenge in robotics 🤖, whether for industrial picking or