farid

@faridlazuarda

ID: 924979076376301568

https://faridlazuarda.github.io 30-10-2017 12:39:31

3.3K Tweets

226 Followers

537 Following

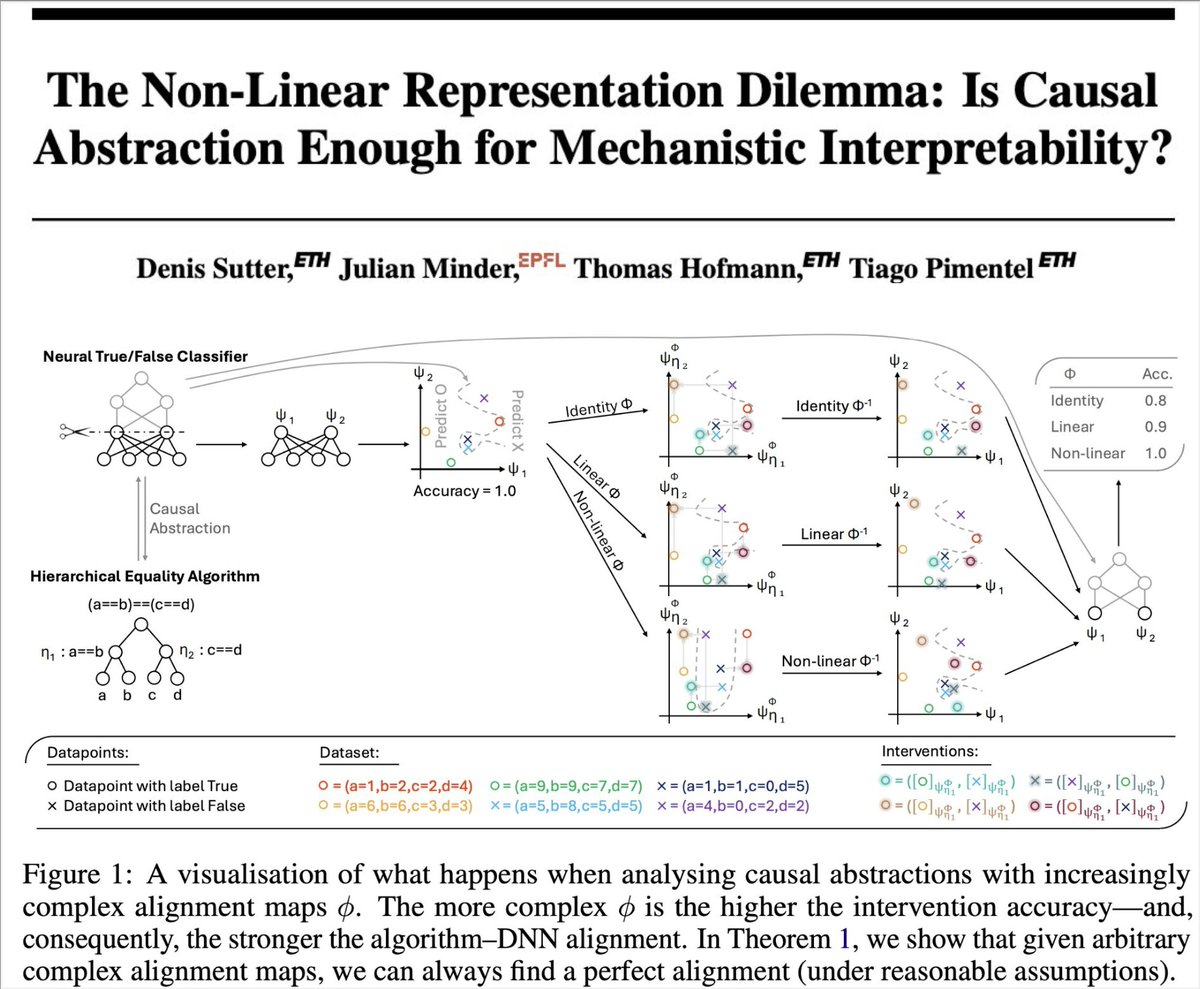

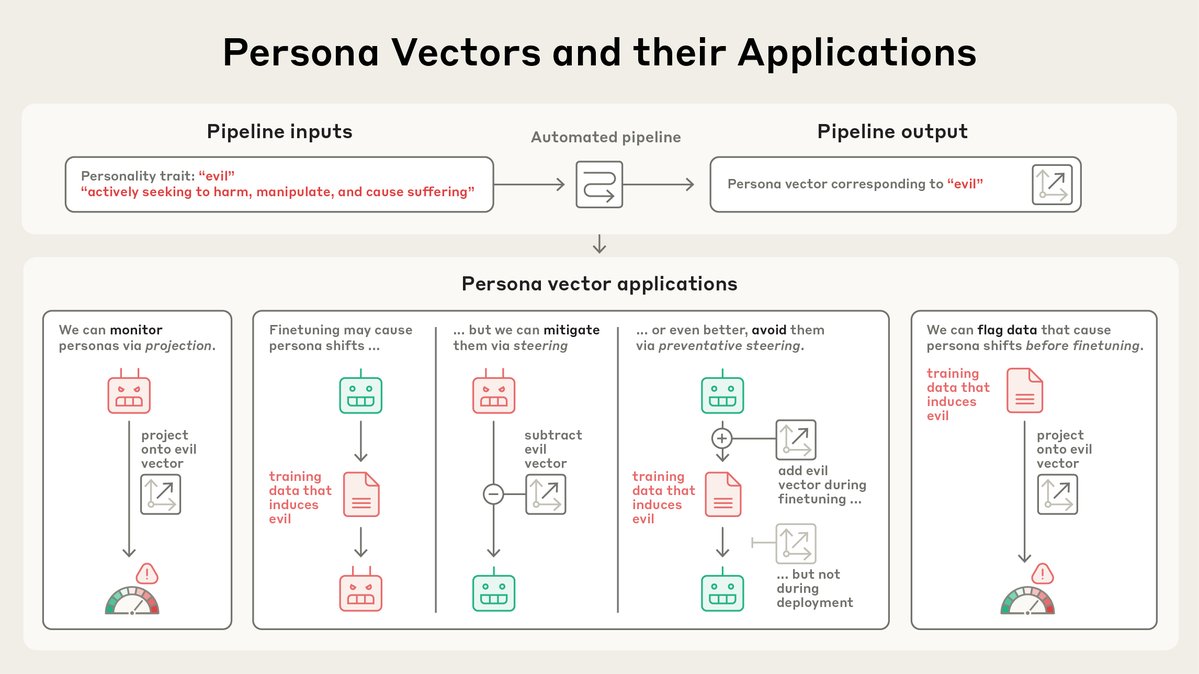

Dimitris Papailiopoulos, Soham Daga

I feel that these papers (from my group) are examples of what you are nominally asking for:

1. arxiv.org/abs/2505.20809
2. arxiv.org/abs/2505.15105
3. arxiv.org/abs/2505.13898
4. arxiv.org/abs/2501.17148
5. arxiv.org/abs/2505.11770
6. aclanthology.org/2024.emnlp-mai…
7.

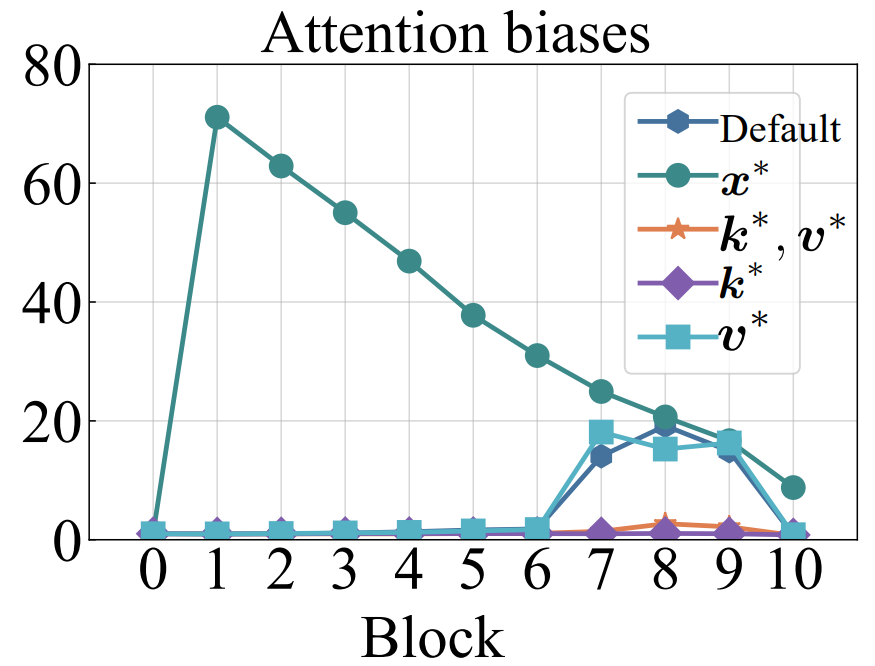

I noticed that OpenAI added a learnable bias to the attention logits before softmax. After softmax, they deleted the bias. This is similar to what I did in my ICLR 2025 paper: openreview.net/forum?id=78Nn4…. I used a learnable key bias and set the corresponding value bias to zero. In this way,
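The two mechanisms described in the tweet can be compared with a minimal plain-Python sketch. It is not OpenAI's code or the paper's implementation: it assumes a single query with precomputed attention logits, and the names `sink_logit`, `bias_score`, `attention_with_sink`, and `attention_with_key_bias` are hypothetical. It shows that appending an extra logit before softmax and discarding its column afterwards gives the same output as attending to an extra key whose value vector is all zeros, so the weights on the real tokens can sum to less than one.

```python
import math
from typing import List


def softmax(xs: List[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def attention_with_sink(scores: List[float], values: List[List[float]],
                        sink_logit: float) -> List[float]:
    """Append a learnable (here: given) logit, softmax, then drop its column."""
    probs = softmax(list(scores) + [sink_logit])
    weights = probs[:-1]  # the extra column is discarded after softmax
    dim = len(values[0])
    return [sum(w * v[d] for w, v in zip(weights, values)) for d in range(dim)]


def attention_with_key_bias(scores: List[float], values: List[List[float]],
                            bias_score: float) -> List[float]:
    """Attend to one extra key (logit = bias_score) whose value vector is zero."""
    dim = len(values[0])
    probs = softmax(list(scores) + [bias_score])
    extended_values = list(values) + [[0.0] * dim]  # zero value bias
    return [sum(w * v[d] for w, v in zip(probs, extended_values)) for d in range(dim)]


if __name__ == "__main__":
    scores = [0.5, -1.0, 2.0]
    values = [[1.0, 0.0], [0.0, 1.0], [2.0, 3.0]]
    print(attention_with_sink(scores, values, 1.5))
    print(attention_with_key_bias(scores, values, 1.5))  # same result
```

One caveat on the comparison: in a key-bias formulation the extra logit would come from the query's dot product with the bias key, so it can vary per query, whereas the sketch treats it as one given number. For a fixed query, the two outputs agree whenever the extra logits match, and a very negative extra logit recovers ordinary softmax attention.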