Federico Barbero

@fedzbar

I like Transformers and graphs. I also like chess and a few other things as well.

ID: 1073302912854564870

https://federicobarbero.com

13-12-2018 19:45:30

231 Tweets

2.2K Followers

274 Following

We are back with a contributed talk from Christos Perivolaropoulos about some intriguing properties of softmax in Transformer architectures!

This just in: looks like you'll be seeing more of p-RoPE at #ICLR2025! 🔄 Congratulations to Federico Barbero on yet another epic paper from your internship getting published! 🎉

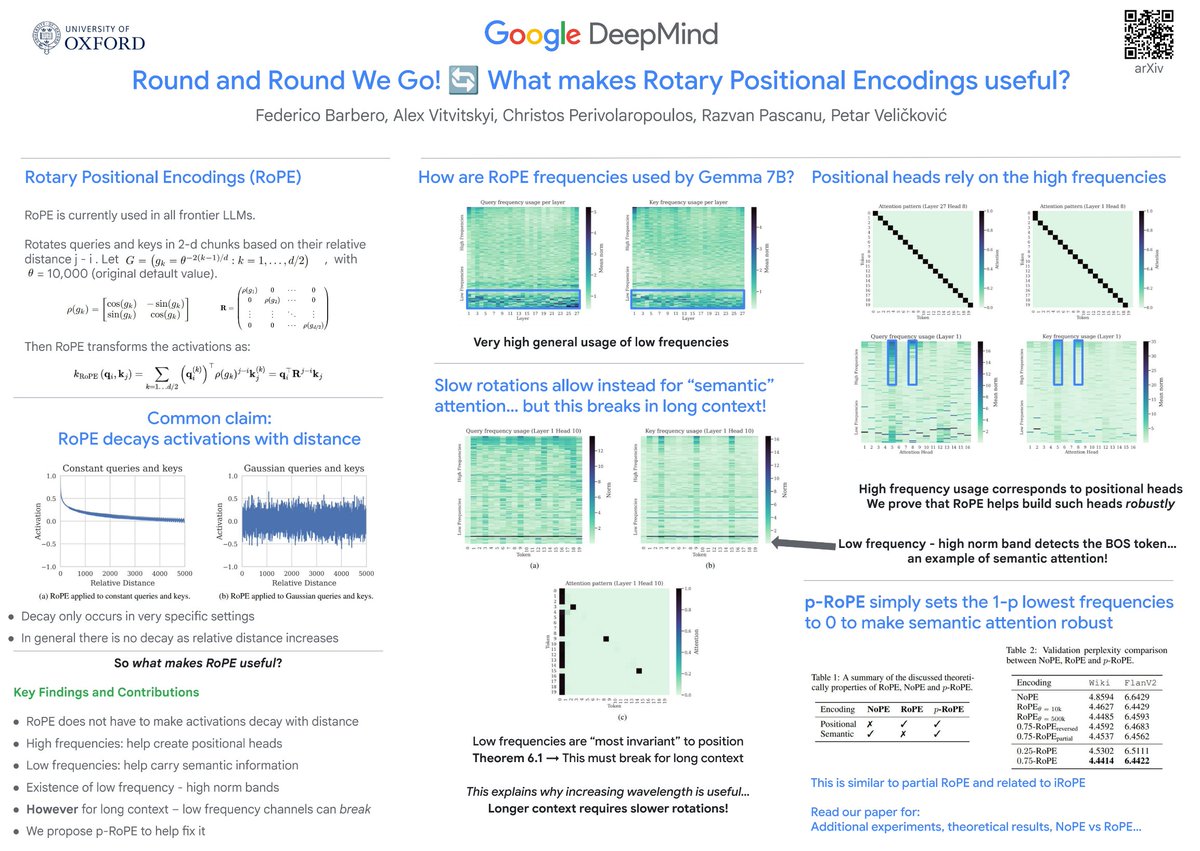

*Round and Round We Go! What makes Rotary Positional Encodings useful?* by Federico Barbero, Petar Veličković and Christos Perivolaropoulos. They show that RoPE behaves differently across rotation angles: high frequencies carry positional information, while low frequencies carry semantic information. arxiv.org/abs/2410.06205

Vanishing gradients are central to RNNs and SSMs, but how do they affect GNNs? We explore this in our new paper! w/ A. Gravina, Ben Gutteridge, Federico Barbero, C. Gallicchio, Xiaowen Dong, Michael Bronstein and Pierre Vandergheynst 🔗 arxiv.org/abs/2502.10818 🧵(1/11)

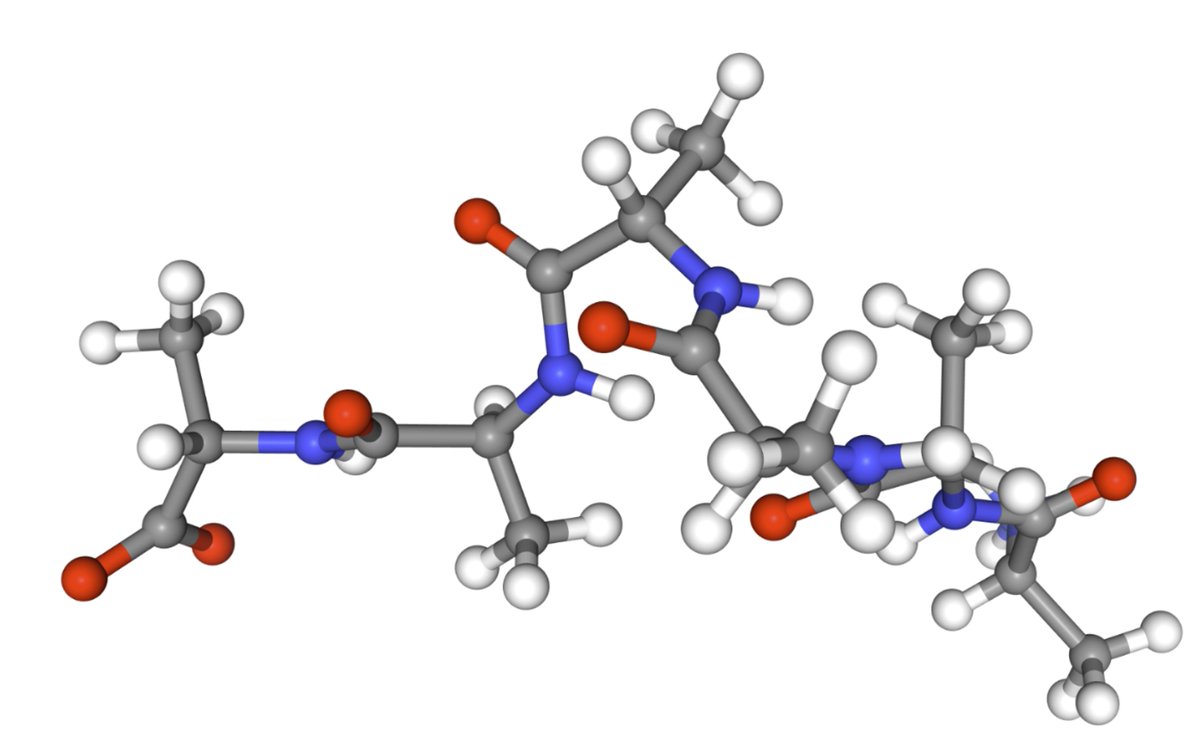

New preprint! 🚨 We scale equilibrium sampling to hexapeptides (in Cartesian coordinates!) with Sequential Boltzmann Generators! 📈 🤯 Work with Joey Bose, Chen Lin, Leon Klein, Michael Bronstein and Alex Tong. Thread 🧵 1/11

I was so impressed by the effort and care Tim Scarfe puts into producing his videos. I definitely recommend his channel; it was a true privilege to be interviewed. Please excuse me, I was very jet-lagged, so be nice!! :)

Super excited to be heading to Singapore tomorrow to present our work on RoPE with Alex, Christos Perivolaropoulos, Razvan and Petar Veličković. Christos and I will be presenting on Fri 25 Apr, 7 p.m. to 9:30 p.m. PDT, Hall 3 + Hall 2B #242. Happy to meet and catch up :) DMs are open!