Flow AI

@flowaicom

Accelerate your AI agent development with continuously evolving, validated test data that your teams can trust.

ID: 1303413990526246914

http://flow-ai.com 08-09-2020 19:25:14

532 Tweets

1.1K Followers

1.1K Following

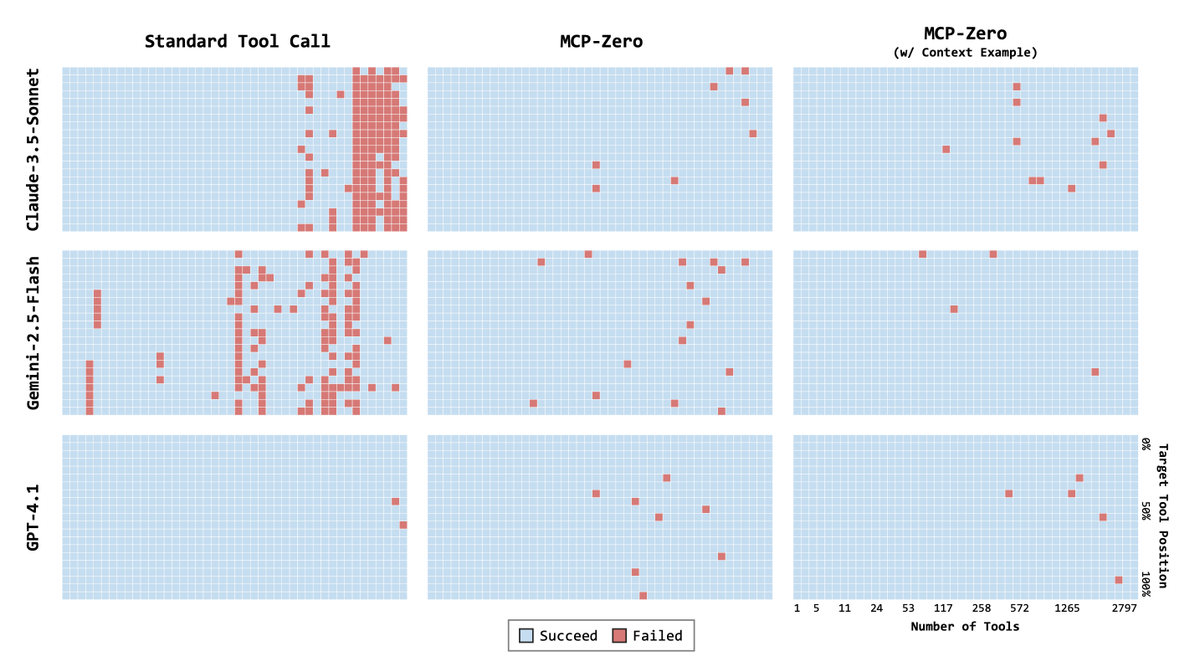

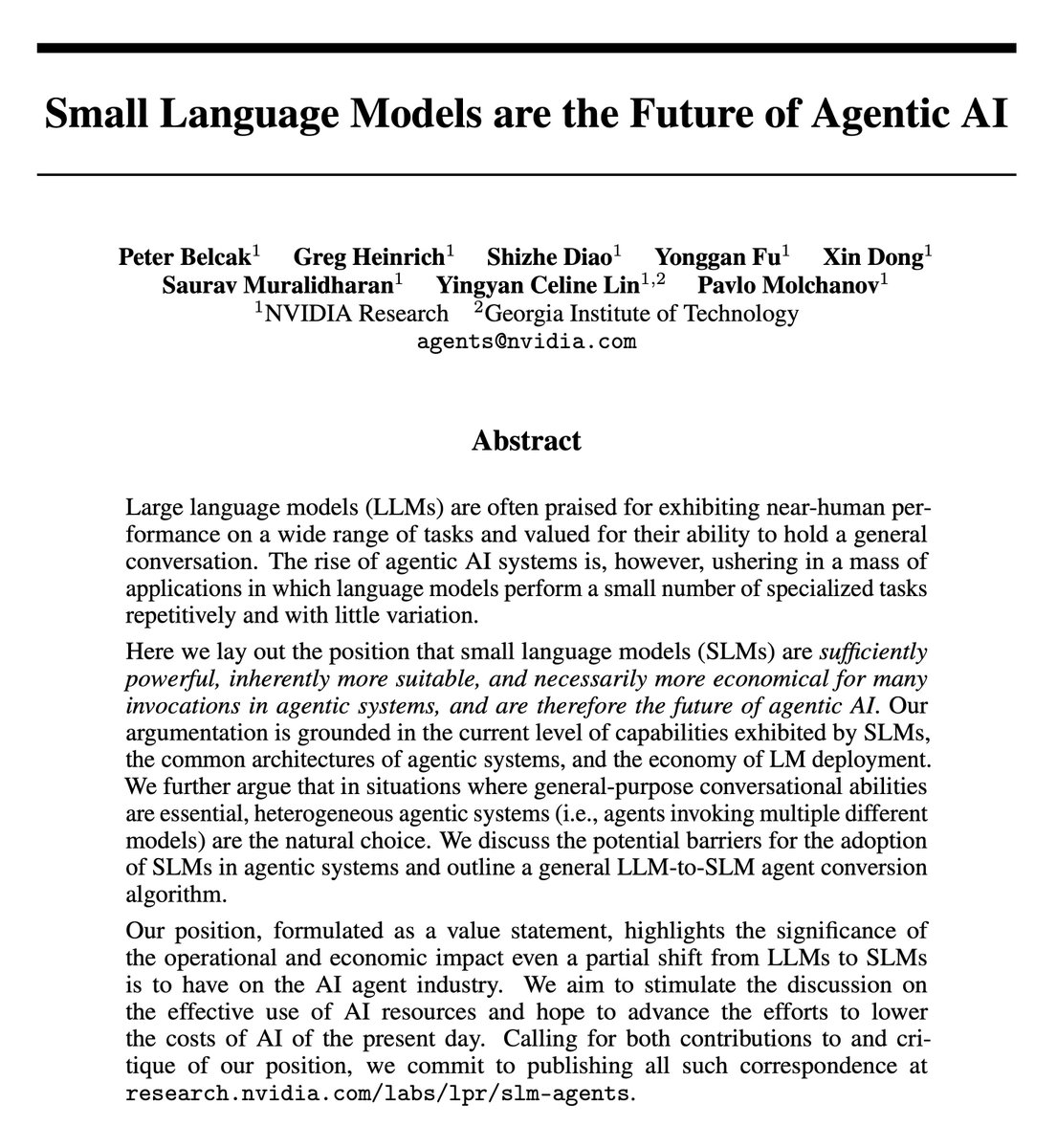

LLMs are great at generating text, but what if they need to call external tools? By enforcing a standardized JSON format and applying automatic multi-stage verification, APIGen by Salesforce AI Research provides a powerful way to generate verifiable and diverse function-calling
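To make the idea of a standardized JSON format plus staged verification concrete, here is a minimal sketch. It is not APIGen's actual code: the `{"name", "arguments"}` call shape, the `get_weather` tool, and the `TOOLS` registry are all assumptions made for illustration. Only two stages are shown (a format check and an execution check). Any further checks the real pipeline applies are left out.

```python
import json

# Hypothetical tool, for illustration only (not part of APIGen).
def get_weather(city: str) -> str:
    return f"Sunny in {city}"

# Hypothetical registry mapping tool names to callables.
TOOLS = {"get_weather": get_weather}

def check_format(raw: str):
    """Stage 1: parse the JSON and check the assumed {"name", "arguments"} shape."""
    try:
        call = json.loads(raw)
    except json.JSONDecodeError:
        return None
    if not isinstance(call, dict):
        return None
    if not isinstance(call.get("name"), str):
        return None
    if not isinstance(call.get("arguments"), dict):
        return None
    return call

def check_execution(call: dict) -> bool:
    """Stage 2: actually run the call; reject unknown tools or failing calls."""
    func = TOOLS.get(call["name"])
    if func is None:
        return False
    try:
        func(**call["arguments"])
    except Exception:
        return False
    return True

def verify(raw: str) -> bool:
    """Accept a generated call only if it passes both stages in order."""
    call = check_format(raw)
    return call is not None and check_execution(call)
```

A generated sample such as `{"name": "get_weather", "arguments": {"city": "Helsinki"}}` would pass both stages, while malformed JSON, an unknown tool name, or wrong argument names would be filtered out before reaching a dataset.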

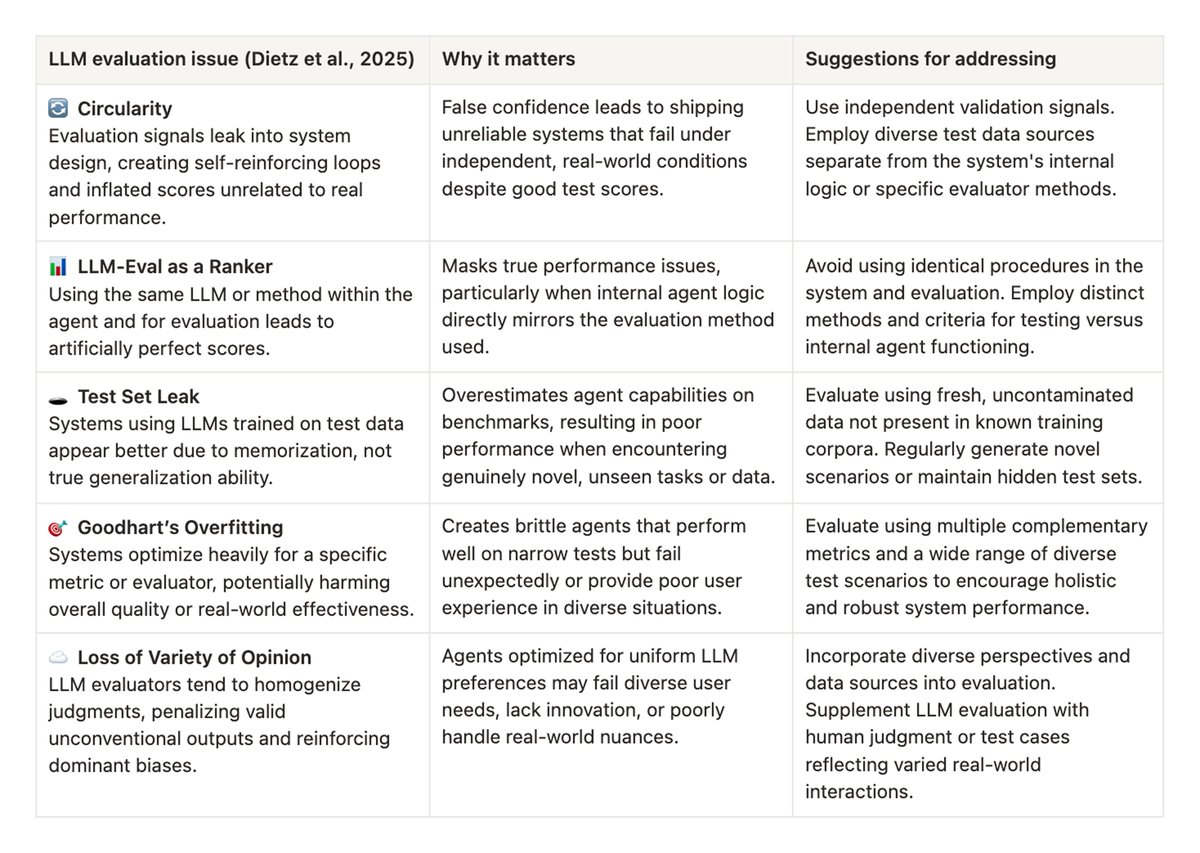

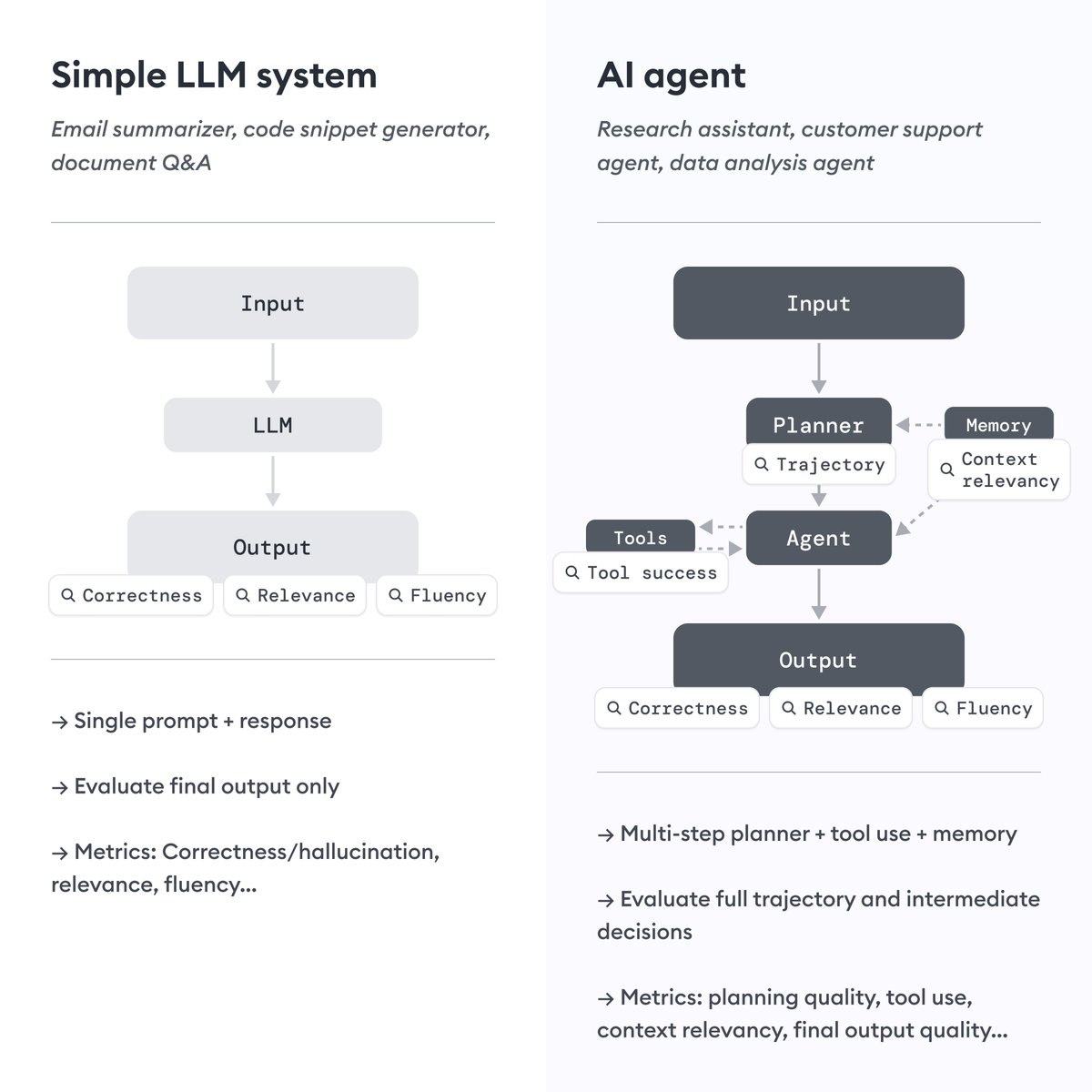

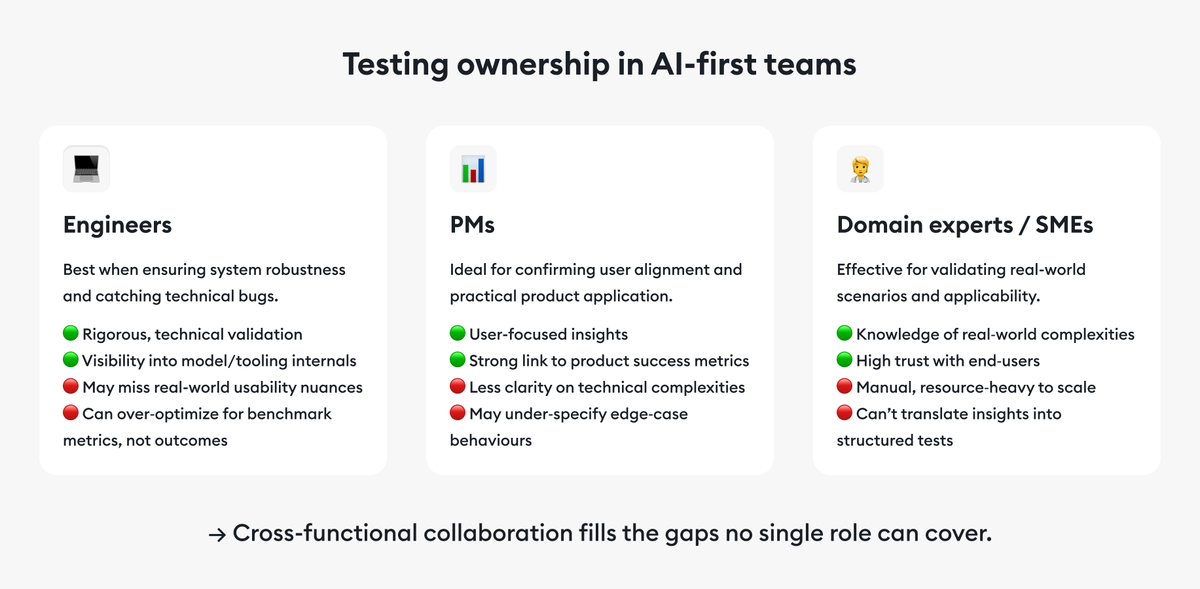

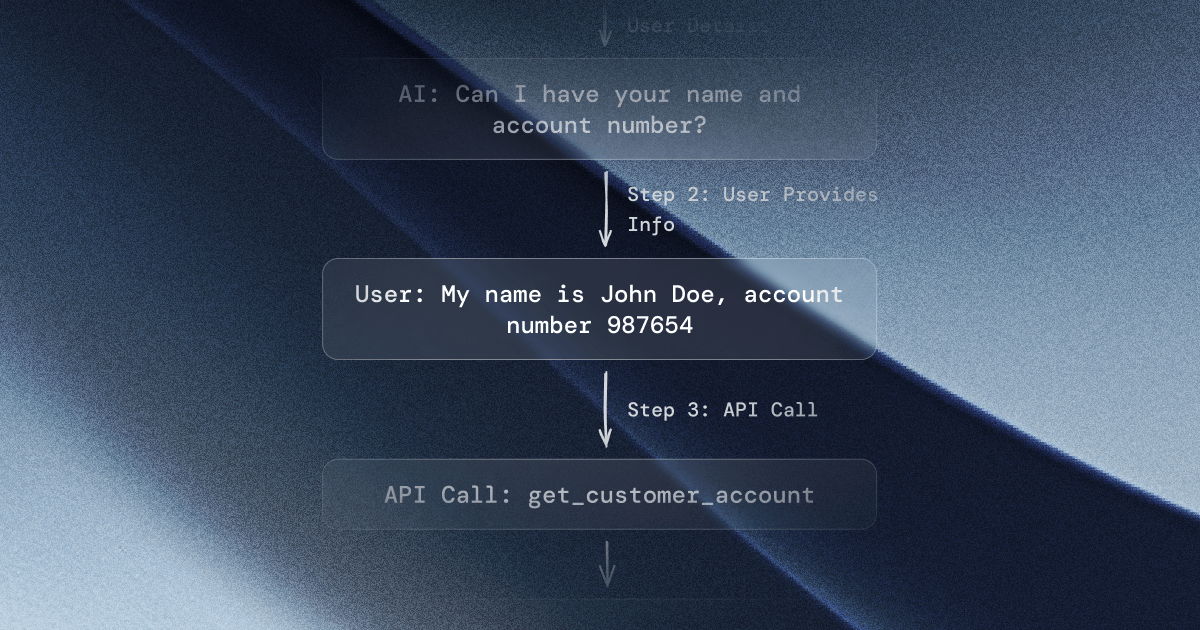

AI agents need robust test sets to handle real-world complexity—multi-turn dialogues, tool interactions, and unpredictable inputs. Yet, this is where most evaluation methods fall short. In our latest blog, Tiina Vaahtio explores how automated test generation can improve AI agent

How do you measure if your model is processing long documents correctly—or as well as possible? Our co-founder & CTO Karolus Sariola compiled a comparison of recent advances, exploring how LLMs now process context more effectively by leveraging attention. flow-ai.com/blog/advancing…