Fida Mohammad

@fmthoker

PhD student at University of Amsterdam | Deep learning for Computer Vision

ID: 988447344

https://fmthoker.github.io/

04-12-2012 09:59:37

32 Tweets

107 Followers

180 Following

ACM #Multimedia 2021: Skeleton-Contrastive 3D Action Representation Learning w/ Fida Mohammad, Hazel Doughty: arxiv.org/abs/2108.03656 We learn invariances to multiple #skeleton representations and introduce various skeleton augmentations via noise contrastive estimation 1/n

Excited to share our #ECCV2022 work "How Severe is Benchmark-Sensitivity in Video Self-Supervised Learning?" w/ Fida Mohammad, Piyush Bagad and Cees Snoek. We investigate if video self-supervised methods generalize beyond current benchmarks. Details: bpiyush.github.io/SEVERE-website/ 🧵1/5

Happy our paper 'Tubelet-Contrastive Self-Supervision for Video-Efficient Generalization' was accepted to #ICCV2023. Congrats Fida Mohammad! Preprint: arxiv.org/abs/2303.11003

Next Thursday at #ICCV2023, we'll present our work on self-supervised learning for motion-focused video representations. Work w/ Fida Mohammad and Cees Snoek. We learn similarities between videos with identical local motion dynamics but an otherwise different appearance. 1/6

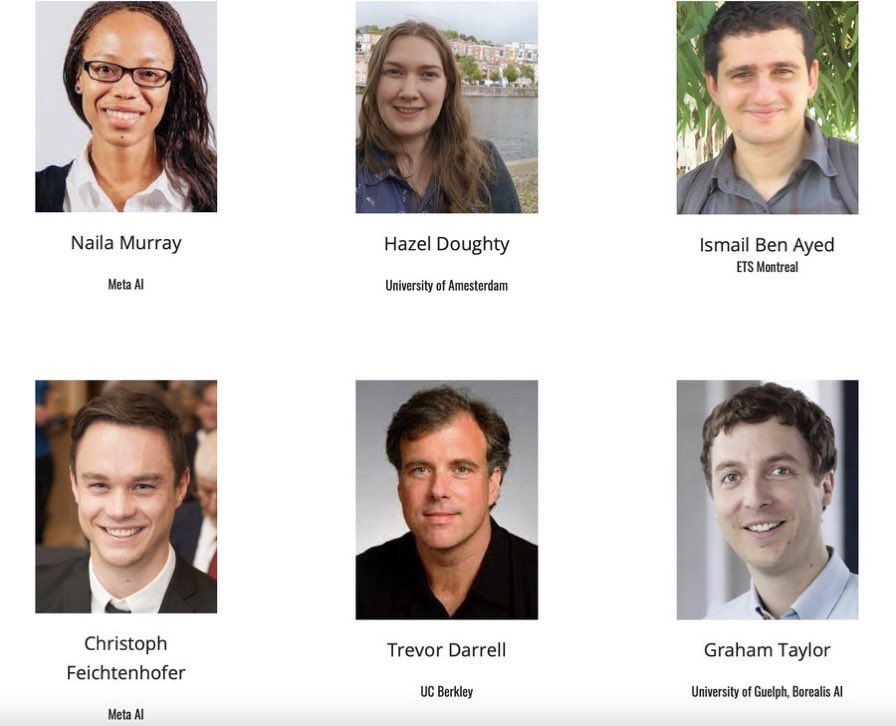

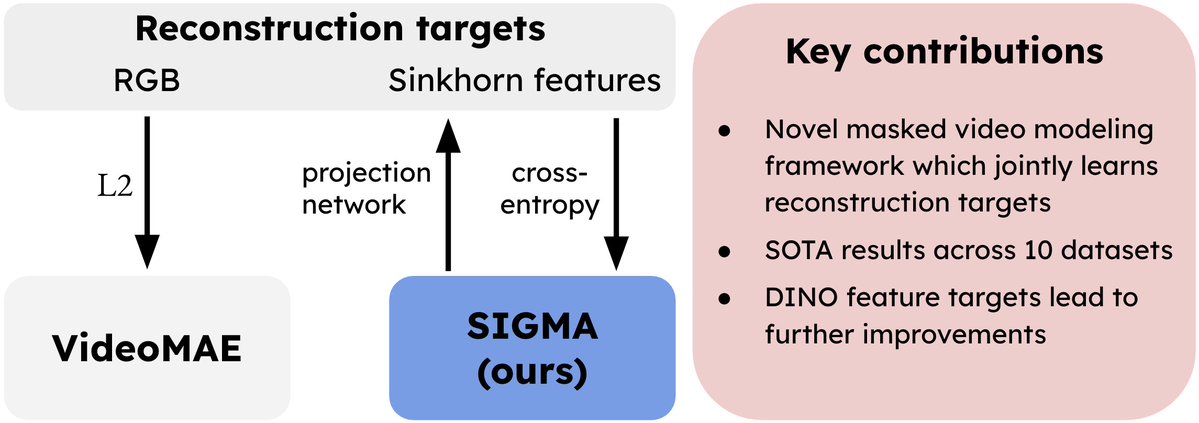

📢SIGMA: Sinkhorn-Guided Masked Video Modeling got accepted to the European Conference on Computer Vision #ECCV2024. TL;DR: Instead of using pixel targets in Video Masked Modeling, we reconstruct jointly trained features using Sinkhorn guidance, achieving SOTA. 📝Project page: quva-lab.github.io/SIGMA/ 🌐Paper:

Our #ACCV2024 Oral "LocoMotion: Learning Motion-Focused Video-Language Representations" w/ Fida Mohammad and Cees Snoek is now on arXiv: arxiv.org/abs/2410.12018 We remove the spatial focus of video-language representations and instead train representations to have a motion focus.

Super excited to share that 4 papers from our KAUST Computer Vision Lab (IVUL) got into #CVPR2025! Huge congrats to all the authors and our amazing collaborators! 🎉👏 #CVPR2025 KAUST Research

Just released "DiffCLIP", extending Differential Attention proposed by Tianzhu Ye to CLIP models - replacing both visual & text encoder attention with the differential attention mechanism! TL;DR: Consistent improvements across all tasks with only 0.003% extra parameters!