Ghada Sokar

@g_sokar

ID: 1175712956807598080

22-09-2019 10:06:36

608 Tweets

416 Followers

363 Following

Time for celebrating🎉 One paper accepted for an oral presentation at #ICML2023! I was very lucky to work on this project with amazing collaborators utku, Pablo Samuel Castro, Rishabh Agarwal! Details soon✨ See you in Hawaii🏝️

Don't miss Bram Grooten's presentation at #ICLR2023 this Friday, where we will be sharing our work on how to deal with noisy environments in RL.

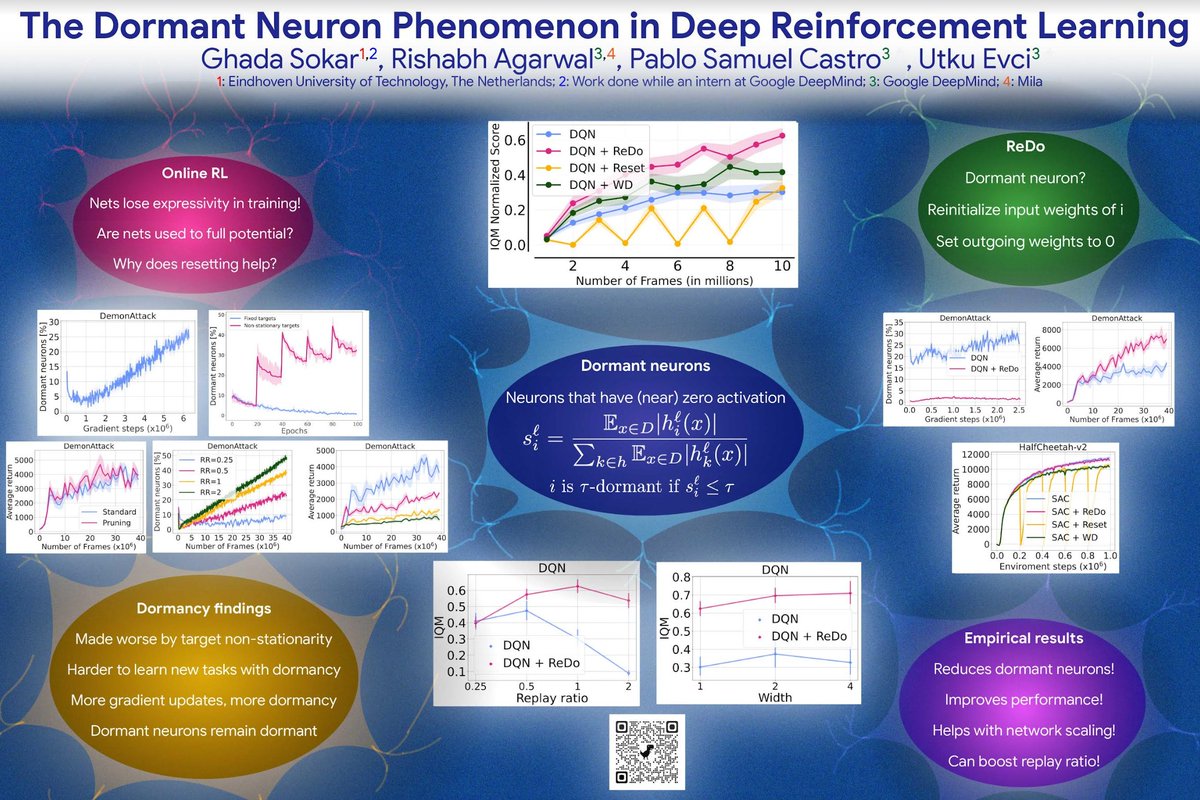

2. The Dormant Neuron Phenomenon in Deep RL (led by Ghada Sokar w/t utku and Pablo Samuel Castro). Reviewers loved this work and it was accepted as an oral. x.com/pcastr/status/…

with utku and Rishabh Agarwal i'll be presenting work led by Ghada Sokar on the dormant neuron phenomenon in deep RL. utku & i give oral on tue at 5:30pm in Ballroom C then with rishabh we'll present the poster in sess 3, wed at 11am, Exhibit Hall 1 #709 x.com/pcastr/status/…