gal vardi

@galvardi

ID: 1539689263

22-06-2013 22:55:06

10 Tweets

60 Followers

151 Following

Glad to share our new work: "Deconstructing Data Reconstruction: Multiclass, Weight Decay and General Losses" arxiv.org/abs/2307.01827 About trainset reconstruction in multiclass & regression tasks + perks (w/ Gon Buzaglo, Gilad Yehudai, gal vardi, Yakir Oz, Yaniv Nikankin, M. Irani) 🧵1/5

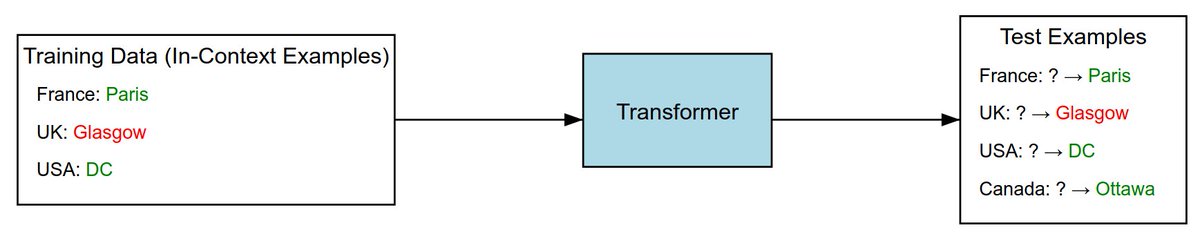

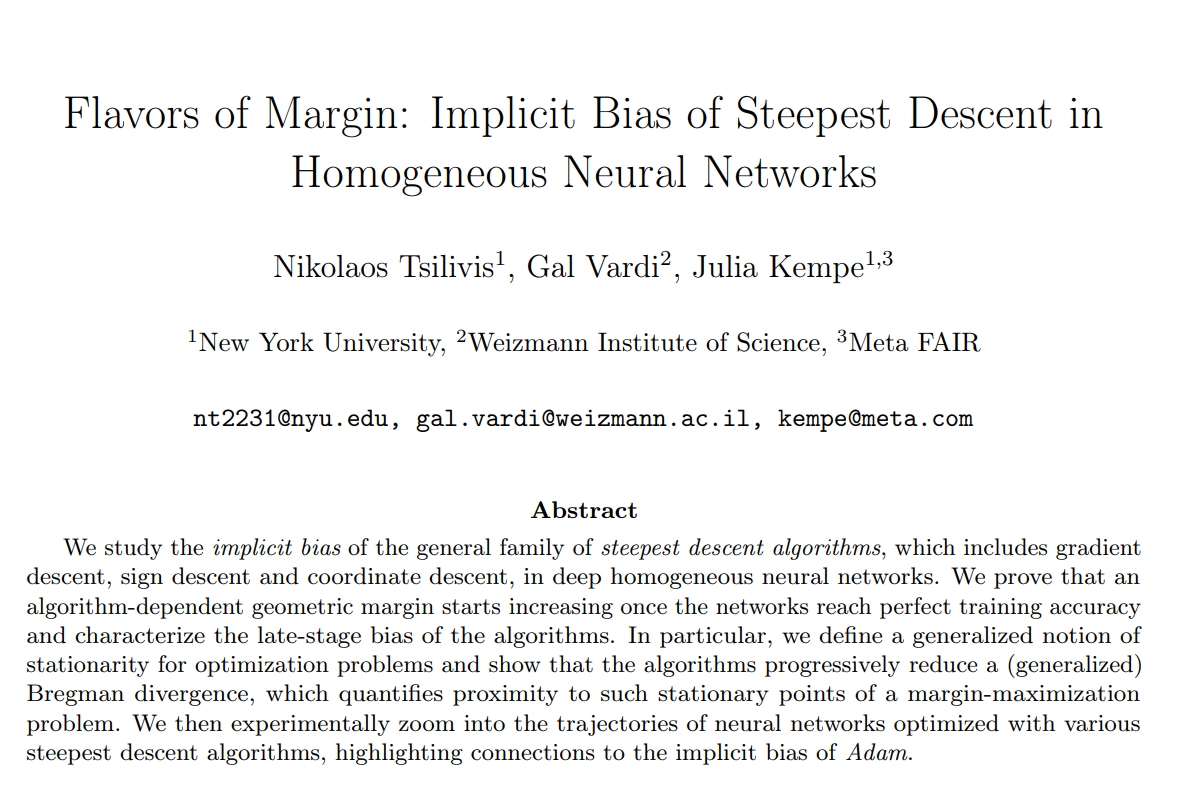

Optimization induces implicit bias. We study general steepest descent in homogeneous nets & show (generalized) convergence to a (generalized) KKT pt. Adam presents a curious case between l2 & l1: arxiv.org/abs/2410.22069 With Nikos Tsilivis & gal vardi, NYU Center for Data Science, AI at Meta

Happy to share our work on: "A Theory of Learning with Autoregressive Chain of Thought" arxiv.org/abs/2503.07932 Joint work with great collaborators: gal vardi, Adam Block, Surbhi Goel, Zhiyuan Li, Theodor Misiakiewicz, Nati Srebro