Gintare Karolina Dziugaite

@gkdziugaite

Sr Research Scientist at Google DeepMind, Toronto. Member, Mila. Adjunct, McGill CS. PhD Machine Learning & MASt Applied Math (Cambridge), BSc Math (Warwick).

ID: 954436574468624384

https://gkdz.org

19-01-2018 19:33:06

83 Tweets

3.3K Followers

114 Following

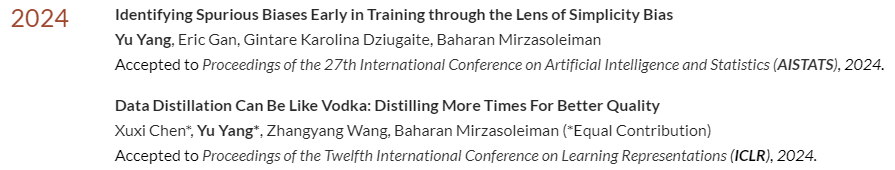

🎉 Two of my papers have been accepted this week at #ICLR2024 & #AISTATS! Big thanks and congrats to co-authors Xuxi Chen & Eric Gan, mentors Atlas Wang & Gintare Karolina Dziugaite, and especially my advisor Baharan Mirzasoleiman! 🙏 More details on both papers after the ICML deadline!

thrilled this paper was accepted to #ICML2024, looking forward to chatting about this with many of you in vienna! camera-ready version available at arxiv.org/abs/2402.08609 w/ Johan S. Obando 👍🏽, Ghada Sokar, Timon Willi, Clare Lyle, Jesse Farebrother, Jakob Foerster, Gintare Karolina Dziugaite & doina precup

Excited to present our spotlight paper on MoEs in RL today at #ICML2024! Me, Johan S. Obando 👍🏽, Pablo Samuel Castro, and Jesse Farebrother are looking forward to chatting with you! Poster #1207 Hall C 4-9 at 1:30-3:00 pm

We are hiring Applied Interpretability researchers on the GDM Mech Interp Team!🧵 If interpretability is ever going to be useful, we need it to be applied at the frontier. Come work with Neel Nanda, the Google DeepMind AGI Safety team, and me: apply by 28th February as a

I will be travelling to Singapore 🇸🇬 this week for the ICLR 2025 Workshop on Sparsity in LLMs (SLLM) that I'm co-organizing! We have an exciting lineup of invited speakers and panelists including Dan Alistarh, Gintare Karolina Dziugaite, Pavlo Molchanov, Vithu Thangarasa, Yuandong Tian and Amir Yazdan.

Sparse LLM workshop will run on Sunday with two poster sessions, a mentoring session, 4 spotlight talks, 4 invited talks and a panel session. We'll host an amazing lineup of researchers: Dan Alistarh, Vithu Thangarasa, Yuandong Tian, Amir Yazdan, Gintare Karolina Dziugaite, Olivia Hsu, Pavlo Molchanov, Yang Yu