Guy Yariv

@guy_yariv

Generative AI Researcher | Research Scientist Intern @ Meta | PhD Candidate @ HUJI

ID: 1596815042611232771

https://guyyariv.github.io/ 27-11-2022 10:36:03

99 Tweets

146 Followers

120 Following

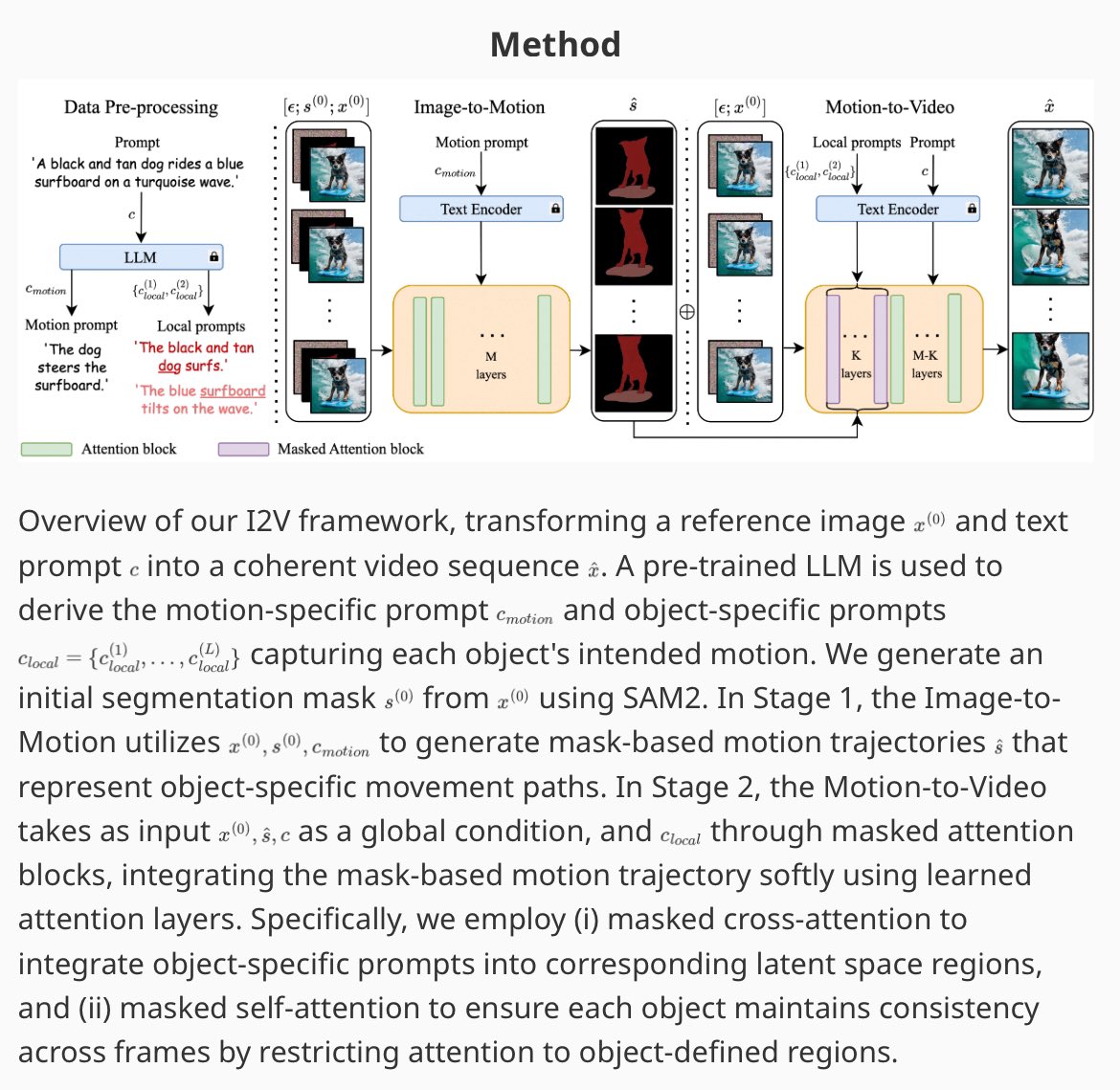

VideoJAM is our new framework for improved motion generation from AI at Meta. We show that video generators struggle with motion because the training objective favors appearance over dynamics. VideoJAM directly addresses this **without any extra data or scaling** 👇🧵

Introducing RewardSDS, a text-to-3D score distillation method that enhances SDS by using reward-weighted sampling to prioritize noise samples based on alignment scores, achieving fine-grained user alignment. Led by the great Itay Chachy

Beyond excited to share FlowMo! We found that the latent representations of video models implicitly encode motion information and can guide the model toward coherent motion at inference time. Very proud of Ariel Shaulov and Itay Hazan for this work! Plus, it’s open source! 🥳

Excited to share our recent work on corrector sampling in language models! A new sampling method that mitigates error accumulation by iteratively revisiting tokens in a window of previously generated text. With: Neta Shaul, Uriel Singer, Yaron Lipman. Link: arxiv.org/abs/2506.06215

[1/n] New paper alert! 🚀 Excited to introduce **Transition Matching (TM)**! We're replacing short-timestep kernels from Flow Matching/Diffusion with... a generative model 🤯, achieving SOTA text-to-image generation! Uriel Singer, Itai Gat, Yaron Lipman