Alex Hägele

@haeggee

Fellow @AnthropicAI + PhD Student in Machine Learning @ICepfl MLO. MSc/BSc from @ETH_en. Previously: Student Researcher @Apple MLR

ID: 1212418353094000640

https://haeggee.github.io/ 01-01-2020 17:00:47

271 Tweets

512 Followers

634 Following

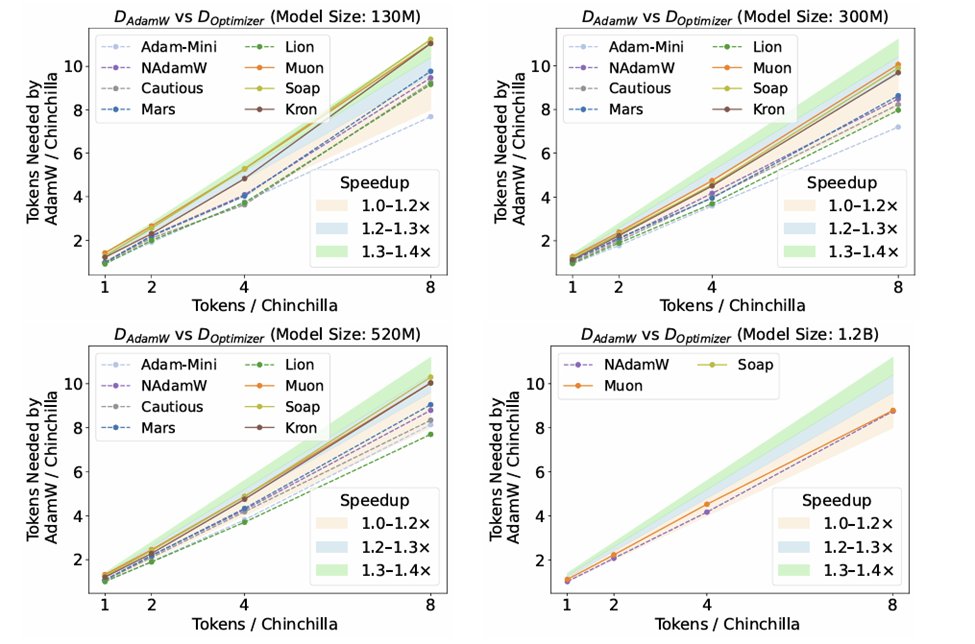

Lucas Beyer (bl16), Alex Hägele, EPFL, Andrei Semenov: I got excited about the results we got in the AdEMAMix paper (I’m the first name on that paper). Around that time Andrei wanted to benchmark some of the recent optimizers. I naturally suggested AdEMAMix as I was curious how it would compare to others.