haley keglovits

@haleykegl

no longer using this platform, sorry if I ignore you // phd student @brownclps studying cog control @badrelab // previously @cal @ccnlab // go bears // she/her

ID: 2239734584

http://haleyk.github.io

10-12-2013 20:34:35

323 Tweets

194 Followers

323 Following

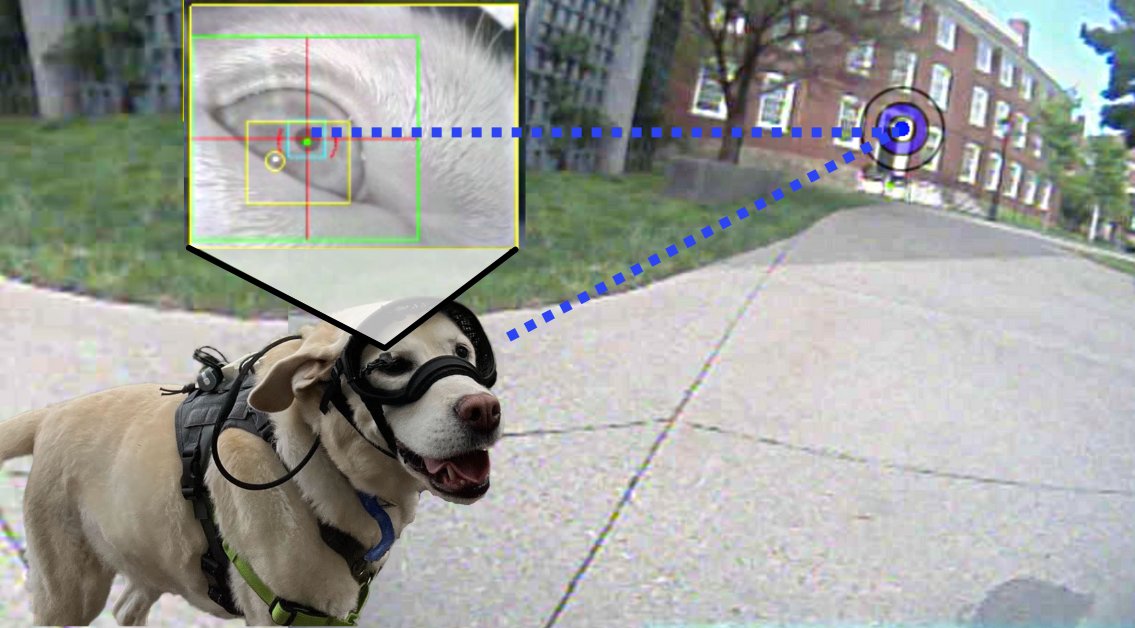

🔍Recent publication highlight: our grad student, Madeline Pelgrim, and PI, Dr. Daphna Buchsbaum, recently published in the Proceedings of the Cognitive Science Society! They analyze what dogs see & pay attention to based on eye-tracking data from a walk outdoors! 👀🌳 escholarship.org/uc/item/769022…

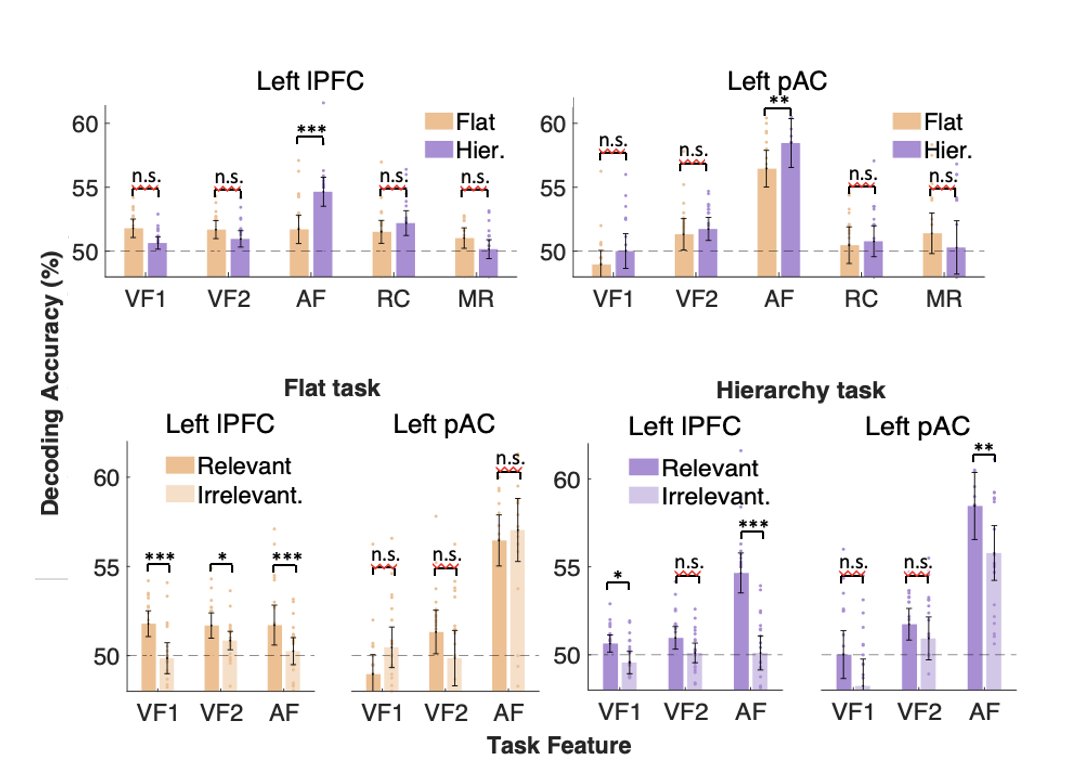

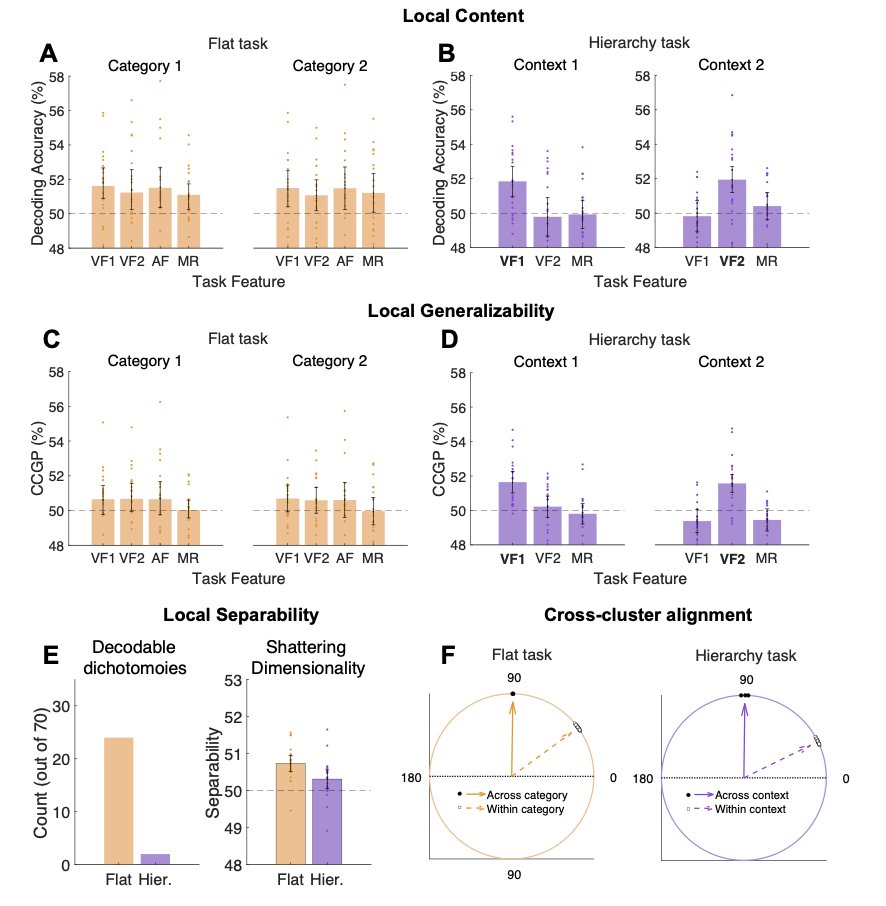

As we have previously shown, lPFC representations are hard to study with fMRI, with poor pattern reliability and small effects. haley keglovits tackled this head-on with deep sampling, heroically collecting 200+ minutes of fMRI data on each task from 20 subjects.