Tanveer Hannan

@hannan_tanveer

PhD at LMU Munich, Multimodal Deep Learning on Computer Vision and NLP

ID: 2241926006

https://tanveer81.github.io/

12-12-2013 06:43:51

122 Tweets

37 Followers

223 Following

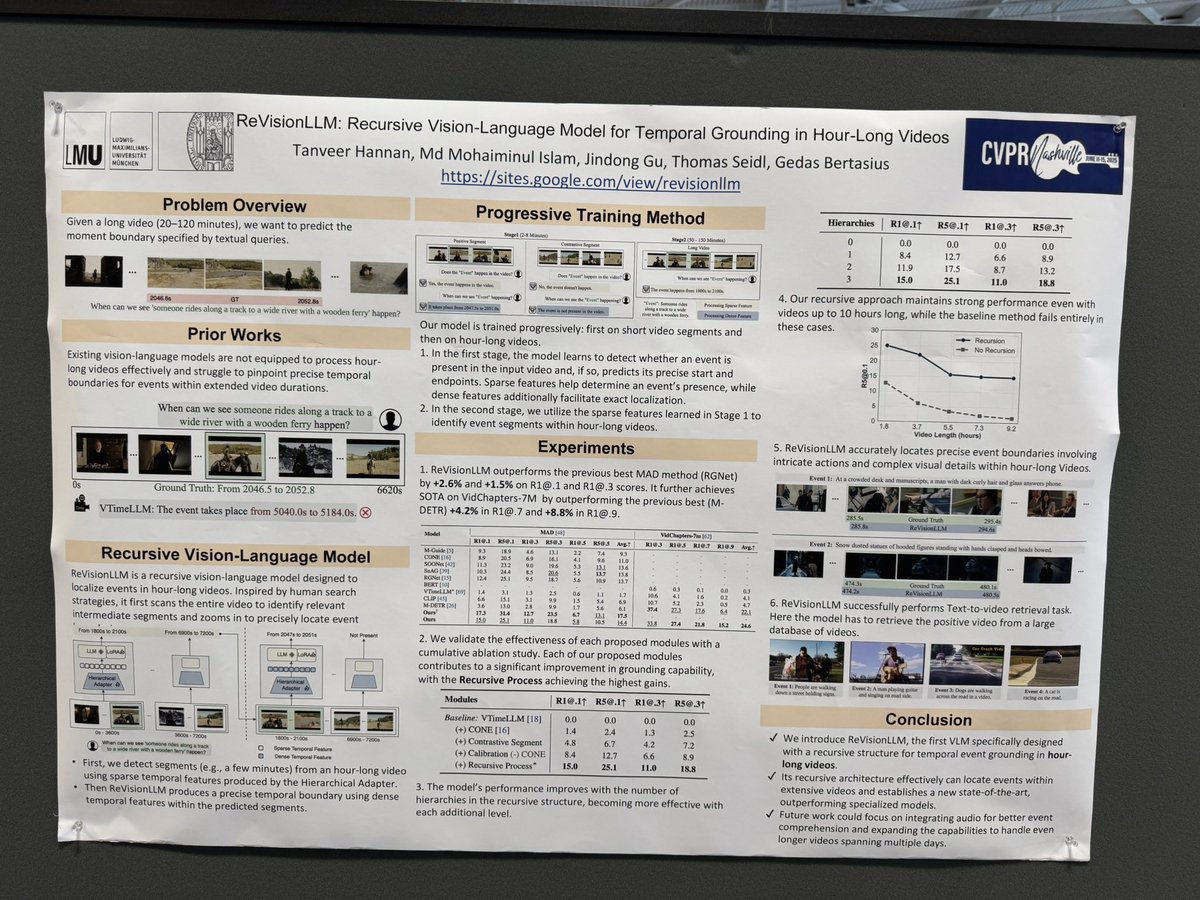

Had a great time presenting at the GenAI session @CiscoMeraki. Thanks to Nahid Alam @ CVPR 2025 for the invite 🙏 Catch us at #CVPR2025:
📌 BIMBA: arxiv.org/abs/2503.09590 (June 15, 4–6PM, Poster #282)
📌 ReVisionLLM: arxiv.org/abs/2411.14901 (June 14, 5–7PM, Poster #307)
Gedas Bertasius Tanveer Hannan

Great to see a lot of interest in ReVisionLLM from the video understanding community! If you missed it, check out arxiv.org/abs/2411.14901 Tanveer Hannan