Hao Peng

@haopeng_nlp

Assistant Professor at UIUC CS

ID: 1316236065271758849

14-10-2020 04:35:22

35 Tweets

573 Followers

100 Following

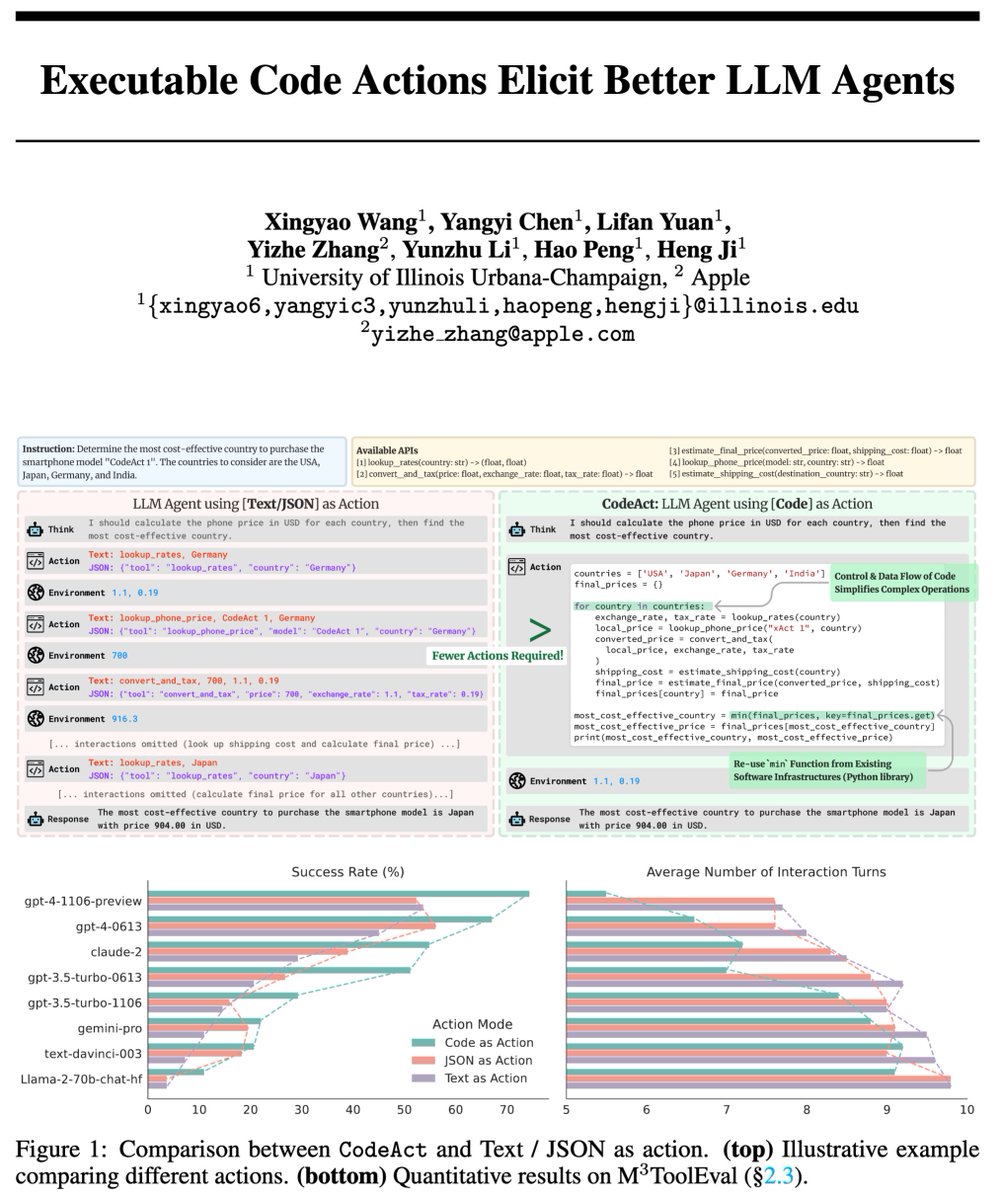

This is joint work with Yangyi Chen (on job market), Lifan Yuan, Yizhe Zhang, Yunzhu Li, Hao Peng, and @elgreco_winter. Check out our paper for more details: arxiv.org/abs/2402.01030 10/

I'm joining the University of Illinois (UIUC) this fall as an Assistant Professor in the iSchool, with an affiliation in Computer Science! My research passion lies at the intersection of NLP and the medical domain. I'm recruiting students for 2025! Check more info: yueguo-50.github.io.

🚨 I’m on the job market this year! 🚨 I’m completing my Allen School Ph.D. (2025), where I identify and tackle key LLM limitations like hallucinations by developing new models—Retrieval-Augmented LMs—to build more reliable real-world AI systems. Learn more in the thread! 🧵