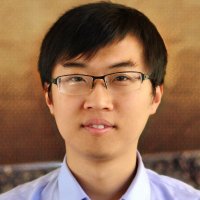

Hao Su

@haosu_twitr

Associate Professor @UCSanDiego. Computer Vision, Graphics, Embodied AI, Robotics. Co-Founder of hillbot.ai @hillbot_ai

ID: 1422688248950788101

https://cseweb.ucsd.edu/~haosu/

03-08-2021 22:38:18

240 Tweets

6.6K Followers

374 Following

Some exciting news: ManiSkill/SAPIEN now has experimental support for macOS with CPU simulation and rendering. You can now do your local debugging/development on a Mac. The example shown here is a Push-T policy, trained on my 4090, running on my Mac! Try it now: maniskill.readthedocs.io/en/latest/user…

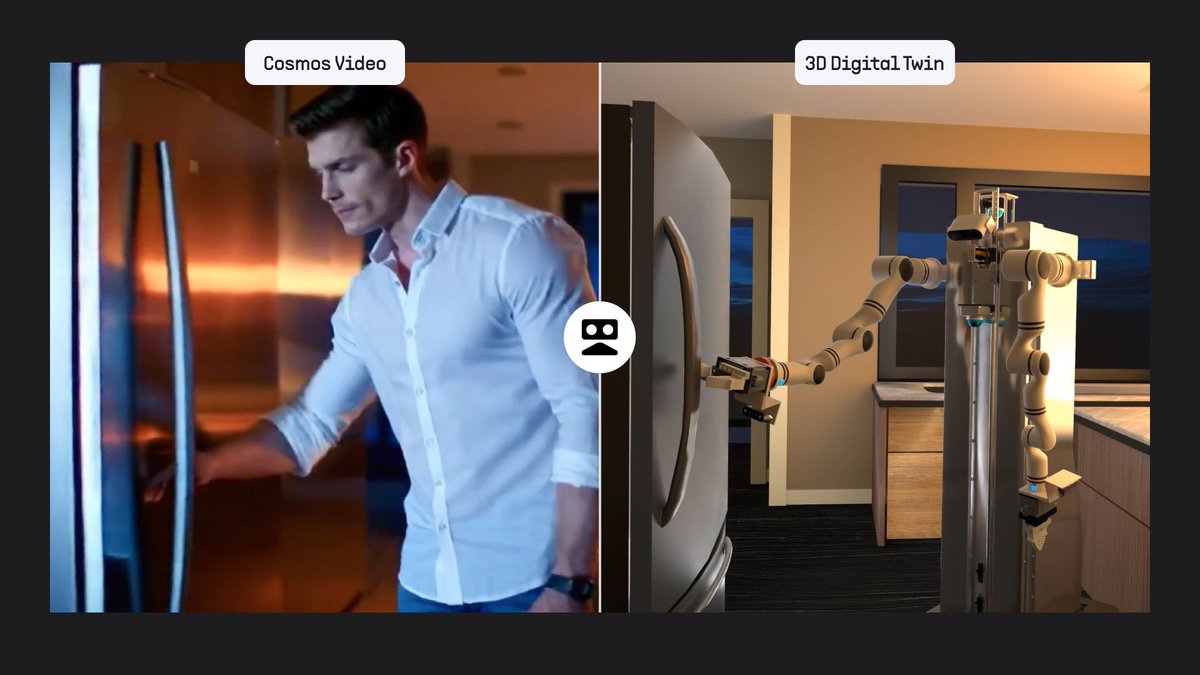

See Nicklas Hansen's amazing work on robot perception, planning, and action in dynamic environments (NVIDIA Graduate Research Fellow and PhD candidate at UC San Diego). ✨🤖 #NationalRoboticsWeek Learn more about Nicklas's work. ➡️ nvda.ws/4lv85eb

🌎🌏🌍 We are organizing a workshop on Building Physically Plausible World Models at ICML Conference 2025! We have a great lineup of speakers, and are inviting you to submit your papers with a May 10 deadline. Website: physical-world-modeling.github.io

Adria Lopez (@alopeze99): 🤖 Introducing DEMO3: our new model-based RL framework for multi-stage robotic manipulation from visual inputs and sparse rewards. 🧵🔽

📜 Paper: arxiv.org/abs/2503.01837

🌍 Project Page: adrialopezescoriza.github.io/demo3/

💻 Code: github.com/adrialopezesco…

![Adria Lopez (@alopeze99) on Twitter photo](https://pbs.twimg.com/media/GlReU7TXEAAn918.jpg)