Hassan Sajjad

@hassaan84s

Associate Professor - Dalhousie University, Halifax, Canada

NLP, deep learning, explainable AI

ID: 415790425

https://hsajjad.github.io/ 18-11-2011 20:32:42

358 Tweets

448 Followers

104 Following

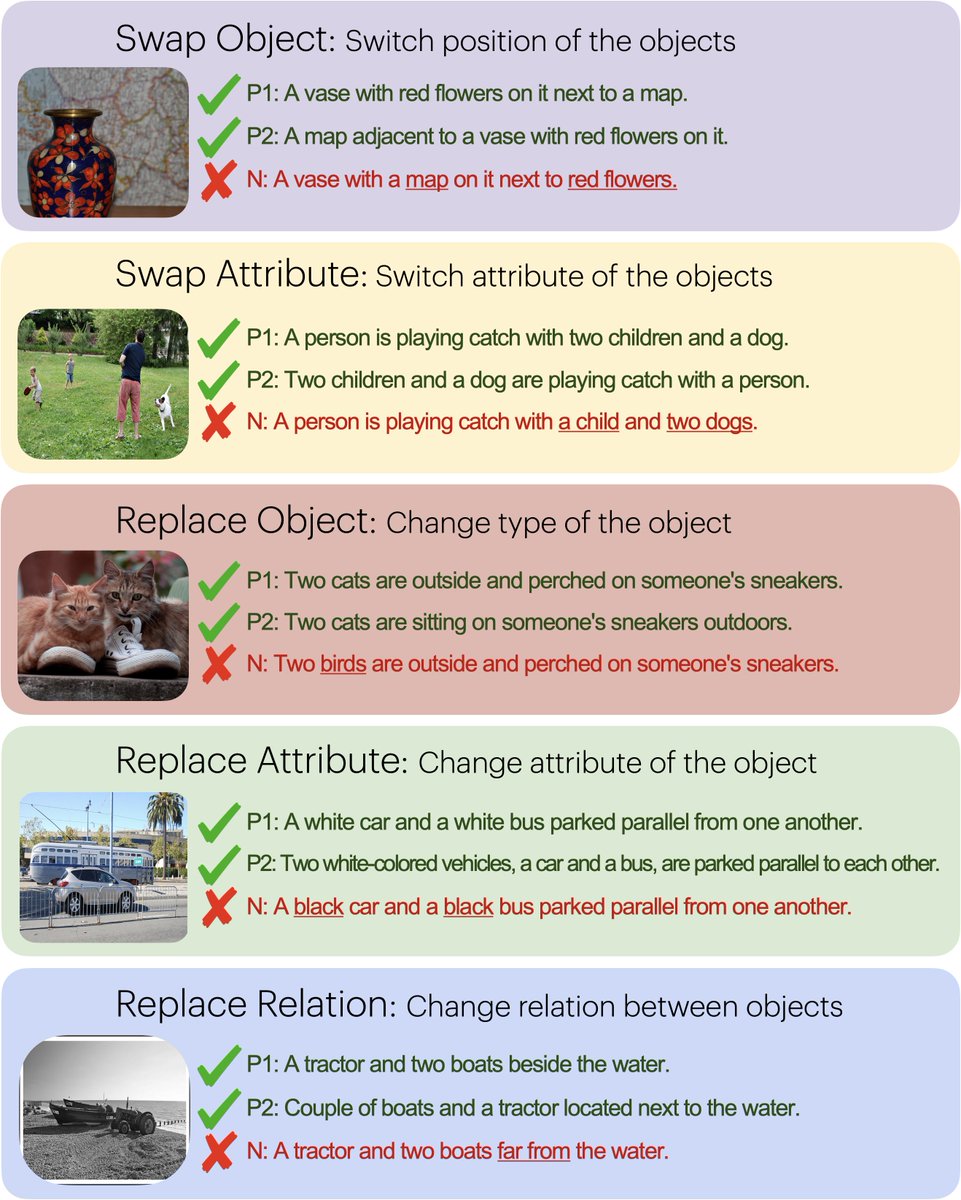

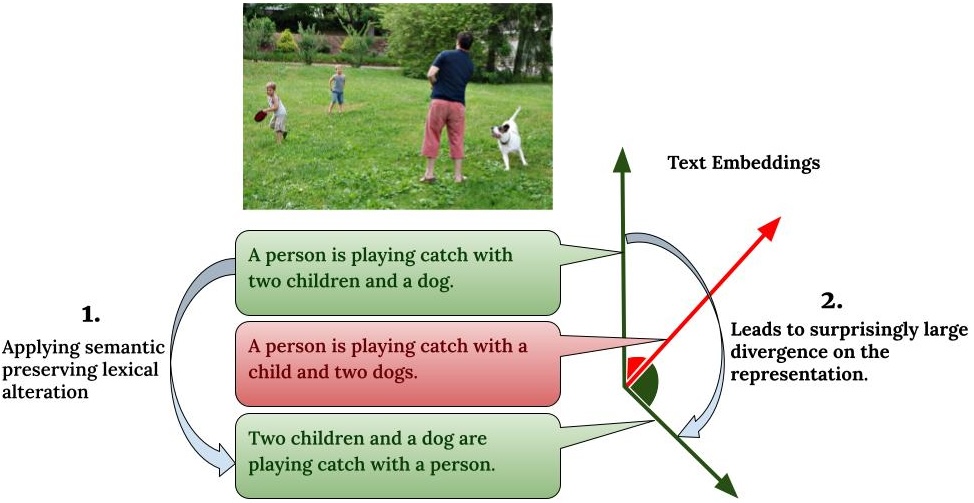

10/n 📖 Full paper 🔗 Explore our comprehensive findings! Paper: arxiv.org/abs/2406.11171 Dataset: huggingface.co/datasets/Aman-… Exciting collaboration with Sri Harsha Chandramouli S Sastry, Evangelos Milios, sageev Hassan Sajjad

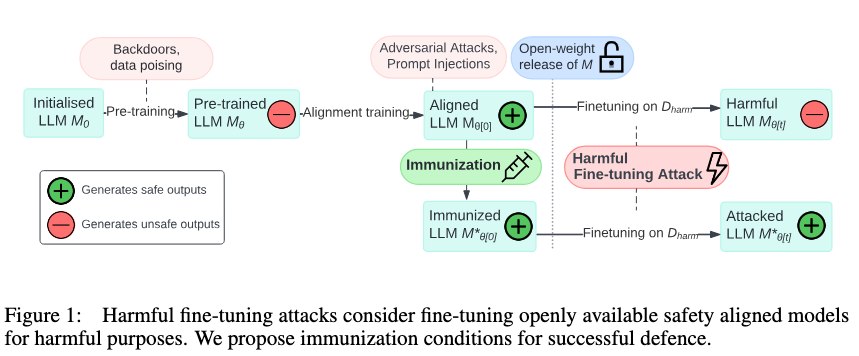

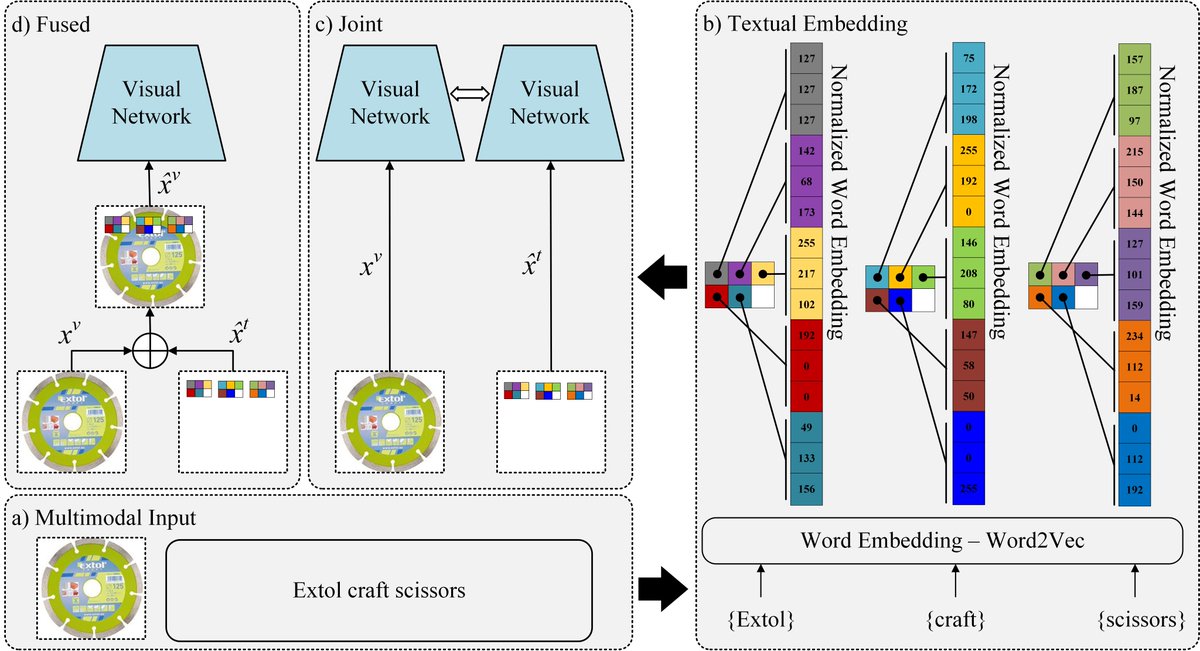

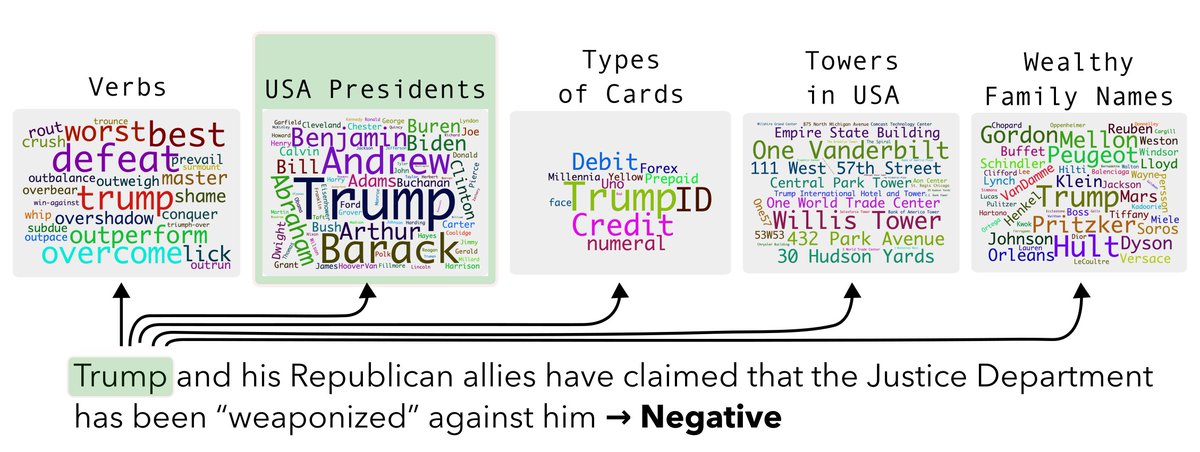

I am excited to share our two papers on Safe and Trustworthy AI accepted at EMNLP 2024 #EMNLP2024. Thanks to my awesome students and collaborators. Latent Concept-based Explanation of NLP Models: arxiv.org/pdf/2404.12545 Immunization against harmful fine-tuning attacks