He He

@hhexiy

NLP researcher. Assistant Professor at NYU CS & CDS.

ID: 806193181972779008

http://hhexiy.github.io 06-12-2016 17:46:47

123 Tweets

6.6K Followers

382 Following

Had a lot of fun poking holes in how LLMs capture diverse preferences with Chuanyang Jin, Hannah Rose Kirk and He He 🧐! Not all is lost though: a simple regularizing term can help prevent overfitting to binary judgments. Check out our paper at SoLaR @ NeurIPS 2024 to find out more 😉

A real honour and career dream that PRISM has won a NeurIPS Conference best paper award! 🌈 One year ago I was sat in a 13,000+ person audience at NeurIPS '23, having just finished data collection. Safe to say I've gone from feeling #stressed to very #blessed 😁

Unbelievable. This quote is blatantly false and unnecessary for the argument. And she surely expected the backlash, hence the patronizing NOTE. This is racism, not "cultural generalization". NeurIPS Conference

When benchmarks talk, do LLMs listen? Our new paper shows that evaluating code LLMs with interactive feedback significantly affects model performance compared to standard static benchmarks! Work w/ Ryan Shar, Jacob Pfau, Ameet Talwalkar, He He, and Valerie Chen! [1/6]

Life update: I'm starting as faculty at Boston University in 2026! BU has SCHEMES for LM interpretability & analysis, so I couldn't be more pumped to join a burgeoning supergroup w/ Najoung Kim 🫠 and Aaron Mueller. Looking for my first students, so apply and reach out!

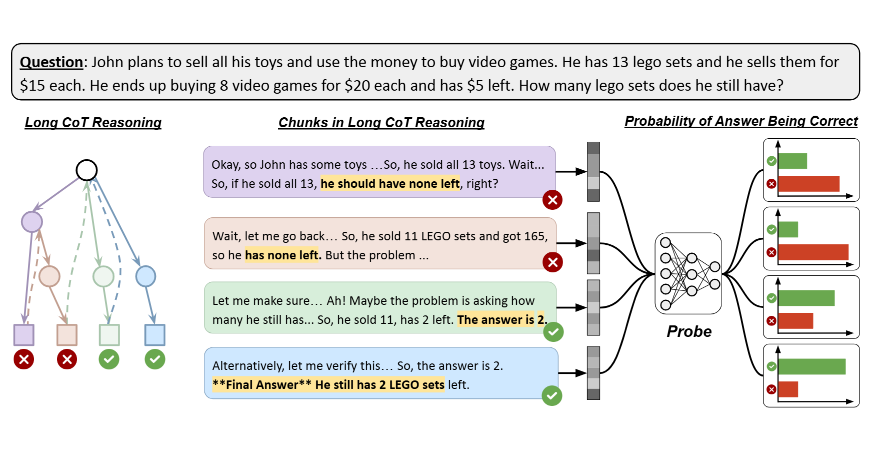

Do reasoning models know when their answers are right?🤔 Really excited about this work led by Anqi and Yulin Chen. Check out this thread below!

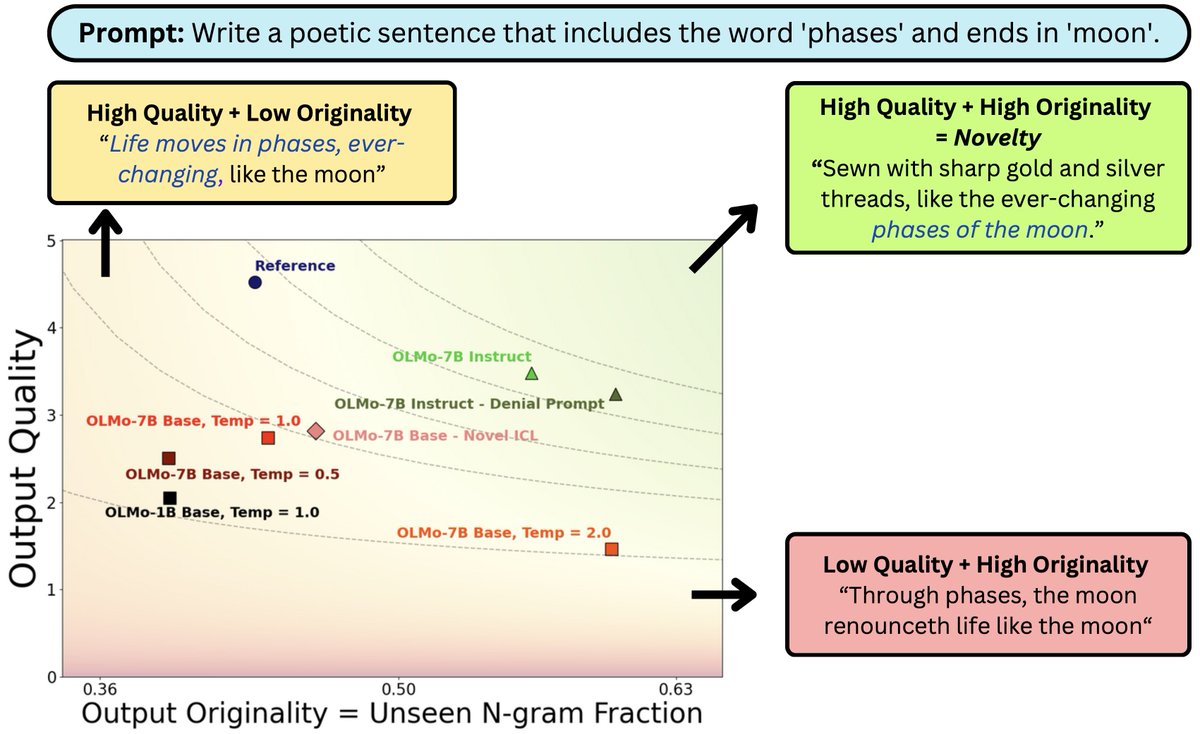

What does it mean for #LLM output to be novel? In work w/ John (Yueh-Han) Chen, Jane Pan, Valerie Chen, and He He, we argue it needs to be both original and high quality. While prompting tricks trade one for the other, better models (scaling/post-training) can shift the novelty frontier 🧵

Wrapped up Stanford CS336 (Language Models from Scratch), taught with an amazing team: Tatsunori Hashimoto, Marcel Rød, Neil Band, and Rohith Kuditipudi. Researchers are becoming detached from the technical details of how LMs work. In CS336, we try to fix that by having students build everything:

Talking to ChatGPT isn’t like talking to a collaborator yet. It doesn’t track what you really want to do—only what you just said. Check out work led by John (Yueh-Han) Chen and @rico_angel that shows how attackers can exploit this, and a simple fix: just look at more context!