Jaehoon Lee

@hoonkp

Researcher in machine learning with background in physics; Member of Technical Staff @AnthropicAI; Prev. Research scientist @GoogleDeepMind/@GoogleBrain.

ID: 90276706

http://jaehlee.github.io

15-11-2009 23:47:33

242 Tweets

1.1K Followers

662 Following

the deadline for applying to the OpenAI residency is tomorrow. if you are an engineer or researcher from any field who wants to start working on AI, please consider applying. many of our best people have come from this program! boards.greenhouse.io/openai/jobs/46…

Very interesting paper by James Sully, Dan Roberts and Alex Maloney investigating the theoretical origin of neural scaling laws! Happy to read the 97-page paper and learn about new tools in RMT and insights into how the statistics of natural datasets are translated into power-law scaling.

Jasper talking about the ongoing journey towards BIG Gaussian processes! A team effort with Jaehoon Lee, Ben Adlam, Shreyas Padhy and Zachary Nado. Join us at the NeurIPS GP workshop neurips.cc/virtual/2022/w…

Analyzing training instabilities in Transformers has been made more accessible by awesome work from Mitchell Wortsman during his internship at Google DeepMind! We encourage you to think more about the fundamental causes and effects of training instabilities as models scale up!

Ever wonder why we don’t train LLMs over highly compressed text? Turns out it’s hard to make it work. Check out our paper for some progress that we’re hoping others can build on. arxiv.org/abs/2404.03626 With Brian Lester, Jaehoon Lee, Alex Alemi, Jeffrey Pennington, Adam Roberts, Jascha Sohl-Dickstein

Is Kevin onto something? We found that LLMs can struggle to understand compressed text, unless you do some specific tricks. Check out arxiv.org/abs/2404.03626 and help Jaehoon Lee, Alex Alemi, Jeffrey Pennington, Adam Roberts, Jascha Sohl-Dickstein, Noah Constant and I make Kevin’s dream a reality.

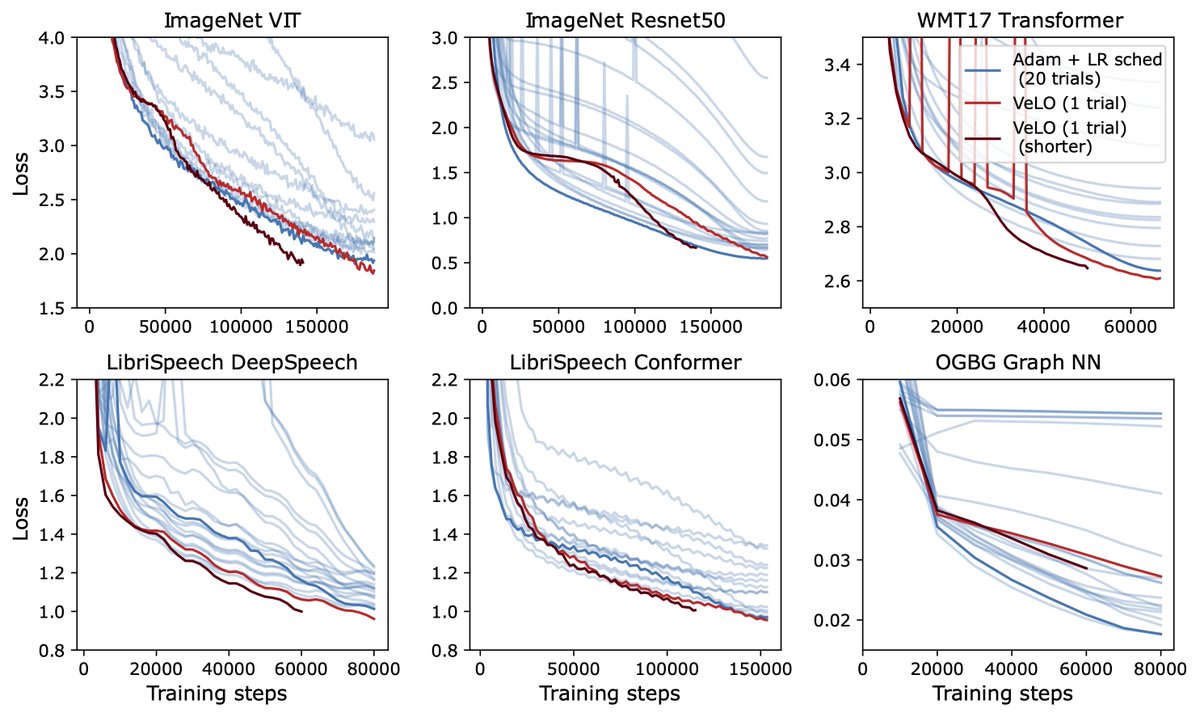

Tour de force led by Katie Everett investigating the interplay between neural network parameterization and optimizers; the thread/paper includes a lot of gems (theoretical insights, extensive empirics, and cool new tricks)!