Hrishi

@hrishioa

Building artificially intelligent bridges at Southbridge, prev-CTO Greywing (YC W21).

Chop wood carry water.

ID: 1548645654

https://olickel.com

26-06-2013 17:14:03

3.3K Tweets

10.1K Followers

2.2K Following

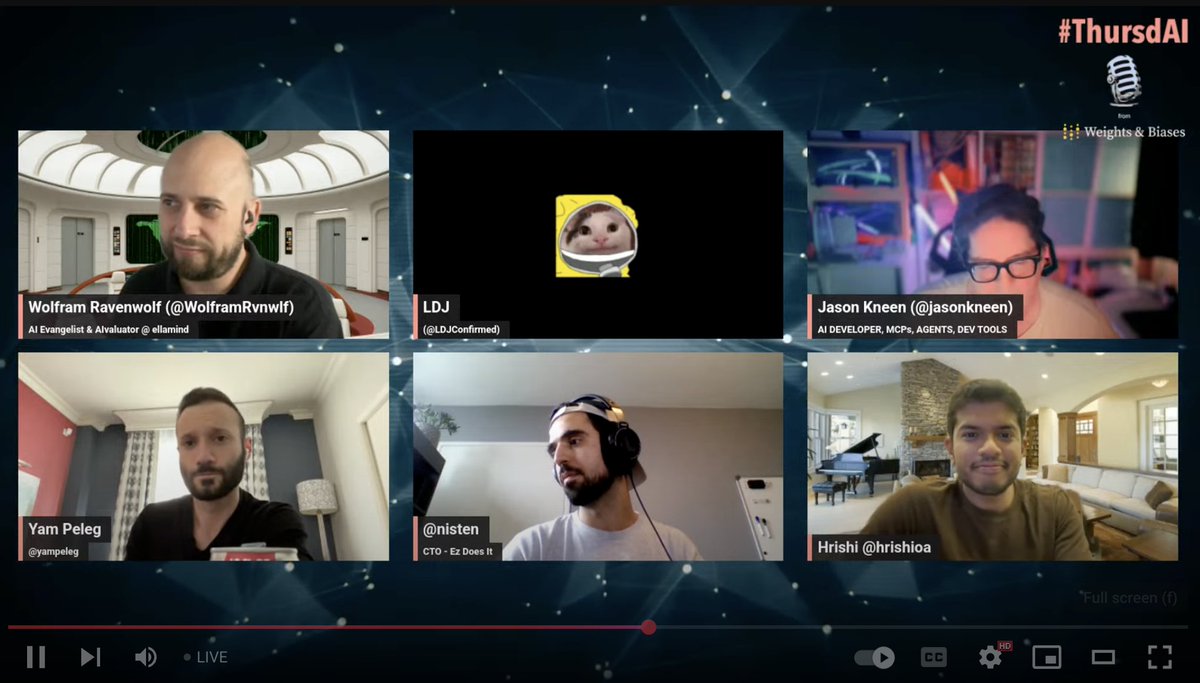

Thanks to Wolfram Ravenwolf for the session. This was awesome! nisten🇨🇦e/acc, Yam Peleg, LDJ, Jason Kneen

Fun to see Hrishi share some of the adventures we have at Southbridge haha

Humans vibing over CLIs is what I needed at 1am SGT. Hrishi, nisten🇨🇦e/acc, Yam Peleg, LDJ, Jason Kneen, Wolfram Ravenwolf: awesome way to go through the latest releases!

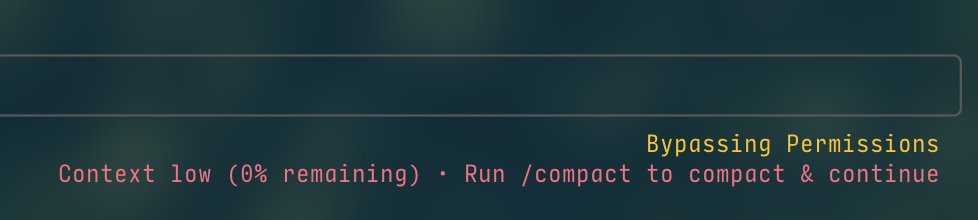

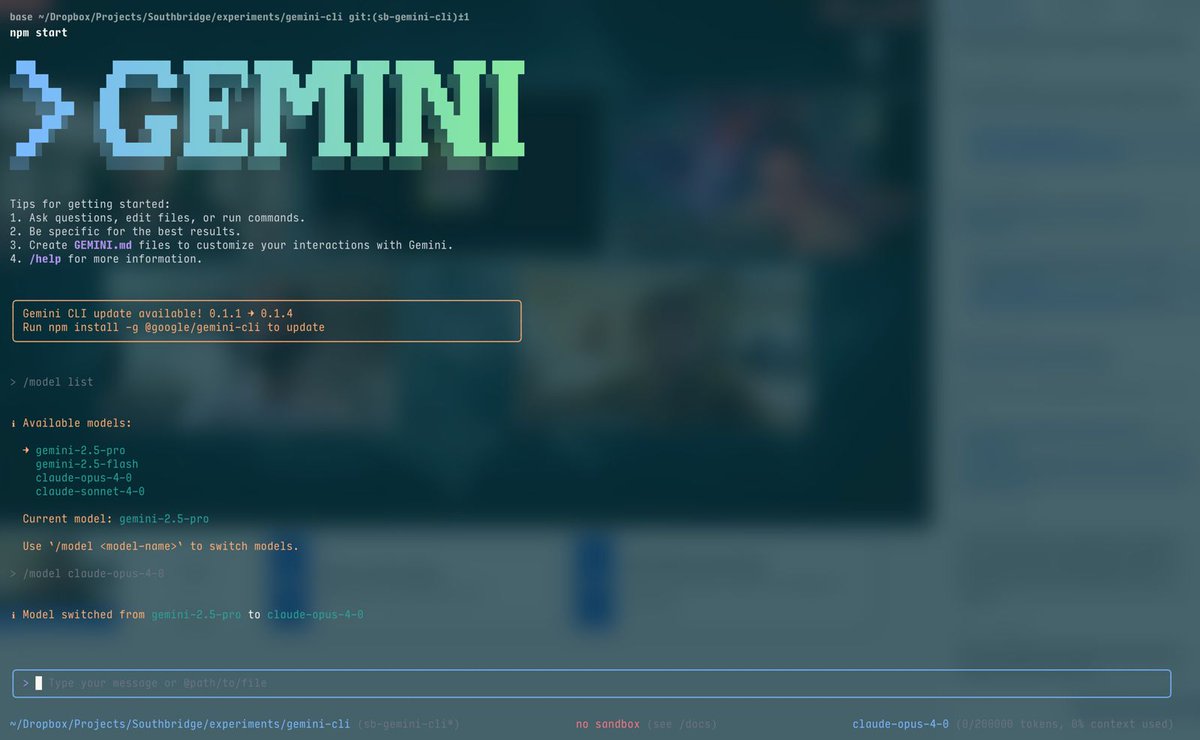

Super simple:

1. Make a Cloudflare gateway at developers.cloudflare.com/ai-gateway/get…
2. Get the account id and gateway id.
3. Run `ANTHROPIC_BASE_URL=gateway.ai.cloudflare.com/v1/<account-id>/<gateway-id>/anthropic claude`
4. Look at charts and drink wine.
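The base-URL swap in the tweet above can be sketched in Python as a small helper that builds the gateway URL from the two ids and returns an environment for the `claude` CLI. This is a minimal sketch under stated assumptions: it adds an `https://` scheme (Twitter's display drops it), the helper names `anthropic_gateway_url` and `claude_env` are hypothetical, the ids are placeholders, and actually launching `claude` assumes the CLI is installed and on your PATH.

```python
import os

# Assumed full form of the gateway host shown in the tweet (scheme added).
GATEWAY_HOST = "https://gateway.ai.cloudflare.com/v1"


def anthropic_gateway_url(account_id: str, gateway_id: str) -> str:
    """Hypothetical helper: build the ANTHROPIC_BASE_URL value for a Cloudflare AI Gateway."""
    if not account_id or not gateway_id:
        raise ValueError("account_id and gateway_id must be non-empty")
    # "anthropic" is the provider path segment used in the tweet.
    return f"{GATEWAY_HOST}/{account_id}/{gateway_id}/anthropic"


def claude_env(account_id: str, gateway_id: str) -> dict:
    """Hypothetical helper: copy the current environment and point ANTHROPIC_BASE_URL at the gateway."""
    env = dict(os.environ)
    env["ANTHROPIC_BASE_URL"] = anthropic_gateway_url(account_id, gateway_id)
    return env


if __name__ == "__main__":
    # Placeholder ids; substitute the ones from your Cloudflare dashboard.
    env = claude_env("my-account-id", "my-gateway-id")
    print(env["ANTHROPIC_BASE_URL"])
    # To launch the CLI with this environment (assumes `claude` is on PATH):
    # import subprocess; subprocess.run(["claude"], env=env)
```

Returning a copied environment rather than mutating `os.environ` keeps the override scoped to the one `claude` process you start with it.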

Minu (@minune29): "frog chilling on southbridge chip"

FLUX.1 [dev]: no idea what a southbridge is, but at every iteration, this frog will be vibing

Love all the frogs

![Minu (@minune29) on Twitter photo: "frog chilling on southbridge chip"](https://pbs.twimg.com/media/GuY9W3QaMAAkO2Z.jpg)