Ihab Ilyas

@ihabilyas

professor and co-founder

ID: 262387823

http://www.cs.uwaterloo.ca/~ilyas

07-03-2011 23:30:54

379 Tweets

1.1K Followers

186 Following

Come hear about work on datamodels (arxiv.org/abs/2202.00622) at ICML *tomorrow* in the Deep Learning/Optimization track (Rm 309)! The presentation is at 4:50 with a poster session at 6:30. Joint work with Sam Park, Logan Engstrom, Guillaume Leclerc, and Aleksander Madry.

Big news from Formerly Axelar, now at @axelar. Congratulations on the launch!

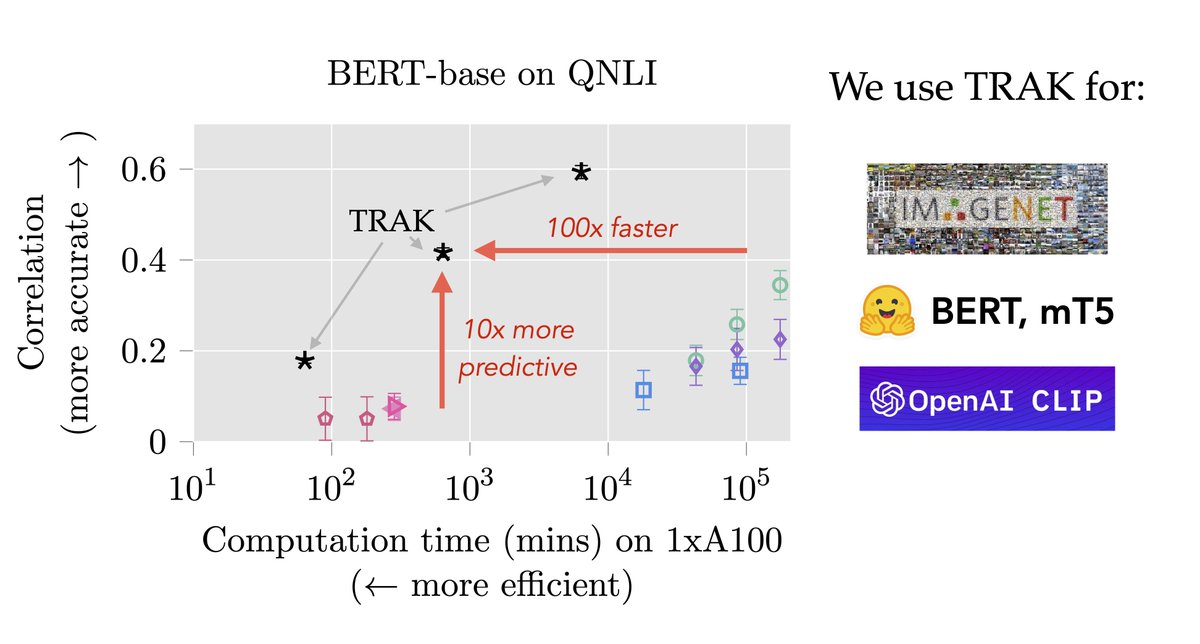

As ML models/datasets get bigger + more opaque, we need a *scalable* way to ask: where in the *data* did a prediction come from? Presenting TRAK: data attribution with (significantly) better speed/efficacy tradeoffs. w/ Sam Park, Kristian Georgiev, Andrew Ilyas, Guillaume Leclerc 1/6

"Data Science–A Systematic Treatment," by M. Tamer @Ozsu University of Waterloo, discusses the scope of #DataScience and highlights aspects that are not part of AI bit.ly/3JqNp5z. Özsu talks about leveraging data and deploying data science in a #video. bit.ly/3NJaToY

🥳 Super excited about multiple #EMNLP2023 acceptances. Two by my summer interns at Apple: Simone Conia on growing multilingual KG and Farima Fatahi Bayat on factual error detection & correction. One with my former colleagues at IBM Research on a novel labeling tool. Details coming soon.

Two more ACM Fellows for the University of Waterloo. Congrats to my colleagues Shai and Ian. Well deserved! Association for Computing Machinery, Waterloo Mathematics, Waterloo's Cheriton School of Computer Science

ConvKGYarn🧶: Spinning Configurable & Scalable Conversational KGQA datasets with LLMs is accepted at #EMNLP2024 Industry Track with a top-20% rec⭐️ Work done at Apple with Daniel Lee, Ali, Jeff, Yisi Sang, Jimmy Lin, Ihab Ilyas, Saloni Potdar, Mostafa, Yunyao Li ✈️ ACL'2025!

Want state-of-the-art data curation, data poisoning & more? Just do gradient descent! w/ Andrew Ilyas, Ben Chen, Axel Feldmann, Billy Moses, Aleksander Madry: we show how to optimize final model loss wrt any continuous variable. Key idea: Metagradients (grads through model training)

“How will my model behave if I change the training data?” Recent(-ish) work w/ Logan Engstrom: we nearly *perfectly* predict ML model behavior as a function of training data, saturating benchmarks for this problem (called “data attribution”).